中文 | English

💜 Qwen Chat | 🤗 Hugging Face | 🤖 ModelScope | 📑 Blog | 📚 Cookbooks | 📑 Paper

🖥️ Demo | 💬 WeChat (微信) | 🫨 Discord | 📑 API

## News

* 2025.04.11: We have officially released a vLLM version that supports audio output! Try it by building from source or by using our Docker image.

* 2025.04.02: ⭐️⭐️⭐️ Qwen2.5-Omni reached top-1 on Hugging Face Trending!

* 2025.03.29: ⭐️⭐️⭐️ Qwen2.5-Omni reached top-2 on Hugging Face Trending!

* 2025.03.26: Real-time interaction with Qwen2.5-Omni is now available in [Qwen Chat](https://chat.qwen.ai/). Give it a try!

* 2025.03.26: We released [Qwen2.5-Omni](https://huggingface.co/collections/Qwen/qwen25-omni-67de1e5f0f9464dc6314b36e). For more information, visit our [blog](https://qwenlm.github.io/zh/blog/qwen2.5-omni/)!

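## Contents

- [Overview](#overview)
  - [Introduction](#introduction)
  - [Key Features](#key-features)
  - [Model Architecture](#model-architecture)
  - [Performance](#performance)
- [Quickstart](#quickstart)
  - [Transformers Usage](#--transformers-usage)
  - [ModelScope Usage](#-modelscope-usage)
  - [Usage Tips](#usage-tips)
  - [Cookbooks for More Usage Cases](#cookbooks-for-more-usage-cases)
  - [API Inference](#api-inference)
  - [Customization Settings](#customization-settings)
- [Chat with Qwen2.5-Omni](#chat-with-qwen25-omni)
  - [Online Demo](#online-demo)
  - [Launch Local Web UI Demo](#launch-local-web-ui-demo)
  - [Real-Time Interaction](#real-time-interaction)
- [Deployment with vLLM](#deployment-with-vllm)
- [Docker](#-docker)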

## 概览

### 简介

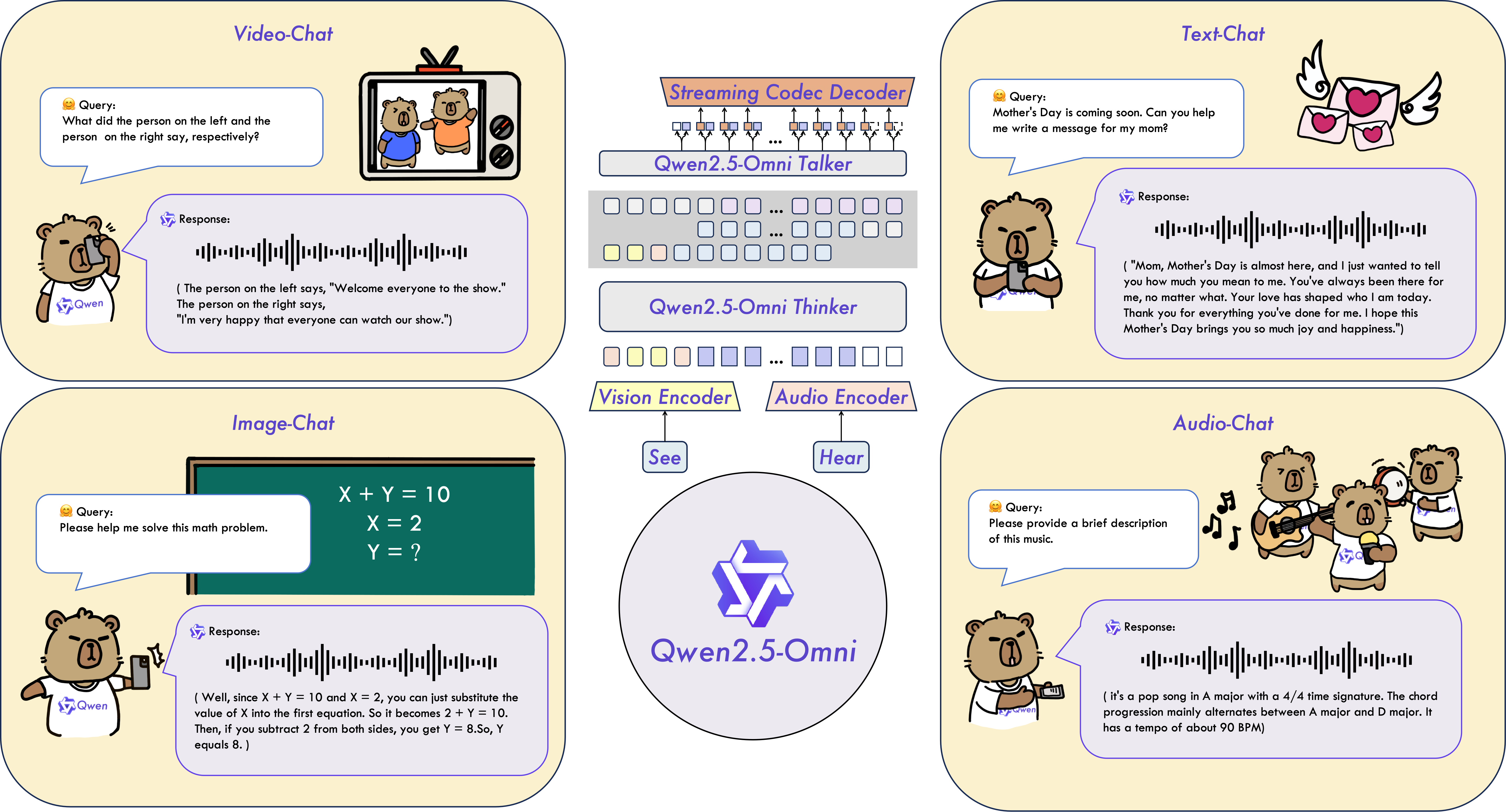

Qwen 2.5-Omni是一个端到端的多模态大语言模型,旨在感知包括文本、图像、音频和视频在内的多种模态,同时以流式的方式生成文本和自然语音响应。

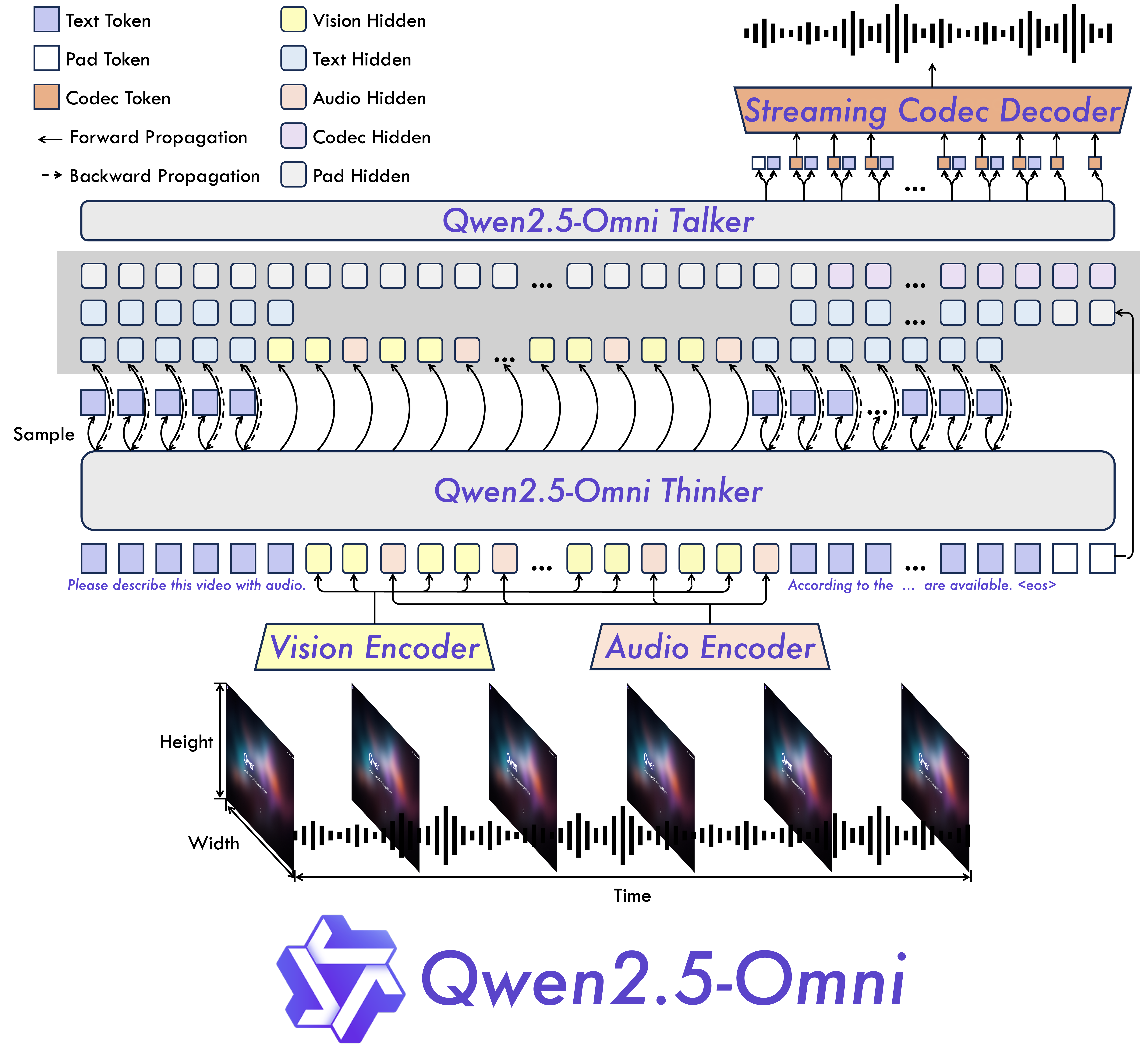

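### Key Features

* **Omni and novel architecture**: We propose the Thinker-Talker architecture, an end-to-end multimodal model designed to understand text, images, audio, and video across modalities while generating text and natural speech responses in a streaming manner. We also propose a new position embedding technique, TMRoPE (Time-aligned Multimodal RoPE), which aligns video and audio inputs along the time axis so that the two streams stay precisely synchronized.

* **Real-time voice and video chat**: The architecture is designed for fully real-time interaction, supporting chunked input and immediate output.

* **Natural and robust speech generation**: Qwen2.5-Omni surpasses many existing streaming and non-streaming alternatives in the naturalness and robustness of its generated speech.

* **Strong performance across modalities**: Qwen2.5-Omni performs strongly when benchmarked against similarly sized single-modality models. It outperforms the similarly sized Qwen2-Audio in audio capabilities and is on par with Qwen2.5-VL-7B.

* **Excellent end-to-end speech instruction following**: Qwen2.5-Omni follows end-to-end speech instructions about as well as it handles text input, and it performs well on benchmarks such as MMLU (general knowledge understanding) and GSM8K (mathematical reasoning).

### Model Architecture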

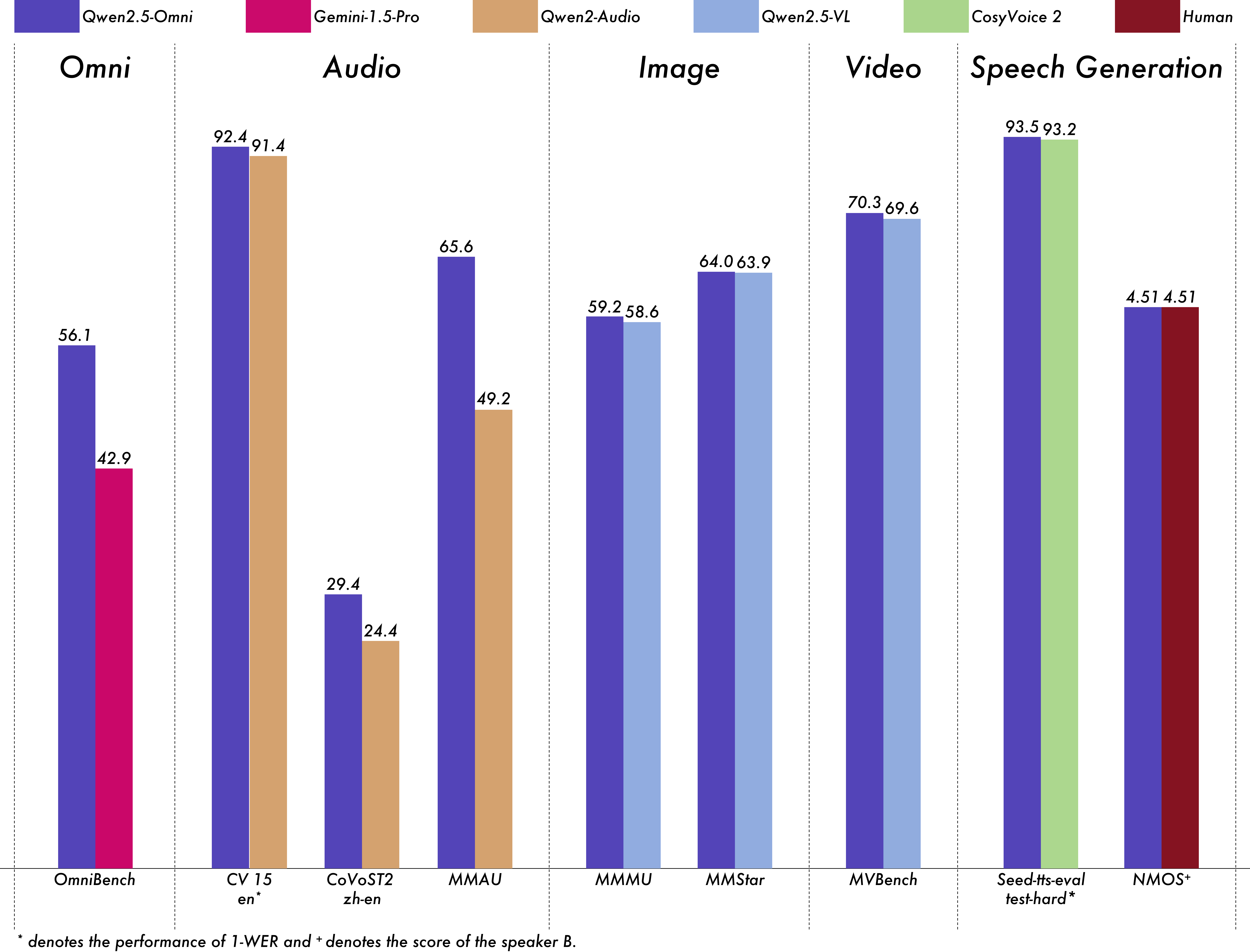

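### Performance

Across image, audio, and audio-visual modalities, Qwen2.5-Omni delivers strong results compared with similarly sized single-modality models and closed-source models such as Qwen2.5-VL-7B, Qwen2-Audio, and Gemini-1.5-pro. On the multimodal benchmark OmniBench, Qwen2.5-Omni achieves state-of-the-art performance. In single-modality tasks, it also performs well in several areas: speech recognition (Common Voice), translation (CoVoST2), audio understanding (MMAU), image reasoning (MMMU, MMStar), video understanding (MVBench), and speech generation (Seed-tts-eval and subjective naturalness).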

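Multimodality -> Text

| Dataset | Model | Speech | Sound Event | Music | Avg |
|---------|-------|--------|-------------|-------|-----|
| OmniBench | Gemini-1.5-Pro | 42.67% | 42.26% | 46.23% | 42.91% |
| OmniBench | MIO-Instruct | 36.96% | 33.58% | 11.32% | 33.80% |
| OmniBench | AnyGPT (7B) | 17.77% | 20.75% | 13.21% | 18.04% |
| OmniBench | video-SALMONN | 34.11% | 31.70% | 56.60% | 35.64% |
| OmniBench | UnifiedIO2-xlarge | 39.56% | 36.98% | 29.25% | 38.00% |
| OmniBench | UnifiedIO2-xxlarge | 34.24% | 36.98% | 24.53% | 33.98% |
| OmniBench | MiniCPM-o | - | - | - | 40.50% |
| OmniBench | Baichuan-Omni-1.5 | - | - | - | 42.90% |
| OmniBench | Qwen2.5-Omni-7B | 55.25% | 60.00% | 52.83% | 56.13% |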

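Audio -> Text

ASR: Librispeech

| Model | dev-clean | dev-other | test-clean | test-other |
|-------|-----------|-----------|------------|------------|
| SALMONN | - | - | 2.1 | 4.9 |
| SpeechVerse | - | - | 2.1 | 4.4 |
| Whisper-large-v3 | - | - | 1.8 | 3.6 |
| Llama-3-8B | - | - | - | 3.4 |
| Llama-3-70B | - | - | - | 3.1 |
| Seed-ASR-Multilingual | - | - | 1.6 | 2.8 |
| MiniCPM-o | - | - | 1.7 | - |
| MinMo | - | - | 1.7 | 3.9 |
| Qwen-Audio | 1.8 | 4.0 | 2.0 | 4.2 |
| Qwen2-Audio | 1.3 | 3.4 | 1.6 | 3.6 |
| Qwen2.5-Omni-7B | 1.6 | 3.5 | 1.8 | 3.4 |

ASR: Common Voice 15

| Model | en | zh | yue | fr |
|-------|----|----|-----|----|
| Whisper-large-v3 | 9.3 | 12.8 | 10.9 | 10.8 |
| MinMo | 7.9 | 6.3 | 6.4 | 8.5 |
| Qwen2-Audio | 8.6 | 6.9 | 5.9 | 9.6 |
| Qwen2.5-Omni-7B | 7.6 | 5.2 | 7.3 | 7.5 |

ASR: Fleurs

| Model | zh | en |
|-------|----|----|
| Whisper-large-v3 | 7.7 | 4.1 |
| Seed-ASR-Multilingual | - | 3.4 |
| Megrez-3B-Omni | 10.8 | - |
| MiniCPM-o | 4.4 | - |
| MinMo | 3.0 | 3.8 |
| Qwen2-Audio | 7.5 | - |
| Qwen2.5-Omni-7B | 3.0 | 4.1 |

ASR: Wenetspeech

| Model | test-net | test-meeting |
|-------|----------|--------------|
| Seed-ASR-Chinese | 4.7 | 5.7 |
| Megrez-3B-Omni | - | 16.4 |
| MiniCPM-o | 6.9 | - |
| MinMo | 6.8 | 7.4 |
| Qwen2.5-Omni-7B | 5.9 | 7.7 |

ASR: Voxpopuli-V1.0-en

| Model | Performance |
|-------|-------------|
| Llama-3-8B | 6.2 |
| Llama-3-70B | 5.7 |
| Qwen2.5-Omni-7B | 5.8 |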

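S2TT: CoVoST2

| Model | en-de | de-en | en-zh | zh-en |
|-------|-------|-------|-------|-------|
| SALMONN | 18.6 | - | 33.1 | - |
| SpeechLLaMA | - | 27.1 | - | 12.3 |
| BLSP | 14.1 | - | - | - |
| MiniCPM-o | - | - | 48.2 | 27.2 |
| MinMo | - | 39.9 | 46.7 | 26.0 |
| Qwen-Audio | 25.1 | 33.9 | 41.5 | 15.7 |
| Qwen2-Audio | 29.9 | 35.2 | 45.2 | 24.4 |
| Qwen2.5-Omni-7B | 30.2 | 37.7 | 41.4 | 29.4 |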

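SER, VSC, and Music

| Task | Dataset | Model | Performance |
|------|---------|-------|-------------|
| SER | Meld | WavLM-large | 0.542 |
| SER | Meld | MiniCPM-o | 0.524 |
| SER | Meld | Qwen-Audio | 0.557 |
| SER | Meld | Qwen2-Audio | 0.553 |
| SER | Meld | Qwen2.5-Omni-7B | 0.570 |
| VSC | VocalSound | CLAP | 0.495 |
| VSC | VocalSound | Pengi | 0.604 |
| VSC | VocalSound | Qwen-Audio | 0.929 |
| VSC | VocalSound | Qwen2-Audio | 0.939 |
| VSC | VocalSound | Qwen2.5-Omni-7B | 0.939 |
| Music | GiantSteps Tempo | Llark-7B | 0.86 |
| Music | GiantSteps Tempo | Qwen2.5-Omni-7B | 0.88 |
| Music | MusicCaps | LP-MusicCaps | 0.291\|0.149\|0.089\|0.061\|0.129\|0.130 |
| Music | MusicCaps | Qwen2.5-Omni-7B | 0.328\|0.162\|0.090\|0.055\|0.127\|0.225 |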

Audio Reasoning: MMAU

| Model | Sound | Music | Speech | Avg |
|-------|-------|-------|--------|-----|
| Gemini-Pro-V1.5 | 56.75 | 49.40 | 58.55 | 54.90 |
| Qwen2-Audio | 54.95 | 50.98 | 42.04 | 49.20 |
| Qwen2.5-Omni-7B | 67.87 | 69.16 | 59.76 | 65.60 |

Voice Chatting: VoiceBench

| Model | AlpacaEval | CommonEval | SD-QA | MMSU | OpenBookQA | IFEval | AdvBench | Avg |
|-------|------------|------------|-------|------|------------|--------|----------|-----|
| Ultravox-v0.4.1-LLaMA-3.1-8B | 4.55 | 3.90 | 53.35 | 47.17 | 65.27 | 66.88 | 98.46 | 71.45 |
| MERaLiON | 4.50 | 3.77 | 55.06 | 34.95 | 27.23 | 62.93 | 94.81 | 62.91 |
| Megrez-3B-Omni | 3.50 | 2.95 | 25.95 | 27.03 | 28.35 | 25.71 | 87.69 | 46.25 |
| Lyra-Base | 3.85 | 3.50 | 38.25 | 49.74 | 72.75 | 36.28 | 59.62 | 57.66 |
| MiniCPM-o | 4.42 | 4.15 | 50.72 | 54.78 | 78.02 | 49.25 | 97.69 | 71.69 |
| Baichuan-Omni-1.5 | 4.50 | 4.05 | 43.40 | 57.25 | 74.51 | 54.54 | 97.31 | 71.14 |
| Qwen2-Audio | 3.74 | 3.43 | 35.71 | 35.72 | 49.45 | 26.33 | 96.73 | 55.35 |
| Qwen2.5-Omni-7B | 4.49 | 3.93 | 55.71 | 61.32 | 81.10 | 52.87 | 99.42 | 74.12 |

Image -> Text

| Dataset | Qwen2.5-Omni-7B | Other Best | Qwen2.5-VL-7B | GPT-4o-mini |
|--------------------------------|--------------|------------|---------------|-------------|
| MMMU (val) | 59.2 | 53.9 | 58.6 | **60.0** |
| MMMU-Pro (overall) | 36.6 | - | **38.3** | 37.6 |
| MathVista (testmini) | 67.9 | **71.9** | 68.2 | 52.5 |
| MathVision (full) | 25.0 | 23.1 | **25.1** | - |
| MMBench-V1.1-EN (test) | 81.8 | 80.5 | **82.6** | 76.0 |
| MMVet (turbo) | 66.8 | **67.5** | 67.1 | 66.9 |
| MMStar | **64.0** | **64.0** | 63.9 | 54.8 |
| MME (sum) | 2340 | **2372** | 2347 | 2003 |
| MuirBench | **59.2** | - | **59.2** | - |
| CRPE (relation) | **76.5** | - | 76.4 | - |
| RealWorldQA (avg) | 70.3 | **71.9** | 68.5 | - |
| MME-RealWorld (en) | **61.6** | - | 57.4 | - |
| MM-MT-Bench | 6.0 | - | **6.3** | - |
| AI2D | 83.2 | **85.8** | 83.9 | - |
| TextVQA (val) | 84.4 | 83.2 | **84.9** | - |
| DocVQA (test) | 95.2 | 93.5 | **95.7** | - |
| ChartQA (test avg) | 85.3 | 84.9 | **87.3** | - |
| OCRBench_V2 (en) | **57.8** | - | 56.3 | - |

| Dataset | Qwen2.5-Omni-7B | Qwen2.5-VL-7B | Grounding DINO | Gemini 1.5 Pro |
|--------------------------|--------------|---------------|----------------|----------------|
| Refcoco (val) | 90.5 | 90.0 | **90.6** | 73.2 |
| Refcoco (testA) | **93.5** | 92.5 | 93.2 | 72.9 |
| Refcoco (testB) | 86.6 | 85.4 | **88.2** | 74.6 |
| Refcoco+ (val) | 85.4 | 84.2 | **88.2** | 62.5 |
| Refcoco+ (testA) | **91.0** | 89.1 | 89.0 | 63.9 |
| Refcoco+ (testB) | **79.3** | 76.9 | 75.9 | 65.0 |
| Refcocog+ (val) | **87.4** | 87.2 | 86.1 | 75.2 |
| Refcocog+ (test) | **87.9** | 87.2 | 87.0 | 76.2 |
| ODinW | 42.4 | 37.3 | **55.0** | 36.7 |
| PointGrounding | 66.5 | **67.3** | - | - |

Video (without audio) -> Text

| Dataset | Qwen2.5-Omni-7B | Other Best | Qwen2.5-VL-7B | GPT-4o-mini |
|-----------------------------|--------------|------------|---------------|-------------|
| Video-MME (w/o sub) | 64.3 | 63.9 | **65.1** | 64.8 |
| Video-MME (w sub) | **72.4** | 67.9 | 71.6 | - |
| MVBench | **70.3** | 67.2 | 69.6 | - |
| EgoSchema (test) | **68.6** | 63.2 | 65.0 | - |

Zero-shot Speech Generation

Content Consistency: SEED

| Model | test-zh | test-en | test-hard |
|-------|---------|---------|-----------|
| Seed-TTS_ICL | 1.11 | 2.24 | 7.58 |
| Seed-TTS_RL | 1.00 | 1.94 | 6.42 |
| MaskGCT | 2.27 | 2.62 | 10.27 |
| E2_TTS | 1.97 | 2.19 | - |
| F5-TTS | 1.56 | 1.83 | 8.67 |
| CosyVoice 2 | 1.45 | 2.57 | 6.83 |
| CosyVoice 2-S | 1.45 | 2.38 | 8.08 |
| Qwen2.5-Omni-7B_ICL | 1.70 | 2.72 | 7.97 |
| Qwen2.5-Omni-7B_RL | 1.42 | 2.32 | 6.54 |

Speaker Similarity: SEED

| Model | test-zh | test-en | test-hard |
|-------|---------|---------|-----------|
| Seed-TTS_ICL | 0.796 | 0.762 | 0.776 |
| Seed-TTS_RL | 0.801 | 0.766 | 0.782 |
| MaskGCT | 0.774 | 0.714 | 0.748 |
| E2_TTS | 0.730 | 0.710 | - |
| F5-TTS | 0.741 | 0.647 | 0.713 |
| CosyVoice 2 | 0.748 | 0.652 | 0.724 |
| CosyVoice 2-S | 0.753 | 0.654 | 0.732 |
| Qwen2.5-Omni-7B_ICL | 0.752 | 0.632 | 0.747 |
| Qwen2.5-Omni-7B_RL | 0.754 | 0.641 | 0.752 |

Text -> Text

| Dataset | Qwen2.5-Omni-7B | Qwen2.5-7B | Qwen2-7B | Llama3.1-8B | Gemma2-9B |
|-----------------------------------|-----------|------------|----------|-------------|-----------|
| MMLU-Pro | 47.0 | **56.3** | 44.1 | 48.3 | 52.1 |
| MMLU-redux | 71.0 | **75.4** | 67.3 | 67.2 | 72.8 |
| LiveBench (0831) | 29.6 | **35.9** | 29.2 | 26.7 | 30.6 |
| GPQA | 30.8 | **36.4** | 34.3 | 32.8 | 32.8 |
| MATH | 71.5 | **75.5** | 52.9 | 51.9 | 44.3 |
| GSM8K | 88.7 | **91.6** | 85.7 | 84.5 | 76.7 |
| HumanEval | 78.7 | **84.8** | 79.9 | 72.6 | 68.9 |
| MBPP | 73.2 | **79.2** | 67.2 | 69.6 | 74.9 |
| MultiPL-E | 65.8 | **70.4** | 59.1 | 50.7 | 53.4 |
| LiveCodeBench (2305-2409) | 24.6 | **28.7** | 23.9 | 8.3 | 18.9 |

Minimum GPU memory requirements

| Precision | 15 s video | 30 s video | 60 s video |
|-----------| ------------- | --------- | -------------- |
| FP32 | 93.56 GB | Not recommended | Not recommended |
| BF16 | 31.11 GB | 41.85 GB | 60.19 GB |

Note: The table above shows only the theoretical minimum memory for inference with `transformers`, and the `BF16` figures were measured with `attn_implementation="flash_attention_2"`. In practice, memory usage is typically at least 1.2 times higher. For more information, see [here](https://huggingface.co/docs/accelerate/main/en/usage_guides/model_size_estimator).
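The 1.2x rule of thumb in the note above can be turned into a quick budgeting check. The sketch below is illustrative only: the dictionary copies the figures from the table, while `practical_memory_gb` and its `overhead` parameter are hypothetical names, and 1.2 is treated as a lower bound rather than a measured value.

```python
# Theoretical minimum GPU memory in GB, copied from the table above.
# Keys are (precision, video length in seconds).
MIN_MEMORY_GB = {
    ("FP32", 15): 93.56,
    ("BF16", 15): 31.11,
    ("BF16", 30): 41.85,
    ("BF16", 60): 60.19,
}


def practical_memory_gb(precision, video_seconds, overhead=1.2):
    """Scale the theoretical minimum by an overhead factor (assumed >= 1.2)."""
    key = (precision.upper(), video_seconds)
    if key not in MIN_MEMORY_GB:
        raise ValueError(f"No figure in the table for {key}")
    return round(MIN_MEMORY_GB[key] * overhead, 2)


if __name__ == "__main__":
    for precision, seconds in MIN_MEMORY_GB:
        estimate = practical_memory_gb(precision, seconds)
        print(f"{precision}, {seconds} s video: plan for at least {estimate} GB")
```

Because the note says "at least 1.2 times", the result is better read as a floor for capacity planning than as a guarantee that a given GPU will fit the workload.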

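Video URL usage

Whether a video URL can be read depends on the version of the third-party library in use. The details are listed in the table below. If you do not want the default backend, override it by setting `FORCE_QWENVL_VIDEO_READER=torchvision` or `FORCE_QWENVL_VIDEO_READER=decord`.

| Backend | HTTP | HTTPS |
|-------------|------|-------|
| torchvision >= 0.19.0 | ✅ | ✅ |
| torchvision < 0.19.0 | ❌ | ❌ |
| decord | ✅ | ❌ |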
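The compatibility table can be encoded as a small selection helper. This is a minimal sketch under stated assumptions: `choose_video_reader` and `_version_tuple` are hypothetical helpers written for illustration, the example URL is a placeholder, and the sketch assumes the environment variable is set before the video-loading utilities are first used. Only the variable name `FORCE_QWENVL_VIDEO_READER` and its two values come from the text above.

```python
import os
import re
from urllib.parse import urlparse


def _version_tuple(version):
    """Turn a version string such as '0.19.0+cu121' into (0, 19, 0)."""
    numbers = []
    for piece in version.split("+")[0].split(".")[:3]:
        match = re.match(r"\d+", piece)
        numbers.append(int(match.group()) if match else 0)
    while len(numbers) < 3:
        numbers.append(0)
    return tuple(numbers)


def choose_video_reader(url, torchvision_version):
    """Pick a backend that can read `url`, following the table above.

    Returns None for local paths, where the library default is kept.
    """
    scheme = urlparse(url).scheme.lower()
    if scheme not in ("http", "https"):
        return None
    if _version_tuple(torchvision_version) >= (0, 19, 0):
        return "torchvision"  # reads both HTTP and HTTPS
    if scheme == "http":
        return "decord"  # reads HTTP only
    raise ValueError("HTTPS video URLs require torchvision >= 0.19.0")


# Placeholder URL and version string, for illustration only.
reader = choose_video_reader("https://example.com/clip.mp4", "0.19.0")
if reader is not None:
    os.environ["FORCE_QWENVL_VIDEO_READER"] = reader
```

In a real script the version string would come from the installed package (for example `torchvision.__version__`) rather than a literal.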

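Batch inference

When `return_audio=False` is set, the model supports batched inference over mixed inputs, so a single batch can contain samples of different types, such as text, images, audio, and video. The following code snippet shows an example.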

```python
from qwen_omni_utils import process_mm_info

# `model` and `processor` are assumed to be loaded already
# (see the Transformers usage section).

# Sample messages for batch inference

# Conversation with video only
conversation1 = [
    {
        "role": "system",
        "content": [
            {"type": "text", "text": "You are Qwen, a virtual human developed by the Qwen Team, Alibaba Group, capable of perceiving auditory and visual inputs, as well as generating text and speech."}
        ],
    },
    {
        "role": "user",
        "content": [
            {"type": "video", "video": "/path/to/video.mp4"},
        ],
    },
]

# Conversation with audio only
conversation2 = [
    {
        "role": "system",
        "content": [
            {"type": "text", "text": "You are Qwen, a virtual human developed by the Qwen Team, Alibaba Group, capable of perceiving auditory and visual inputs, as well as generating text and speech."}
        ],
    },
    {
        "role": "user",
        "content": [
            {"type": "audio", "audio": "/path/to/audio.wav"},
        ],
    },
]

# Conversation with pure text
conversation3 = [
    {
        "role": "system",
        "content": [
            {"type": "text", "text": "You are Qwen, a virtual human developed by the Qwen Team, Alibaba Group, capable of perceiving auditory and visual inputs, as well as generating text and speech."}
        ],
    },
    {
        "role": "user",
        "content": "who are you?",
    },
]

# Conversation with mixed media
conversation4 = [
    {
        "role": "system",
        "content": [
            {"type": "text", "text": "You are Qwen, a virtual human developed by the Qwen Team, Alibaba Group, capable of perceiving auditory and visual inputs, as well as generating text and speech."}
        ],
    },
    {
        "role": "user",
        "content": [
            {"type": "image", "image": "/path/to/image.jpg"},
            {"type": "video", "video": "/path/to/video.mp4"},
            {"type": "audio", "audio": "/path/to/audio.wav"},
            {"type": "text", "text": "What elements can you see and hear in these media?"},
        ],
    },
]

# Combine messages for batch processing
conversations = [conversation1, conversation2, conversation3, conversation4]

# Whether to use the audio track embedded in the video
USE_AUDIO_IN_VIDEO = True

# Preparation for batch inference
text = processor.apply_chat_template(conversations, add_generation_prompt=True, tokenize=False)
audios, images, videos = process_mm_info(conversations, use_audio_in_video=USE_AUDIO_IN_VIDEO)
inputs = processor(text=text, audio=audios, images=images, videos=videos, return_tensors="pt", padding=True, use_audio_in_video=USE_AUDIO_IN_VIDEO)
inputs = inputs.to(model.device).to(model.dtype)

# Batch inference (text output only, since return_audio=False)
text_ids = model.generate(**inputs, use_audio_in_video=USE_AUDIO_IN_VIDEO, return_audio=False)
text = processor.batch_decode(text_ids, skip_special_tokens=True, clean_up_tokenization_spaces=False)
print(text)
```
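The batch example decodes the full output of `model.generate`. Assuming the returned sequences begin with the (padded) prompt tokens, as is usual for decoder-only generation in `transformers`, each decoded string repeats the prompt before the reply. A minimal sketch of trimming the prompt is shown below with plain Python lists so that it runs without a model; `strip_prompt_tokens` is a hypothetical helper, not part of the Qwen2.5-Omni API, and the token IDs are made up.

```python
from typing import List, Sequence


def strip_prompt_tokens(sequences: Sequence[Sequence[int]], prompt_length: int) -> List[List[int]]:
    """Drop the first `prompt_length` tokens from every sequence in a batch.

    Assumes every row was padded to the same prompt length before generation,
    so the newly generated tokens start at the same index in each row.
    """
    if prompt_length < 0:
        raise ValueError("prompt_length must be non-negative")
    return [list(sequence[prompt_length:]) for sequence in sequences]


if __name__ == "__main__":
    # Two made-up rows: a 3-token padded prompt followed by generated tokens.
    generated = [[0, 11, 12, 101, 102], [21, 22, 23, 201, 202]]
    print(strip_prompt_tokens(generated, prompt_length=3))
```

With the tensors from the batch example, the equivalent slice would be `text_ids[:, inputs["input_ids"].shape[1]:]` applied before `processor.batch_decode`, under the same assumption about the output layout.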