diff --git a/.gitignore b/.gitignore

index 72cb0b7534647201a06f79851b5fb6c35f96ef0a..549e00a2a96fa9d7c5dbc9859664a78d980158c2 100644

--- a/.gitignore

+++ b/.gitignore

@@ -1,2 +1,33 @@

-# Project exclude paths

-/target/

\ No newline at end of file

+HELP.md

+target/

+!.mvn/wrapper/maven-wrapper.jar

+!**/src/main/**/target/

+!**/src/test/**/target/

+

+### STS ###

+.apt_generated

+.classpath

+.factorypath

+.project

+.settings

+.springBeans

+.sts4-cache

+

+### IntelliJ IDEA ###

+.idea

+*.iws

+*.iml

+*.ipr

+

+### NetBeans ###

+/nbproject/private/

+/nbbuild/

+/dist/

+/nbdist/

+/.nb-gradle/

+build/

+!**/src/main/**/build/

+!**/src/test/**/build/

+

+### VS Code ###

+.vscode/

diff --git a/20240229172018.gif b/20240229172018.gif

new file mode 100644

index 0000000000000000000000000000000000000000..7b55fc07d514c6ca57e236b2a4f937e177e2a9da

Binary files /dev/null and b/20240229172018.gif differ

diff --git a/LICENSE b/LICENSE

new file mode 100644

index 0000000000000000000000000000000000000000..29f81d812f3e768fa89638d1f72920dbfd1413a8

--- /dev/null

+++ b/LICENSE

@@ -0,0 +1,201 @@

+ Apache License

+ Version 2.0, January 2004

+ http://www.apache.org/licenses/

+

+ TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

+

+ 1. Definitions.

+

+ "License" shall mean the terms and conditions for use, reproduction,

+ and distribution as defined by Sections 1 through 9 of this document.

+

+ "Licensor" shall mean the copyright owner or entity authorized by

+ the copyright owner that is granting the License.

+

+ "Legal Entity" shall mean the union of the acting entity and all

+ other entities that control, are controlled by, or are under common

+ control with that entity. For the purposes of this definition,

+ "control" means (i) the power, direct or indirect, to cause the

+ direction or management of such entity, whether by contract or

+ otherwise, or (ii) ownership of fifty percent (50%) or more of the

+ outstanding shares, or (iii) beneficial ownership of such entity.

+

+ "You" (or "Your") shall mean an individual or Legal Entity

+ exercising permissions granted by this License.

+

+ "Source" form shall mean the preferred form for making modifications,

+ including but not limited to software source code, documentation

+ source, and configuration files.

+

+ "Object" form shall mean any form resulting from mechanical

+ transformation or translation of a Source form, including but

+ not limited to compiled object code, generated documentation,

+ and conversions to other media types.

+

+ "Work" shall mean the work of authorship, whether in Source or

+ Object form, made available under the License, as indicated by a

+ copyright notice that is included in or attached to the work

+ (an example is provided in the Appendix below).

+

+ "Derivative Works" shall mean any work, whether in Source or Object

+ form, that is based on (or derived from) the Work and for which the

+ editorial revisions, annotations, elaborations, or other modifications

+ represent, as a whole, an original work of authorship. For the purposes

+ of this License, Derivative Works shall not include works that remain

+ separable from, or merely link (or bind by name) to the interfaces of,

+ the Work and Derivative Works thereof.

+

+ "Contribution" shall mean any work of authorship, including

+ the original version of the Work and any modifications or additions

+ to that Work or Derivative Works thereof, that is intentionally

+ submitted to Licensor for inclusion in the Work by the copyright owner

+ or by an individual or Legal Entity authorized to submit on behalf of

+ the copyright owner. For the purposes of this definition, "submitted"

+ means any form of electronic, verbal, or written communication sent

+ to the Licensor or its representatives, including but not limited to

+ communication on electronic mailing lists, source code control systems,

+ and issue tracking systems that are managed by, or on behalf of, the

+ Licensor for the purpose of discussing and improving the Work, but

+ excluding communication that is conspicuously marked or otherwise

+ designated in writing by the copyright owner as "Not a Contribution."

+

+ "Contributor" shall mean Licensor and any individual or Legal Entity

+ on behalf of whom a Contribution has been received by Licensor and

+ subsequently incorporated within the Work.

+

+ 2. Grant of Copyright License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ copyright license to reproduce, prepare Derivative Works of,

+ publicly display, publicly perform, sublicense, and distribute the

+ Work and such Derivative Works in Source or Object form.

+

+ 3. Grant of Patent License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ (except as stated in this section) patent license to make, have made,

+ use, offer to sell, sell, import, and otherwise transfer the Work,

+ where such license applies only to those patent claims licensable

+ by such Contributor that are necessarily infringed by their

+ Contribution(s) alone or by combination of their Contribution(s)

+ with the Work to which such Contribution(s) was submitted. If You

+ institute patent litigation against any entity (including a

+ cross-claim or counterclaim in a lawsuit) alleging that the Work

+ or a Contribution incorporated within the Work constitutes direct

+ or contributory patent infringement, then any patent licenses

+ granted to You under this License for that Work shall terminate

+ as of the date such litigation is filed.

+

+ 4. Redistribution. You may reproduce and distribute copies of the

+ Work or Derivative Works thereof in any medium, with or without

+ modifications, and in Source or Object form, provided that You

+ meet the following conditions:

+

+ (a) You must give any other recipients of the Work or

+ Derivative Works a copy of this License; and

+

+ (b) You must cause any modified files to carry prominent notices

+ stating that You changed the files; and

+

+ (c) You must retain, in the Source form of any Derivative Works

+ that You distribute, all copyright, patent, trademark, and

+ attribution notices from the Source form of the Work,

+ excluding those notices that do not pertain to any part of

+ the Derivative Works; and

+

+ (d) If the Work includes a "NOTICE" text file as part of its

+ distribution, then any Derivative Works that You distribute must

+ include a readable copy of the attribution notices contained

+ within such NOTICE file, excluding those notices that do not

+ pertain to any part of the Derivative Works, in at least one

+ of the following places: within a NOTICE text file distributed

+ as part of the Derivative Works; within the Source form or

+ documentation, if provided along with the Derivative Works; or,

+ within a display generated by the Derivative Works, if and

+ wherever such third-party notices normally appear. The contents

+ of the NOTICE file are for informational purposes only and

+ do not modify the License. You may add Your own attribution

+ notices within Derivative Works that You distribute, alongside

+ or as an addendum to the NOTICE text from the Work, provided

+ that such additional attribution notices cannot be construed

+ as modifying the License.

+

+ You may add Your own copyright statement to Your modifications and

+ may provide additional or different license terms and conditions

+ for use, reproduction, or distribution of Your modifications, or

+ for any such Derivative Works as a whole, provided Your use,

+ reproduction, and distribution of the Work otherwise complies with

+ the conditions stated in this License.

+

+ 5. Submission of Contributions. Unless You explicitly state otherwise,

+ any Contribution intentionally submitted for inclusion in the Work

+ by You to the Licensor shall be under the terms and conditions of

+ this License, without any additional terms or conditions.

+ Notwithstanding the above, nothing herein shall supersede or modify

+ the terms of any separate license agreement you may have executed

+ with Licensor regarding such Contributions.

+

+ 6. Trademarks. This License does not grant permission to use the trade

+ names, trademarks, service marks, or product names of the Licensor,

+ except as required for reasonable and customary use in describing the

+ origin of the Work and reproducing the content of the NOTICE file.

+

+ 7. Disclaimer of Warranty. Unless required by applicable law or

+ agreed to in writing, Licensor provides the Work (and each

+ Contributor provides its Contributions) on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

+ implied, including, without limitation, any warranties or conditions

+ of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

+ PARTICULAR PURPOSE. You are solely responsible for determining the

+ appropriateness of using or redistributing the Work and assume any

+ risks associated with Your exercise of permissions under this License.

+

+ 8. Limitation of Liability. In no event and under no legal theory,

+ whether in tort (including negligence), contract, or otherwise,

+ unless required by applicable law (such as deliberate and grossly

+ negligent acts) or agreed to in writing, shall any Contributor be

+ liable to You for damages, including any direct, indirect, special,

+ incidental, or consequential damages of any character arising as a

+ result of this License or out of the use or inability to use the

+ Work (including but not limited to damages for loss of goodwill,

+ work stoppage, computer failure or malfunction, or any and all

+ other commercial damages or losses), even if such Contributor

+ has been advised of the possibility of such damages.

+

+ 9. Accepting Warranty or Additional Liability. While redistributing

+ the Work or Derivative Works thereof, You may choose to offer,

+ and charge a fee for, acceptance of support, warranty, indemnity,

+ or other liability obligations and/or rights consistent with this

+ License. However, in accepting such obligations, You may act only

+ on Your own behalf and on Your sole responsibility, not on behalf

+ of any other Contributor, and only if You agree to indemnify,

+ defend, and hold each Contributor harmless for any liability

+ incurred by, or claims asserted against, such Contributor by reason

+ of your accepting any such warranty or additional liability.

+

+ END OF TERMS AND CONDITIONS

+

+ APPENDIX: How to apply the Apache License to your work.

+

+ To apply the Apache License to your work, attach the following

+ boilerplate notice, with the fields enclosed by brackets "[]"

+ replaced with your own identifying information. (Don't include

+ the brackets!) The text should be enclosed in the appropriate

+ comment syntax for the file format. We also recommend that a

+ file or class name and description of purpose be included on the

+ same "printed page" as the copyright notice for easier

+ identification within third-party archives.

+

+ Copyright [yyyy] [name of copyright owner]

+

+ Licensed under the Apache License, Version 2.0 (the "License");

+ you may not use this file except in compliance with the License.

+ You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+ Unless required by applicable law or agreed to in writing, software

+ distributed under the License is distributed on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ See the License for the specific language governing permissions and

+ limitations under the License.

diff --git a/README.md b/README.md

new file mode 100644

index 0000000000000000000000000000000000000000..0f85bc53575a573e84145a14614bef50ac5ac8af

--- /dev/null

+++ b/README.md

@@ -0,0 +1,124 @@

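+# Read the entire document first!

+## Background

+1. Calling a trained deep-learning model from Python is very simple and often requires no code at all: most deep-learning frameworks are written in Python and ship with their own inference scripts and methods.

+2. However, not every developer knows Python, not every project is written in Python, and not every role involves deep learning.

+3. Most server-side projects are still written in Java, and many developers come from a Java background, yet a search across the web turned up no example of calling an AI model from Java.

+4. So this project provides a way to call AI models from Java (probably the only one of its kind online). The approach is generic: swapping in a different model gives a different result.

+5. It is extremely lightweight: two dependencies and a single Java main file are enough to run it.

+6. **Not sure what the project is for or where to use it? No problem: download it, run it, and it will be clear as soon as you see the results!**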

+

+---

+

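+## Environment

+- **The master branch uses a procedural style; the dp branch uses an object-oriented style. Run the master branch first.**

+- Only a Java environment is required; nothing else needs to be installed. JDK 11 or later is required (1.8 will not work). The code directory path must not contain Chinese characters!

+- Remember to switch Maven to a mirror inside China; otherwise downloading the dependencies can take 2 days.

+- An 11th-generation i7 CPU or newer is recommended. A GPU is optional for your own testing but required for real projects, preferably a 3060 or better (it does not matter for image detection, but real-time video-stream detection needs a GPU).

+- This project is a set of basic utility methods. It contains no business logic; in a real project, video streams need multithreading, queues, and so on, which you must handle yourself.

+- It does not include video-stream processing, storage, or forwarding. For those, search for keywords such as: streaming media server, rtmp. **The approach: one thread pulls the stream, one or more threads run detection, and one thread pushes the stream. A shared variable holds the latest frame to prevent a backlog: the pulling thread only updates the latest frame, the detection threads only process the latest frame, and each processed frame goes into a queue for the pushing thread (mind the frame rate).**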
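The threading approach described in the last bullet could be sketched roughly as follows. This is a minimal illustration under stated assumptions, not code from the project: the class `LatestFramePipeline` and all of its method names are hypothetical, and plain strings stand in for video frames so that the sketch runs without OpenCV.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.atomic.AtomicReference;

/** Hypothetical sketch: a single-slot "latest frame" holder plus a bounded output queue. */
final class LatestFramePipeline<F, R> {
    // Shared variable: the pulling thread overwrites it, so stale frames never pile up.
    private final AtomicReference<F> latest = new AtomicReference<>();
    // Bounded queue of detection results waiting for the pushing thread.
    private final BlockingQueue<R> outbox;

    LatestFramePipeline(int outboxCapacity) {
        this.outbox = new ArrayBlockingQueue<>(outboxCapacity);
    }

    /** Called by the pulling thread for every decoded frame. */
    void publish(F frame) {
        latest.set(frame);
    }

    /** Called by a detection thread: takes the newest frame, or null if none is pending. */
    F takeLatest() {
        return latest.getAndSet(null);
    }

    /** Called by a detection thread; drops the oldest result when the pusher falls behind. */
    void emit(R result) {
        while (!outbox.offer(result)) {
            outbox.poll();
        }
    }

    /** Called by the pushing thread; returns null when nothing is ready. */
    R nextToPush() {
        return outbox.poll();
    }

    public static void main(String[] args) {
        LatestFramePipeline<String, String> pipeline = new LatestFramePipeline<>(2);
        pipeline.publish("frame-1");
        pipeline.publish("frame-2");
        pipeline.publish("frame-3");            // frames 1 and 2 are overwritten, not queued
        String frame = pipeline.takeLatest();   // only the newest frame is seen
        pipeline.emit("detected:" + frame);
        System.out.println(pipeline.nextToPush());
    }
}
```

In a real pipeline each role would run in its own thread, and the pushing thread would pace itself to the target frame rate; the single-threaded `main` here only demonstrates the drop-stale-frames behavior.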

+

+---

+

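+## Next, download and run it to see the results before studying the code, and don't forget to star the repo

+## Run it first even if you don't understand it

+1. After downloading, the main files can be run directly: `CameraDetection.java` (try this first), `ObjectDetection_1_25200_n.java`, `ObjectDetection_n_7.java`, and `ObjectDetection_1_n_8400.java` all **run directly without errors**

+2. `CameraDetection.java` performs real-time video-stream detection and **can also be run directly**. The main files are fully independent of one another. With a GPU the frame rate is higher, but GPU use must be enabled. The images directory contains video files; point the code at one of them to preview video detection. Using the real-time video demo as a reference, the other files can also be adapted for real-time detection

+3. There are multiple main files in order to support models with different network structures: even among `onnx` models, the output tensors differ. Three structures are currently supported, explained below

+4. The code can be wrapped as an `http` `controller` `api` endpoint, or combined with cameras to analyze video streams in real time, with preview and alerting after detection

+5. `yolov7`, `yolov5`, and `yolov8` are supported; `paddlepaddle` can also be supported with small changes to the post-processing. **The onnx models bundled with the code are for demonstration only and have very low accuracy; real applications require training your own model**

+6. A freshly trained model is a base model and can be used for testing. A production model should first go through model compression, quantization, pruning, and distillation (this is not the Java developer's job, of course). Doing so can raise the video frame rate to roughly 60-120 FPS. See: [Baidu model compression tool](https://www.paddlepaddle.org.cn/tutorials/projectdetail/3949129), [basic concepts](https://zhuanlan.zhihu.com/p/138059904), [reference article](https://zhuanlan.zhihu.com/p/430910227)

+7. Use a small model for video-stream detection and a large model for image detection behind an API

+8. Replace the onnx model file in the `model` directory to detect any kind of object (smoke and fire, falls, smoking, hard hats, face masks, people, fighting, counting, climbing, garbage, switches, status, classification, and so on), as long as you have a model

+9. **What if the model is not in onnx format? Don't worry: models from the mainstream AI frameworks can all be converted to onnx. How? Search for it yourself!**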

+---

+

+## ObjectDetection_1_25200_n.java

+ - `yolov5`

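+ - **85**: each row has `85` values: `5` for center_x, center_y, width, height, score, plus `80` per-class scores (not necessarily 80; it depends on the number of labels in the model)

+ - **25200**: the total number of predicted boxes across three scales `( 80∗80∗3 + 40∗40∗3 + 20∗20∗3 )`, three boxes per grid cell; `non-maximum suppression (NMS)` is required afterwards

+ - **1**: no batched inference, i.e. one image per inference call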
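The `[1,25200,85]` layout described above can be post-processed along the following lines. This is a sketch under assumptions, not the project's actual code: the class `Yolov5PostProcess`, its method names, and the thresholds are hypothetical; the strides 8/16/32 and the scoring rule (objectness multiplied by the best class score) follow the common yolov5 convention rather than anything stated in this README.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

/** Hypothetical helper: post-processing for a [1,25200,85]-style output. */
final class Yolov5PostProcess {

    /** Boxes predicted for a square input, assuming strides 8/16/32 and 3 boxes per cell. */
    static int boxCount(int inputSize) {
        int total = 0;
        for (int stride : new int[]{8, 16, 32}) {
            int cells = inputSize / stride;
            total += cells * cells * 3;
        }
        return total;
    }

    /** Converts raw rows to {x0, y0, x1, y1, score, classId}, dropping low-score rows. */
    static List<float[]> decode(float[][] rows, float minScore) {
        List<float[]> boxes = new ArrayList<>();
        for (float[] r : rows) {
            // r = center_x, center_y, width, height, objectness, then one score per class
            int best = 5;
            for (int i = 6; i < r.length; i++) {
                if (r[i] > r[best]) best = i;
            }
            float score = r[4] * r[best];
            if (score < minScore) continue;
            boxes.add(new float[]{
                r[0] - r[2] / 2, r[1] - r[3] / 2,
                r[0] + r[2] / 2, r[1] + r[3] / 2,
                score, best - 5});
        }
        return boxes;
    }

    /** Intersection over union of two corner-form boxes. */
    static float iou(float[] a, float[] b) {
        float w = Math.max(0, Math.min(a[2], b[2]) - Math.max(a[0], b[0]));
        float h = Math.max(0, Math.min(a[3], b[3]) - Math.max(a[1], b[1]));
        float inter = w * h;
        float union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter;
        return union <= 0 ? 0 : inter / union;
    }

    /** Greedy per-class NMS: keep the highest-scoring box, drop same-class overlaps. */
    static List<float[]> nms(List<float[]> boxes, float iouThreshold) {
        List<float[]> sorted = new ArrayList<>(boxes);
        sorted.sort(Comparator.comparingDouble((float[] b) -> b[4]).reversed());
        List<float[]> kept = new ArrayList<>();
        for (float[] candidate : sorted) {
            boolean suppressed = false;
            for (float[] k : kept) {
                if (k[5] == candidate[5] && iou(k, candidate) > iouThreshold) {
                    suppressed = true;
                    break;
                }
            }
            if (!suppressed) kept.add(candidate);
        }
        return kept;
    }

    public static void main(String[] args) {
        System.out.println(boxCount(640)); // 80*80*3 + 40*40*3 + 20*20*3
        float[][] rows = {
            {100, 100, 40, 40, 0.9f, 0.1f, 0.8f},  // two classes only, for brevity
            {102, 100, 40, 40, 0.8f, 0.1f, 0.7f},  // overlaps the first box
            {300, 300, 20, 20, 0.9f, 0.9f, 0.1f}
        };
        for (float[] box : nms(decode(rows, 0.25f), 0.45f)) {
            System.out.println(Arrays.toString(box));
        }
    }
}
```

The sample rows use two class scores instead of 80 to stay short; the same code handles any number of classes because it reads the class count from the row length.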

+

+

+---

+

+## ObjectDetection_n_7.java

+ - `yolov7`

+ - **Concatoutput_dim_0**: a variable dimension giving the number of objects predicted in the current image

+ - **7**: the seven values for each object: `batch_id,x0,y0,x1,y1,cls_id,score`
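Reading the `[n,7]` rows described above takes very little code, since each row is already one finished detection in corner form. The sketch below is illustrative only: `Yolov7Rows` and `Detection` are hypothetical names, and the project's own `ODResult` class (not shown in this part of the diff) may be structured differently.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: reads rows laid out as batch_id,x0,y0,x1,y1,cls_id,score. */
final class Yolov7Rows {

    /** One detection taken from a single 7-value row. */
    static final class Detection {
        final int batchId;
        final float x0, y0, x1, y1;
        final int clsId;
        final float score;

        Detection(float[] row) {
            if (row.length != 7) {
                throw new IllegalArgumentException("expected 7 values, got " + row.length);
            }
            batchId = (int) row[0];
            x0 = row[1];
            y0 = row[2];
            x1 = row[3];
            y1 = row[4];
            clsId = (int) row[5];
            score = row[6];
        }
    }

    /** Keeps only rows whose score reaches minScore. */
    static List<Detection> parse(float[][] output, float minScore) {
        List<Detection> detections = new ArrayList<>();
        for (float[] row : output) {
            Detection d = new Detection(row);
            if (d.score >= minScore) detections.add(d);
        }
        return detections;
    }

    public static void main(String[] args) {
        float[][] output = {
            {0, 10, 20, 110, 220, 2, 0.9f},
            {0, 1, 2, 3, 4, 0, 0.1f}
        };
        for (Detection d : parse(output, 0.5f)) {
            System.out.println("class " + d.clsId + " score " + d.score);
        }
    }
}
```

The coordinates are still in the model's input space (for example 640×640), so they need to be mapped back to the original image before drawing.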

+

+

+---

+

+## ObjectDetection_1_n_8400.java

+ - `yolov8`
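The README gives no layout details for this file. As an assumption based on the file name and on the usual yolov8 detection export, the output is `[1, 4 + classes, 8400]`: each of the 8400 candidates is a column rather than a row, and there is no separate objectness score. Under that assumption, the matrix is transposed first so that each candidate becomes one row. `Yolov8Transpose` and its methods are hypothetical names used for illustration.

```java
/** Hypothetical helper: turns a [4 + classes][candidates] matrix into one row per candidate. */
final class Yolov8Transpose {

    /** Plain matrix transpose: result[c][r] = m[r][c]. */
    static float[][] transpose(float[][] m) {
        int rows = m.length;
        int cols = m[0].length;
        float[][] t = new float[cols][rows];
        for (int r = 0; r < rows; r++) {
            for (int c = 0; c < cols; c++) {
                t[c][r] = m[r][c];
            }
        }
        return t;
    }

    /** For one candidate row (cx, cy, w, h, class scores...), returns {classId, score}. */
    static float[] bestClass(float[] candidate) {
        int best = 4;
        for (int i = 5; i < candidate.length; i++) {
            if (candidate[i] > candidate[best]) best = i;
        }
        return new float[]{best - 4, candidate[best]};
    }

    public static void main(String[] args) {
        // 4 box values + 2 classes, 3 candidates (instead of 8400) to keep the demo small
        float[][] raw = {
            {10, 20, 30},        // cx
            {10, 20, 30},        // cy
            {4, 4, 4},           // w
            {4, 4, 4},           // h
            {0.1f, 0.8f, 0.2f},  // class 0 scores
            {0.7f, 0.1f, 0.3f}   // class 1 scores
        };
        for (float[] candidate : transpose(raw)) {
            float[] best = bestClass(candidate);
            System.out.println("class " + (int) best[0] + " score " + best[1]);
        }
    }
}
```

After the transpose, score filtering and NMS proceed as for the yolov5 layout, except that the class score is used directly.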

+

+

+---

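+## Models whose output is three arrays are not directly supported for now (they are uncommon)

+- However, models with this structure can be exported with a `[1,25200,85]` or `[n,7]` output structure and then called with the existing code.

+- **yolov5**: add the parameters `inplace=True,simplify=True` when exporting to onnx (ObjectDetection_1_25200_n.java)

+- **yolov7**: add the parameters `grid=True,simplify=True` when exporting to onnx (ObjectDetection_1_25200_n.java), or `grid=True,simplify=True,end2end=True` (ObjectDetection_n_7.java)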

+

+

+

+---

+

+## ONNX

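+Open Neural Network Exchange (ONNX) is a standard format for representing deep-learning models that lets models move between frameworks.

+It is an open file format designed for machine learning and used to store trained models. It lets different AI frameworks (such as Pytorch, TensorFlow, PaddlePaddle, MXNet) store model data in the same format and interoperate. The ONNX specification and code are developed mainly by companies such as Microsoft, Amazon, Facebook, and IBM, and are hosted as open source on Github.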

+

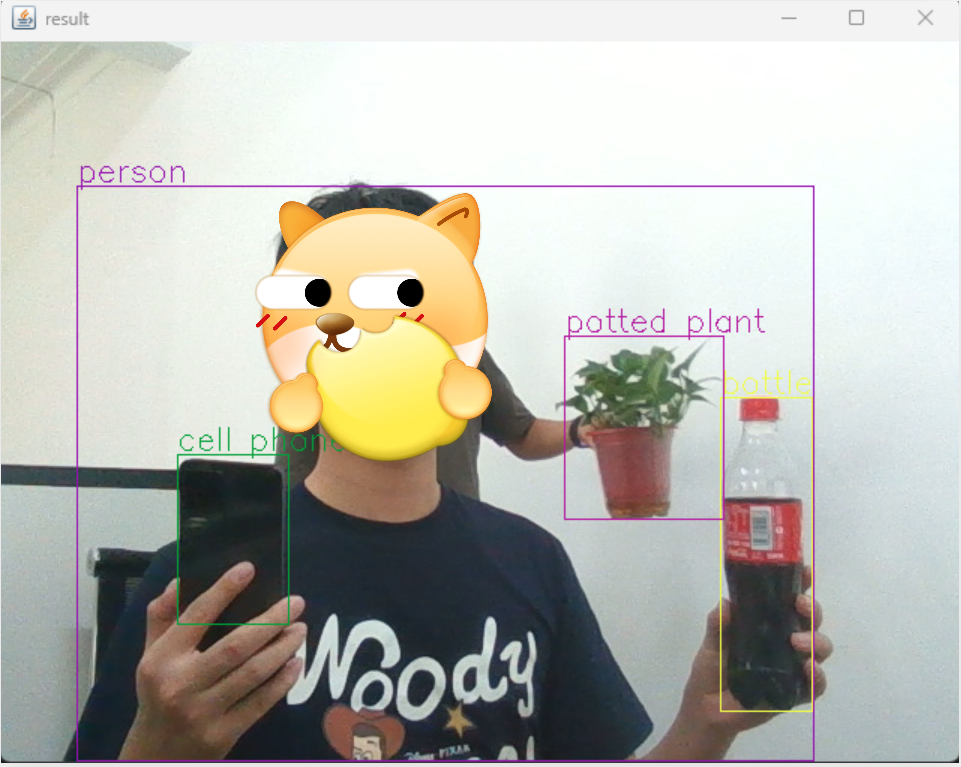

+## Image results

+

+

+

+

+## Video results (must see)

+- https://live.csdn.net/v/308058

+

+- https://live.csdn.net/v/296613

+

+- https://blog.csdn.net/changzengli/article/details/129661570

+

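+## Scan the QR code and add the note "onnx" to join the group and learn how to train models

+- Requests without the note will not be approved

+- Post a screenshot of the code running successfully within 1 hour of joining, or you will be removed from the group. Really.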

+

+

+## Useful links

+- https://blog.csdn.net/changzengli/article/details/129182528

+- https://blog.csdn.net/xqtt29/article/details/110918397

+- https://blog.csdn.net/changzengli/article/details/127904594

+- The javacv and ffmpeg wrappers from javacpp can also be used

+

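+## GPU prerequisites

+- A GPU is not required for image processing, but it is recommended for video

+- Update the graphics driver; it must be the latest version

+- Install matching versions of cuda and cudnn; the versions must match the GPU model in your own machine and are independent of this project

+- Verify that the installation succeeded, and be sure to actually test it: nvcc -V

+- Do not use a version newer than cuda11.8

+- **Setting up the environment takes patience; beginners may need around 2 weeks to get it installed, so don't rush**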

+

+## Solution for Chinese text

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+### Rotated object detection (locating orientation and angle: placing batteries, picking up screws, and so on)

+

diff --git a/demo/1.png b/demo/1.png

new file mode 100644

index 0000000000000000000000000000000000000000..5c01f6f26dfaab74de757ae9c8d74b1625b0acc4

Binary files /dev/null and b/demo/1.png differ

diff --git a/demo/2.gif b/demo/2.gif

new file mode 100644

index 0000000000000000000000000000000000000000..5384bba4eb09090aabf7eb05c7dd7c90fa8e41b7

Binary files /dev/null and b/demo/2.gif differ

diff --git a/demo/2.png b/demo/2.png

new file mode 100644

index 0000000000000000000000000000000000000000..0fd463a9e489cc5d32b104e17145e4dd5280e511

Binary files /dev/null and b/demo/2.png differ

diff --git a/demo/3.png b/demo/3.png

new file mode 100644

index 0000000000000000000000000000000000000000..36ea2bed0daa51ce294caf8cac44c5260896382e

Binary files /dev/null and b/demo/3.png differ

diff --git a/demo/4.png b/demo/4.png

new file mode 100644

index 0000000000000000000000000000000000000000..bfebd3c9293033f85c89d5f4a8083abb9a5d722e

Binary files /dev/null and b/demo/4.png differ

diff --git a/demo/5.gif b/demo/5.gif

new file mode 100644

index 0000000000000000000000000000000000000000..fa38199d1e3b92f43f281530b630ff5ea931f091

Binary files /dev/null and b/demo/5.gif differ

diff --git a/demo/6.png b/demo/6.png

new file mode 100644

index 0000000000000000000000000000000000000000..5fbc0b1a60ccabe6f5320b3934c4b983d91c8674

Binary files /dev/null and b/demo/6.png differ

diff --git a/demo/7.png b/demo/7.png

new file mode 100644

index 0000000000000000000000000000000000000000..bc47f85417b3f18d365d0108940c6e6af0925d9f

Binary files /dev/null and b/demo/7.png differ

diff --git a/demo/8.png b/demo/8.png

new file mode 100644

index 0000000000000000000000000000000000000000..a5aed13ad11b6515bc6e7d4a002f8683fbedd6c7

Binary files /dev/null and b/demo/8.png differ

diff --git a/demo/image-20231129214334426.png b/demo/image-20231129214334426.png

new file mode 100644

index 0000000000000000000000000000000000000000..ef0ed9c25ebbd42215e46995659806d22c931506

Binary files /dev/null and b/demo/image-20231129214334426.png differ

diff --git a/demo/image-20231221191740601.png b/demo/image-20231221191740601.png

new file mode 100644

index 0000000000000000000000000000000000000000..4c24ffe630619f678aa3ab28e889bde89f491aec

Binary files /dev/null and b/demo/image-20231221191740601.png differ

diff --git "a/demo/\345\276\256\344\277\241\345\233\276\347\211\207_20240303133256.jpg" "b/demo/\345\276\256\344\277\241\345\233\276\347\211\207_20240303133256.jpg"

new file mode 100644

index 0000000000000000000000000000000000000000..9a324de6505b2a8736713b35c04878d7272054e0

Binary files /dev/null and "b/demo/\345\276\256\344\277\241\345\233\276\347\211\207_20240303133256.jpg" differ

diff --git "a/demo/\345\276\256\344\277\241\346\210\252\345\233\276_20240302144451.png" "b/demo/\345\276\256\344\277\241\346\210\252\345\233\276_20240302144451.png"

new file mode 100644

index 0000000000000000000000000000000000000000..a7119ac730bb8b1860451010261f08ac454edef0

Binary files /dev/null and "b/demo/\345\276\256\344\277\241\346\210\252\345\233\276_20240302144451.png" differ

diff --git "a/demo/\345\276\256\344\277\241\346\210\252\345\233\276_20240302144535.png" "b/demo/\345\276\256\344\277\241\346\210\252\345\233\276_20240302144535.png"

new file mode 100644

index 0000000000000000000000000000000000000000..8681ca2e4b3563130a797dce75787f586c956356

Binary files /dev/null and "b/demo/\345\276\256\344\277\241\346\210\252\345\233\276_20240302144535.png" differ

diff --git "a/demo/\345\276\256\344\277\241\346\210\252\345\233\276_20240302144556.png" "b/demo/\345\276\256\344\277\241\346\210\252\345\233\276_20240302144556.png"

new file mode 100644

index 0000000000000000000000000000000000000000..2de18d5766975d7d854a73f5a7b114911b018bef

Binary files /dev/null and "b/demo/\345\276\256\344\277\241\346\210\252\345\233\276_20240302144556.png" differ

diff --git "a/demo/\345\276\256\344\277\241\346\210\252\345\233\276_20240302144728.png" "b/demo/\345\276\256\344\277\241\346\210\252\345\233\276_20240302144728.png"

new file mode 100644

index 0000000000000000000000000000000000000000..001aea51ea19e2c9113098f473ae6d093b26e68b

Binary files /dev/null and "b/demo/\345\276\256\344\277\241\346\210\252\345\233\276_20240302144728.png" differ

diff --git "a/demo/\345\276\256\344\277\241\346\210\252\345\233\276_20240302145028.png" "b/demo/\345\276\256\344\277\241\346\210\252\345\233\276_20240302145028.png"

new file mode 100644

index 0000000000000000000000000000000000000000..0267225c09b618f8d757143d154f8c72538f2719

Binary files /dev/null and "b/demo/\345\276\256\344\277\241\346\210\252\345\233\276_20240302145028.png" differ

diff --git a/images/20230731212230.png b/images/10230731212230.png

similarity index 100%

rename from images/20230731212230.png

rename to images/10230731212230.png

diff --git a/images/20230731211539.png b/images/20230731211539.png

deleted file mode 100644

index 12e8bccb7880996b9588be2ad1b28fc9ceeca9e8..0000000000000000000000000000000000000000

Binary files a/images/20230731211539.png and /dev/null differ

diff --git a/images/20230810214652.png b/images/20230810214652.png

new file mode 100644

index 0000000000000000000000000000000000000000..262513364fc701389f37fc18e3065f7eaa08aefe

Binary files /dev/null and b/images/20230810214652.png differ

diff --git a/pom.xml b/pom.xml

index 8519784fc595a459ab1c90883ac8ce78d209032d..3897b5554ea94e30f3b970c714ffd1bfd3a1e7f1 100644

--- a/pom.xml

+++ b/pom.xml

@@ -2,7 +2,7 @@

- 4.0.0z

+ 4.0.0

cn.changakng

yolo-onnx-java

@@ -17,22 +17,50 @@

com.microsoft.onnxruntime

onnxruntime

- 1.15.1

+ 1.16.1

-

-

- org.opencv

- opencv

- 4.6.0

- system

- ${project.basedir}/src/main/resources/lib/opencv-460.jar

-

+

+

+

+

+

+ org.openpnp

+ opencv

+ 4.7.0-0

+

+

+

+

+ org.springframework.boot

+ spring-boot-maven-plugin

+ 2.7.18-SNAPSHOT

+

+

+

+

\ No newline at end of file

diff --git a/src/main/java/cn/ck/CameraDetection.java b/src/main/java/cn/ck/CameraDetection.java

new file mode 100644

index 0000000000000000000000000000000000000000..f4218069632e3599bd6bc8f8b6100ec0c7b9f6a7

--- /dev/null

+++ b/src/main/java/cn/ck/CameraDetection.java

@@ -0,0 +1,198 @@

+package cn.ck;

+

+import ai.onnxruntime.OnnxTensor;

+import ai.onnxruntime.OrtEnvironment;

+import ai.onnxruntime.OrtException;

+import ai.onnxruntime.OrtSession;

+import cn.ck.config.ODConfig;

+import cn.ck.domain.ODResult;

+import cn.ck.utils.ImageUtil;

+import cn.ck.utils.Letterbox;

+import org.opencv.core.CvType;

+import org.opencv.core.Mat;

+import org.opencv.core.Point;

+import org.opencv.core.Scalar;

+import org.opencv.highgui.HighGui;

+import org.opencv.imgproc.Imgproc;

+import org.opencv.videoio.VideoCapture;

+import org.opencv.videoio.Videoio;

+

+import java.nio.FloatBuffer;

+import java.util.HashMap;

+

+/**

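+ * Camera detection. This is the yolov7 video detection example; for v5 and v8, follow the approach below and copy over the code from the other files

+ *

+ * A video frame rate of 15 is best and 20 is acceptable; 30 is not recommended. A resolution of 640 is best and 720 is acceptable; 1080 is not recommended. Keep the bitrate at or below 2048; 1024 is best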

+ */

+

+public class CameraDetection {

+

+

+

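+ // A video frame rate of 15 is best and 20 is acceptable; 30 is not recommended. A resolution of 640 is best and 720 is acceptable; 1080 is not recommended. Keep the bitrate at or below 2048; 1024 is best. These can be set for the main stream and the sub stream on the camera's built-in management page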

+ public static void main(String[] args) throws OrtException {

+

+ //System.load(ClassLoader.getSystemResource("lib/opencv_java470-无用.dll").getPath());

+ nu.pattern.OpenCV.loadLocally();

+

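+ // Linux and macOS need this line commented out. If you only open the camera for preview, the line is unnecessary and can be deleted; it is needed for rtmp, rtsp, and similar streams. A pom dependency can replace it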

+ String OS = System.getProperty("os.name").toLowerCase();

+ if (OS.contains("win")) {

+ System.load(ClassLoader.getSystemResource("lib/opencv_videoio_ffmpeg470_64.dll").getPath());

+ }

+ String model_path = "src\\main\\resources\\model\\yolov7-tiny.onnx";

+

+ String[] labels = {

+ "person", "bicycle", "car", "motorcycle", "airplane", "bus", "train",

+ "truck", "boat", "traffic light", "fire hydrant", "stop sign", "parking meter",

+ "bench", "bird", "cat", "dog", "horse", "sheep", "cow", "elephant", "bear",

+ "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase",

+ "frisbee", "skis", "snowboard", "sports ball", "kite", "baseball bat",

+ "baseball glove", "skateboard", "surfboard", "tennis racket", "bottle",

+ "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple",

+ "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut",

+ "cake", "chair", "couch", "potted plant", "bed", "dining table", "toilet",

+ "tv", "laptop", "mouse", "remote", "keyboard", "cell phone", "microwave",

+ "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors",

+ "teddy bear", "hair drier", "toothbrush"};

+

+ // Load the ONNX model

+ OrtEnvironment environment = OrtEnvironment.getEnvironment();

+ OrtSession.SessionOptions sessionOptions = new OrtSession.SessionOptions();

+

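+ // To use the GPU, cuda must be installed on this machine and pom.xml must be modified; the program also runs without it

+ // sessionOptions.addCUDA(0);

+ // In real projects, video detection must enable the GPU and must prevent the queue from backing up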

+

+ OrtSession session = environment.createSession(model_path, sessionOptions);

+ // Print basic information

+ session.getInputInfo().keySet().forEach(x -> {

+ try {

+ System.out.println("input name = " + x);

+ System.out.println(session.getInputInfo().get(x).getInfo().toString());

+ } catch (OrtException e) {

+ throw new RuntimeException(e);

+ }

+ });

+

+ // Load labels and colors

+ ODConfig odConfig = new ODConfig();

+ VideoCapture video = new VideoCapture();

+

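+ // This can also be an rtmp or rtsp video stream: video.open("rtmp://192.168.1.100/live/test"), e.g. from Hikvision, Dahua, Lechange, Uniview, video recorders, and so on

+ // video.open("rtsp://192.168.1.100/live/test")

+ // A static video file also works: video.open("video/car3.mp4"); flv and other formats too

+ // h265 video encoding is not supported; if playback fails or the program hangs, change the video encoding

+ video.open(0); // open camera 0 on this computer

+ //video.open("images/car2.mp4"); // fairly laggy without the GPU enabled

+

+ // The annotated video can then be forwarded over rtmp to another streaming media server for remote preview; this requires using ffmpeg to assemble the consecutive frames into flv or a similar format.

+ if (!video.isOpened()) {

+ System.err.println("Failed to open the video stream: no camera detected. First check with VLC that the link plays! Falling back to the default test video for preview.");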

+ video.open("video/car3.mp4");

+ }

+

+ // Define the box line thickness, font size, font face, and font color up front (scaling them in proportion to the frame size works better)

+ int minDwDh = Math.min((int)video.get(Videoio.CAP_PROP_FRAME_WIDTH), (int)video.get(Videoio.CAP_PROP_FRAME_HEIGHT));

+ int thickness = minDwDh / ODConfig.lineThicknessRatio;

+ double fontSize = minDwDh / ODConfig.fontSizeRatio;

+ int fontFace = Imgproc.FONT_HERSHEY_SIMPLEX;

+

+ Mat img = new Mat();

+

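+ // Frame skipping: run detection only on every Nth frame. 3 is a typical value (4 is used here); the picture changes little within milliseconds, so detecting more often adds nothing and wastes performance

+ int detect_skip = 4;

+

+ // Frame-skip counter

+ int detect_skip_index = 1;

+

+ // Inference result of the most recently detected frame

+ float[][] outputData = null;

+

+ // The current latest frame. The previous frame could also be cached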

+ Mat image;

+

+ Letterbox letterbox = new Letterbox();

+ OnnxTensor tensor;

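+ // Multithreading and a GPU can raise the frame rate: one thread pulls the stream, one thread runs inference, and they exchange data through a variable or a queue. This example uses a single thread only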

+ while (video.read(img)) {

+ if ((detect_skip_index % detect_skip == 0) || outputData == null){

+ image = img.clone();

+ image = letterbox.letterbox(image);

+ Imgproc.cvtColor(image, image, Imgproc.COLOR_BGR2RGB);

+

+ image.convertTo(image, CvType.CV_32FC1, 1. / 255);

+ float[] whc = new float[3 * 640 * 640];

+ image.get(0, 0, whc);

+ float[] chw = ImageUtil.whc2cwh(whc);

+

+ detect_skip_index = 1;

+

+ FloatBuffer inputBuffer = FloatBuffer.wrap(chw);

+ tensor = OnnxTensor.createTensor(environment, inputBuffer, new long[]{1, 3, 640, 640});

+

+ HashMap<String, OnnxTensor> stringOnnxTensorHashMap = new HashMap<>();

+ stringOnnxTensorHashMap.put(session.getInputInfo().keySet().iterator().next(), tensor);

+

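+ // Run inference

+ // Model inference is essentially multi-dimensional matrix math, which GPUs are built for, so GPU utilization stays low. It also runs on the CPU, where 100% utilization is normal and nothing to worry about.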

+ OrtSession.Result output = session.run(stringOnnxTensorHashMap);

+

+ // Get the result and cache it

+ try{

+ outputData = (float[][]) output.get(0).getValue();

+ }catch (OrtException e){ System.err.println("Failed to read the inference output: " + e.getMessage()); }

+ }else{

+ detect_skip_index = detect_skip_index + 1;

+ }

+

+ for(float[] x : outputData){

+

+ ODResult odResult = new ODResult(x);

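+ // Put business logic here: comment out the code below and add your own, e.g. alerting logic based on the detected object type

+ // In real projects, avoid drawing boxes and text on the video frames; only raise alerts, or draw boxes on the alert image. Drawing boxes and text has a large impact on the video frame rate

+ // Draw the box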

+ Point topLeft = new Point((odResult.getX0() - letterbox.getDw()) / letterbox.getRatio(), (odResult.getY0() - letterbox.getDh()) / letterbox.getRatio());

+ Point bottomRight = new Point((odResult.getX1() - letterbox.getDw()) / letterbox.getRatio(), (odResult.getY1() - letterbox.getDh()) / letterbox.getRatio());

+ Scalar color = new Scalar(odConfig.getOtherColor(odResult.getClsId()));

+

+ Imgproc.rectangle(img, topLeft, bottomRight, color, thickness);

+ // Write the label above the box

+ String boxName = labels[odResult.getClsId()];

+ Point boxNameLoc = new Point((odResult.getX0() - letterbox.getDw()) / letterbox.getRatio(), (odResult.getY0() - letterbox.getDh()) / letterbox.getRatio() - 3);

+

+ // Other text or data, such as IoT device readings, can also be overlaid on the frame here

+ Imgproc.putText(img, boxName, boxNameLoc, fontFace, 0.7, color, thickness);

+ // System.out.println(odResult+" "+ boxName);

+

+ }

+

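+ // Save the alert image to the same directory

+ // Imgcodecs.imwrite(ODConfig.savePicPath, img);

+ // Server deployment: servers have no desktop, so no preview window can be shown; comment out the code below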

+ HighGui.imshow("result", img);

+

+ // Press any key (several times if needed) to close the preview window and end the program

+ if(HighGui.waitKey(1) != -1){

+ break;

+ }

+ }

+

+ HighGui.destroyAllWindows();

+ video.release();

+ System.exit(0);

+

+ }

+

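+ // Ideas for handling repeated alerts

+

+ // A change in the number of objects means a new object has appeared or one has disappeared; also check whether the object types changed. For example, no change in the count means no alert. Implemented locally

+

+ // For example, within a set time window, compare with the previous alert (type, count); if they are the same, do not alert. Implemented in the cloud

+

+ // Alibaba Cloud example: "Your server raised 1000 high-CPU-usage alerts in the past hour." Consecutive alerts of the same type are pushed only once.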

+

+}
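`CameraDetection.java` reads the pixels of the preprocessed frame into a flat array and passes it through `ImageUtil.whc2cwh` before building the `[1, 3, 640, 640]` tensor. That utility is not part of this excerpt; the sketch below shows what such an interleaved-to-planar conversion generally looks like. `HwcToChw` and `convert` are hypothetical names, and the real `ImageUtil` implementation may differ.

```java
import java.util.Arrays;

/** Hypothetical helper: reorders interleaved pixel data (RGBRGB...) into planar form (RR..GG..BB..). */
final class HwcToChw {

    /** hwc holds `channels` consecutive values per pixel; the result holds one full plane per channel. */
    static float[] convert(float[] hwc, int channels) {
        int pixels = hwc.length / channels;
        float[] chw = new float[hwc.length];
        for (int p = 0; p < pixels; p++) {
            for (int c = 0; c < channels; c++) {
                chw[c * pixels + p] = hwc[p * channels + c];
            }
        }
        return chw;
    }

    public static void main(String[] args) {
        // Two RGB pixels: (1, 2, 3) and (4, 5, 6)
        float[] interleaved = {1, 2, 3, 4, 5, 6};
        System.out.println(Arrays.toString(convert(interleaved, 3)));
    }
}
```

The planar order is what a tensor of shape `[1, 3, height, width]` expects: all values of channel 0 first, then channel 1, then channel 2.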

+
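The box-drawing code in `CameraDetection.java` maps model coordinates back to the original frame by subtracting the letterbox padding (`getDw()`, `getDh()`) and dividing by the scale ratio (`getRatio()`). A standalone sketch of that arithmetic follows, assuming a square model input and padding split evenly on both sides. `LetterboxMath` and its members are hypothetical names, and the project's `Letterbox` class may round or pad differently.

```java
/** Hypothetical helper: letterbox scale/padding and the inverse mapping used when drawing boxes. */
final class LetterboxMath {
    final double ratio; // scale applied to the source frame
    final double dw;    // horizontal padding on each side, in model pixels
    final double dh;    // vertical padding on each side, in model pixels

    LetterboxMath(int srcWidth, int srcHeight, int modelSize) {
        ratio = Math.min((double) modelSize / srcWidth, (double) modelSize / srcHeight);
        dw = (modelSize - srcWidth * ratio) / 2.0;
        dh = (modelSize - srcHeight * ratio) / 2.0;
    }

    /** Model-space x to source-frame x: the same (x - dw) / ratio as in the drawing code. */
    double toSourceX(double x) {
        return (x - dw) / ratio;
    }

    /** Model-space y to source-frame y. */
    double toSourceY(double y) {
        return (y - dh) / ratio;
    }

    public static void main(String[] args) {
        // A 1280x720 frame scaled into a 640x640 input: ratio 0.5, 140 px padding top and bottom
        LetterboxMath lb = new LetterboxMath(1280, 720, 640);
        System.out.println("ratio=" + lb.ratio + " dw=" + lb.dw + " dh=" + lb.dh);
        System.out.println("model (320, 500) -> source (" + lb.toSourceX(320) + ", " + lb.toSourceY(500) + ")");
    }
}
```

Forgetting this inverse mapping is a common cause of boxes that appear shifted or shrunken on frames that are not square.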

+
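The repeated-alert ideas noted in the comments at the end of `CameraDetection.java` (suppress an alert when the detected types and counts match the previous alert within a time window) could be sketched as follows. This is an illustration only: `AlertDeduplicator`, `shouldAlert`, and the window length are hypothetical and are not taken from the project, and proper de-duplication would use object tracking rather than counts.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical helper: suppresses alerts that repeat the previous one within a time window. */
final class AlertDeduplicator {
    private final long windowMillis;
    private Map<String, Integer> lastAlerted; // label -> count at the last alert, null before any alert
    private long lastAlertAt;

    AlertDeduplicator(long windowMillis) {
        this.windowMillis = windowMillis;
    }

    /** Returns true when an alert should be sent for these per-label counts. */
    boolean shouldAlert(Map<String, Integer> counts, long nowMillis) {
        if (counts.isEmpty()) {
            return false; // nothing detected, nothing to report
        }
        boolean sameAsLast = counts.equals(lastAlerted);
        boolean withinWindow = lastAlerted != null && nowMillis - lastAlertAt < windowMillis;
        if (sameAsLast && withinWindow) {
            return false; // same types and counts, too soon: suppress
        }
        lastAlerted = new HashMap<>(counts);
        lastAlertAt = nowMillis;
        return true;
    }

    public static void main(String[] args) {
        AlertDeduplicator dedup = new AlertDeduplicator(1000);
        Map<String, Integer> onePerson = Map.of("person", 1);
        System.out.println(dedup.shouldAlert(onePerson, 0));               // first sighting
        System.out.println(dedup.shouldAlert(onePerson, 500));             // unchanged, inside window
        System.out.println(dedup.shouldAlert(Map.of("person", 2), 600));   // count changed
    }
}
```

Passing the timestamp in as a parameter, rather than reading the clock inside the method, keeps the logic easy to test and lets the same class run against recorded video.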

diff --git a/src/main/java/cn/ck/CameraDetectionWarnDemo.java b/src/main/java/cn/ck/CameraDetectionWarnDemo.java

new file mode 100644

index 0000000000000000000000000000000000000000..1eea82796a592cc3852645034fdd21b9b40ba7fc

--- /dev/null

+++ b/src/main/java/cn/ck/CameraDetectionWarnDemo.java

@@ -0,0 +1,229 @@

+package cn.ck;

+

+import ai.onnxruntime.OnnxTensor;

+import ai.onnxruntime.OrtEnvironment;

+import ai.onnxruntime.OrtException;

+import ai.onnxruntime.OrtSession;

+import cn.ck.config.ODConfig;

+import cn.ck.domain.ODResult;

+import cn.ck.utils.ImageUtil;

+import cn.ck.utils.Letterbox;

+import org.opencv.core.CvType;

+import org.opencv.core.Mat;

+import org.opencv.core.Point;

+import org.opencv.core.Scalar;

+import org.opencv.highgui.HighGui;

+import org.opencv.imgproc.Imgproc;

+import org.opencv.videoio.VideoCapture;

+import org.opencv.videoio.Videoio;

+

+import java.nio.FloatBuffer;

+import java.util.HashMap;

+import java.util.Map;

+import java.util.concurrent.ConcurrentHashMap;

+

+/**

+ * Camera detection: alert decision example

+ */

+

+public class CameraDetectionWarnDemo {

+

+

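+ // Proper object de-duplication (deciding whether a detection is the same object, to avoid repeated alerts) should use object tracking; here counts are used as a simple heuristic, which is not the standard approach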

+ static Map last = new ConcurrentHashMap<>();

+ static Map current = new ConcurrentHashMap<>();

+

+ static Map count = new ConcurrentHashMap<>();

+

+ public static void main(String[] args) throws OrtException {

+

+ //System.load(ClassLoader.getSystemResource("lib/opencv_java470-无用.dll").getPath());

+ nu.pattern.OpenCV.loadLocally();

+

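+ // Linux and macOS need this line commented out. If you only open the camera for preview, the line is unnecessary and can be deleted; it is needed for rtmp, rtsp, and similar streams. A pom dependency can replace it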

+ String OS = System.getProperty("os.name").toLowerCase();

+ if (OS.contains("win")) {

+ System.load(ClassLoader.getSystemResource("lib/opencv_videoio_ffmpeg470_64.dll").getPath());

+ }

+ String model_path = "src\\main\\resources\\model\\yolov7-tiny.onnx";

+

+ String[] labels = {

+ "person", "bicycle", "car", "motorcycle", "airplane", "bus", "train",

+ "truck", "boat", "traffic light", "fire hydrant", "stop sign", "parking meter",

+ "bench", "bird", "cat", "dog", "horse", "sheep", "cow", "elephant", "bear",

+ "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase",

+ "frisbee", "skis", "snowboard", "sports ball", "kite", "baseball bat",

+ "baseball glove", "skateboard", "surfboard", "tennis racket", "bottle",

+ "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple",

+ "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut",

+ "cake", "chair", "couch", "potted plant", "bed", "dining table", "toilet",

+ "tv", "laptop", "mouse", "remote", "keyboard", "cell phone", "microwave",

+ "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors",

+ "teddy bear", "hair drier", "toothbrush"};

+

+ // Load the ONNX model

+ OrtEnvironment environment = OrtEnvironment.getEnvironment();

+ OrtSession.SessionOptions sessionOptions = new OrtSession.SessionOptions();

+

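+ // To use the GPU, CUDA must be installed locally and pom.xml modified; the program also runs without it

+ // sessionOptions.addCUDA(0);

+ // In real projects, video detection should run on the GPU, and the frame queue must be kept from backing up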

+

+ OrtSession session = environment.createSession(model_path, sessionOptions);

+ // Print basic model information

+ session.getInputInfo().keySet().forEach(x -> {

+ try {

+ System.out.println("input name = " + x);

+ System.out.println(session.getInputInfo().get(x).getInfo().toString());

+ } catch (OrtException e) {

+ throw new RuntimeException(e);

+ }

+ });

+

+ // Load labels and colors

+ ODConfig odConfig = new ODConfig();

+ VideoCapture video = new VideoCapture();

+

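+ // An RTMP or RTSP stream also works: video.open("rtmp://192.168.1.100/live/test") (Hikvision, Dahua, Lechange, Uniview cameras, NVRs, and so on)

+ // video.open("rtsp://192.168.1.100/live/test")

+ // A static video file also works: video.open("video/car3.mp4"); FLV and similar formats too

+ // H.265 video encoding is not supported; if playback fails or the program hangs, re-encode the video

+ video.open(0); // open camera 0 on this machine

+ //video.open("images/car2.mp4"); // choppy without the GPU

+

+ // The annotated video can also be forwarded over RTMP to another streaming server for remote preview; this requires ffmpeg to encode the frame sequence into FLV or a similar format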

+ if (!video.isOpened()) {

+ System.err.println("Failed to open the video stream: no camera detected. Test the URL in VLC first. Falling back to the default test video for preview.");

+ video.open("video/car3.mp4");

+ }

+

+ // Define the box line thickness, font size, font face, and font color up front (scaling them with the frame size works better)

+ int minDwDh = Math.min((int)video.get(Videoio.CAP_PROP_FRAME_WIDTH), (int)video.get(Videoio.CAP_PROP_FRAME_HEIGHT));

+ int thickness = minDwDh / ODConfig.lineThicknessRatio;

+ double fontSize = minDwDh / ODConfig.fontSizeRatio;

+ int fontFace = Imgproc.FONT_HERSHEY_SIMPLEX;

+

+ Mat img = new Mat();

+

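+ // Frame skipping for detection, typically set to 3: the picture barely changes within a few milliseconds, so running inference on every frame is pointless and wastes compute

+ int detect_skip = 4;

+

+ // Frame-skip counter

+ int detect_skip_index = 1;

+

+ // Inference result of the most recently processed frame

+ float[][] outputData = null;

+

+ // The current frame; the previous frame could also be cached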

+ Mat image;

+

+ Letterbox letterbox = new Letterbox();

+ OnnxTensor tensor;

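+ // Multithreading plus the GPU can raise the frame rate: one thread pulls the stream, another runs inference, and they exchange data through a variable or a queue. This example uses a single thread only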

+ while (video.read(img)) {

+ if ((detect_skip_index % detect_skip == 0) || outputData == null){

+ image = img.clone();

+ image = letterbox.letterbox(image);

+ Imgproc.cvtColor(image, image, Imgproc.COLOR_BGR2RGB);

+

+ image.convertTo(image, CvType.CV_32FC1, 1. / 255);

+ float[] whc = new float[3 * 640 * 640];

+ image.get(0, 0, whc);

+ float[] chw = ImageUtil.whc2cwh(whc);

+

+ detect_skip_index = 1;

+

+ FloatBuffer inputBuffer = FloatBuffer.wrap(chw);

+ tensor = OnnxTensor.createTensor(environment, inputBuffer, new long[]{1, 3, 640, 640});

+

+ HashMap<String, OnnxTensor> stringOnnxTensorHashMap = new HashMap<>();

+ stringOnnxTensorHashMap.put(session.getInputInfo().keySet().iterator().next(), tensor);

+

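+ // Run inference

+ // Inference is essentially multi-dimensional matrix arithmetic, which GPUs are built for, so GPU utilization stays low. The CPU also works, but 100% CPU utilization is normal there and nothing to worry about.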

+ OrtSession.Result output = session.run(stringOnnxTensorHashMap);

+

+ // Fetch the result and cache it

+ outputData = (float[][]) output.get(0).getValue();

+ }else{

+ detect_skip_index = detect_skip_index + 1;

+ }

+

+ current.clear();

+ for(float[] x : outputData){

+

+ ODResult odResult = new ODResult(x);

+ String boxName = labels[odResult.getClsId()];

+ // Business logic goes here: comment out the code below and add your own alert logic based on the detected object classes

+ if(current.containsKey(boxName)){

+ current.put(boxName,current.get(boxName)+1);

+ }else{

+ current.put(boxName,1);

+ }

+

+ // Draw the bounding box

+ Point topLeft = new Point((odResult.getX0() - letterbox.getDw()) / letterbox.getRatio(), (odResult.getY0() - letterbox.getDh()) / letterbox.getRatio());

+ Point bottomRight = new Point((odResult.getX1() - letterbox.getDw()) / letterbox.getRatio(), (odResult.getY1() - letterbox.getDh()) / letterbox.getRatio());

+ Scalar color = new Scalar(odConfig.getOtherColor(odResult.getClsId()));

+

+ Imgproc.rectangle(img, topLeft, bottomRight, color, thickness);

+ // Write the label above the box

+

+ Point boxNameLoc = new Point((odResult.getX0() - letterbox.getDw()) / letterbox.getRatio(), (odResult.getY0() - letterbox.getDh()) / letterbox.getRatio() - 3);

+

+ // Other text or data (IoT device readings, for example) can also be overlaid on the frame here

+ Imgproc.putText(img, boxName, boxNameLoc, fontFace, 0.7, color, thickness);

+ // System.out.println(odResult+" "+ boxName);

+

+ }

+

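+ // You can count how many consecutive frames a class appears in to avoid sudden false alerts caused by model inaccuracy

+ // Alerting only after a class has appeared (or disappeared) for several consecutive frames is more stable; that part is left to you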

+ StringBuilder info = new StringBuilder();

+

+ for (Map.Entry<String, Integer> entry : last.entrySet()) {

+ if(!current.containsKey(entry.getKey())){

+ System.err.println(entry.getValue() + " x " + entry.getKey() + " left");

+ count.remove(entry.getKey());

+ }

+ }

+

+ for (Map.Entry<String, Integer> entry : current.entrySet()) {

+

+ // Compare against the count recorded for the previous frame (0 if the class was absent)

+ int lastCount = last.get(entry.getKey()) == null ? 0 : last.get(entry.getKey());

+ int currentCount = entry.getValue();

+ if (lastCount < currentCount) {

+ System.err.println((currentCount - lastCount) + " x " + entry.getKey() + " appeared");

+ }

+/* info.append(" ");

+ info.append(entry.getKey());

+ info.append(" : ");

+ info.append(currentCount);*/

+ }

+ last.clear();

+ last.putAll(current);

+/* Point boxNameLoc = new Point(20, 20);

+ Scalar color = new Scalar(odConfig.getOtherColor(0));

+ Imgproc.putText(img, info.toString(), boxNameLoc, fontFace, 0.6, color, thickness);*/

+ // Server deployment: a server has no desktop, so the preview window cannot be shown; comment out the code below

+ HighGui.imshow("result", img);

+

+ // Press any key (several times if needed) to close the preview window and end the program

+ if(HighGui.waitKey(1) != -1){

+ break;

+ }

+ }

+

+ HighGui.destroyAllWindows();

+ video.release();

+ System.exit(0);

+

+ }

+

+ class Identify{

+ int count;

+ int number;

+ }

+}

+

+
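Reviewer sketch (not part of this patch): the comments in `CameraDetectionWarnDemo` suggest alerting only after a class has been present or absent for several consecutive frames. One possible shape for that is below. `AlertDebouncer`, `requiredFrames`, and `update` are hypothetical names introduced for illustration; the demo would feed it `current.keySet()` once per frame.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Hypothetical helper: reports a label only after N consecutive frames of presence or absence. */
final class AlertDebouncer {
    private final int requiredFrames;
    private final Map<String, Integer> seenStreak = new HashMap<>();
    private final Map<String, Integer> missingStreak = new HashMap<>();
    private final Set<String> active = new HashSet<>();

    AlertDebouncer(int requiredFrames) {
        if (requiredFrames < 1) {
            throw new IllegalArgumentException("requiredFrames must be >= 1");
        }
        this.requiredFrames = requiredFrames;
    }

    /** Feed the labels detected in one frame; returns the events confirmed by this frame. */
    List<String> update(Set<String> labelsInFrame) {
        List<String> events = new ArrayList<>();
        for (String label : labelsInFrame) {
            missingStreak.remove(label);                       // any sighting resets the absence streak
            int streak = seenStreak.merge(label, 1, Integer::sum);
            if (streak >= requiredFrames && active.add(label)) {
                events.add(label + " appeared");               // fires once per confirmed appearance
            }
        }
        Set<String> tracked = new HashSet<>(seenStreak.keySet());
        tracked.addAll(active);
        for (String label : tracked) {
            if (labelsInFrame.contains(label)) {
                continue;
            }
            seenStreak.remove(label);                          // a gap resets the presence streak
            if (active.contains(label)) {
                int streak = missingStreak.merge(label, 1, Integer::sum);
                if (streak >= requiredFrames) {
                    active.remove(label);
                    missingStreak.remove(label);
                    events.add(label + " left");
                }
            }
        }
        return events;
    }
}
```

A single-frame false positive never reaches `requiredFrames`, so it produces no event. This still tracks classes rather than individual objects, so it does not replace object tracking.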

diff --git a/src/main/java/cn/halashuo/ObjectDetection_1_25200_n.java b/src/main/java/cn/ck/ObjectDetection_1_25200_n.java

similarity index 92%

rename from src/main/java/cn/halashuo/ObjectDetection_1_25200_n.java

rename to src/main/java/cn/ck/ObjectDetection_1_25200_n.java

index e22a433185321f0bfdad8b4e1efa3e1314d1b61d..9d8936aa2923592866564b5e204a321a60411e94 100644

--- a/src/main/java/cn/halashuo/ObjectDetection_1_25200_n.java

+++ b/src/main/java/cn/ck/ObjectDetection_1_25200_n.java

@@ -1,12 +1,12 @@

-package cn.halashuo;

+package cn.ck;

import ai.onnxruntime.OnnxTensor;

import ai.onnxruntime.OrtEnvironment;

import ai.onnxruntime.OrtException;

import ai.onnxruntime.OrtSession;

-import cn.halashuo.config.ODConfig;

-import cn.halashuo.domain.Detection;

-import cn.halashuo.utils.Letterbox;

+import cn.ck.config.ODConfig;

+import cn.ck.domain.Detection;

+import cn.ck.utils.Letterbox;

import org.opencv.core.Mat;

import org.opencv.core.Point;

import org.opencv.core.Scalar;

@@ -28,9 +28,9 @@ import java.util.stream.Collectors;

public class ObjectDetection_1_25200_n {

static {

- // Load the OpenCV native library; this only runs on Windows, on Linux the Linux native library must be loaded instead

- URL url = ClassLoader.getSystemResource("lib/opencv_java460.dll");

- System.load(url.getPath());

+ // Load the OpenCV native library

+ //System.load(ClassLoader.getSystemResource("lib/opencv_java470-无用.dll").getPath());

+ nu.pattern.OpenCV.loadLocally();

}

public static void main(String[] args) throws OrtException {

@@ -39,7 +39,7 @@ public class ObjectDetection_1_25200_n {

float confThreshold = 0.35F;

- float nmsThreshold = 0.55F;

+ float nmsThreshold = 0.45F;

String[] labels = {"no_helmet", "helmet"};

@@ -47,7 +47,7 @@ public class ObjectDetection_1_25200_n {

OrtEnvironment environment = OrtEnvironment.getEnvironment();

OrtSession.SessionOptions sessionOptions = new OrtSession.SessionOptions();

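- // To use the GPU, CUDA must be installed locally and pom.xml modified

+ // To use the GPU, CUDA must be installed locally and pom.xml modified; the program also runs without it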

// sessionOptions.addCUDA(0);

OrtSession session = environment.createSession(model_path, sessionOptions);

@@ -76,7 +76,6 @@ public class ObjectDetection_1_25200_n {

Imgproc.cvtColor(image, image, Imgproc.COLOR_BGR2RGB);

-

// img.convertTo(img, CvType.CV_32FC1, 1. / 255);

// float[] whc = new float[NUM_INPUT_ELEMENTS];

// img.get(0, 0, whc);

@@ -87,9 +86,6 @@ public class ObjectDetection_1_25200_n {

// Define the box line thickness, font size, font face, and font color up front (scaling them with the image size works better)

int minDwDh = Math.min(img.width(), img.height());

int thickness = minDwDh/ODConfig.lineThicknessRatio;

- double fontSize = minDwDh/ODConfig.fontSizeRatio;

- int fontFace = Imgproc.FONT_HERSHEY_SIMPLEX;

- Scalar fontColor = new Scalar(255, 255, 255);

long start_time = System.currentTimeMillis();

// Resize the image (letterbox)

Letterbox letterbox = new Letterbox();

@@ -151,25 +147,28 @@ public class ObjectDetection_1_25200_n {

bboxes = nonMaxSuppression(bboxes, nmsThreshold);

for (float[] bbox : bboxes) {

String labelString = labels[entry.getKey()];

- detections.add(new Detection(labelString, Arrays.copyOfRange(bbox, 0, 4), bbox[4]));

+ detections.add(new Detection(labelString,entry.getKey(), Arrays.copyOfRange(bbox, 0, 4), bbox[4]));

}

}

+

for (Detection detection : detections) {

float[] bbox = detection.getBbox();

System.out.println(detection.toString());

// Draw the bounding box

Point topLeft = new Point((bbox[0]-dw)/ratio, (bbox[1]-dh)/ratio);

Point bottomRight = new Point((bbox[2]-dw)/ratio, (bbox[3]-dh)/ratio);

- Scalar color = new Scalar(odConfig.getColor(1));

+ Scalar color = new Scalar(odConfig.getOtherColor(detection.getClsId()));

Imgproc.rectangle(img, topLeft, bottomRight, color, thickness);

// Write the label above the box

Point boxNameLoc = new Point((bbox[0]-dw)/ratio, (bbox[1]-dh)/ratio-3);

- Imgproc.putText(img, detection.getLabel(), boxNameLoc, fontFace, fontSize, fontColor, thickness);

+ Imgproc.putText(img, detection.getLabel(), boxNameLoc, Imgproc.FONT_HERSHEY_SIMPLEX, 0.7, color, thickness);

}

System.out.printf("time:%d ms.", (System.currentTimeMillis() - start_time));

+

System.out.println();

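+ // Server deployment: a server has no desktop, so the preview window cannot be shown; comment out the code below

// Save the image to the same directory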

// Imgcodecs.imwrite(ODConfig.savePicPath, img);

@@ -183,8 +182,6 @@ public class ObjectDetection_1_25200_n {

}

-

-

public static void scaleCoords(float[] bbox, float orgW, float orgH, float padW, float padH, float gain) {

// xmin, ymin, xmax, ymax -> (xmin_org, ymin_org, xmax_org, ymax_org)

bbox[0] = Math.max(0, Math.min(orgW - 1, (bbox[0] - padW) / gain));

@@ -206,13 +203,10 @@ public class ObjectDetection_1_25200_n {

public static List<float[]> nonMaxSuppression(List<float[]> bboxes, float iouThreshold) {

-

List<float[]> bestBboxes = new ArrayList<>();

-

bboxes.sort(Comparator.comparing(a -> a[4]));

-

while (!bboxes.isEmpty()) {

float[] bestBbox = bboxes.remove(bboxes.size() - 1);

bestBboxes.add(bestBbox);

@@ -238,6 +232,7 @@ public class ObjectDetection_1_25200_n {

}

+ // Return the index of the maximum value

public static int argmax(float[] a) {

float re = -Float.MAX_VALUE;

int arg = -1;

diff --git a/src/main/java/cn/ck/ObjectDetection_1_n_8400.java b/src/main/java/cn/ck/ObjectDetection_1_n_8400.java

new file mode 100644

index 0000000000000000000000000000000000000000..b66fc7033cf751caffb9bd1122a332ac4db4a6b6

--- /dev/null

+++ b/src/main/java/cn/ck/ObjectDetection_1_n_8400.java

@@ -0,0 +1,284 @@

+package cn.ck;

+

+import ai.onnxruntime.OnnxTensor;

+import ai.onnxruntime.OrtEnvironment;

+import ai.onnxruntime.OrtException;

+import ai.onnxruntime.OrtSession;

+import cn.ck.config.ODConfig;

+import cn.ck.domain.Detection;

+import cn.ck.utils.Letterbox;

+import org.opencv.core.Mat;

+import org.opencv.core.Point;

+import org.opencv.core.Scalar;

+import org.opencv.highgui.HighGui;

+import org.opencv.imgcodecs.Imgcodecs;

+import org.opencv.imgproc.Imgproc;

+

+import java.io.File;

+import java.nio.FloatBuffer;

+import java.util.*;

+import java.util.regex.Matcher;

+import java.util.regex.Pattern;

+import java.util.stream.Collectors;

+

+/**

+ * yolov8

+ *

+ * Author: 常康

+ */

+public class ObjectDetection_1_n_8400 {

+

+ static {

+ // Load the OpenCV native library

+ //System.load(ClassLoader.getSystemResource("lib/opencv_java470-无用.dll").getPath());

+ nu.pattern.OpenCV.loadLocally();

+ }

+

+ public static void main(String[] args) throws OrtException {

+

+ String model_path = "src\\main\\resources\\model\\yolov8s.onnx";

+

+ List<double[]> colors = new ArrayList<>();

+

+ float confThreshold = 0.35F;

+

+ float nmsThreshold = 0.55F;

+

+ String[] labels = null;

+

+ // Load the ONNX model

+ OrtEnvironment environment = OrtEnvironment.getEnvironment();

+ OrtSession.SessionOptions sessionOptions = new OrtSession.SessionOptions();

+

+ // To use the GPU, CUDA must be installed locally and pom.xml modified; the program also runs without it

+ // sessionOptions.addCUDA(0);

+

+ OrtSession session = environment.createSession(model_path, sessionOptions);

+ String meteStr = session.getMetadata().getCustomMetadata().get("names");

+

+

+ labels = new String[meteStr.split(",").length];

+

+

+ Pattern pattern = Pattern.compile("'([^']*)'");

+ Matcher matcher = pattern.matcher(meteStr);

+

+ int h = 0;

+ while (matcher.find()) {

+ labels[h] = matcher.group(1);

+ Random random = new Random();

+ double[] color = {random.nextDouble()*256, random.nextDouble()*256, random.nextDouble()*256};

+ colors.add(color);

+ h++;

+ }

+ // Print basic model information

+ session.getInputInfo().keySet().forEach(x-> {

+ try {

+ System.out.println("input name = " + x);

+ System.out.println(session.getInputInfo().get(x).getInfo().toString());

+ } catch (OrtException e) {

+ throw new RuntimeException(e);

+ }

+ });

+

+ // Directory containing the images to run detection on

+ String imagePath = "images";

+

+

+ Map<String, String> map = getImagePathMap(imagePath);

+ for(String fileName : map.keySet()){

+ String imageFilePath = map.get(fileName);

+ System.out.println(imageFilePath);

+ // Read the image

+ Mat img = Imgcodecs.imread(imageFilePath);

+ Mat image = img.clone();

+ Imgproc.cvtColor(image, image, Imgproc.COLOR_BGR2RGB);

+

+

+ // Define the box line thickness, font size, font face, and font color up front (scaling them with the image size works better)

+ int minDwDh = Math.min(img.width(), img.height());

+ int thickness = minDwDh/ODConfig.lineThicknessRatio;

+ long start_time = System.currentTimeMillis();

+ // Resize the image (letterbox)

+ Letterbox letterbox = new Letterbox();

+ image = letterbox.letterbox(image);

+

+ double ratio = letterbox.getRatio();

+ double dw = letterbox.getDw();

+ double dh = letterbox.getDh();

+ int rows = letterbox.getHeight();

+ int cols = letterbox.getWidth();

+ int channels = image.channels();

+

+ // Copy the Mat pixel values into a float[] array

+ float[] pixels = new float[channels * rows * cols];

+ for (int i = 0; i < rows; i++) {

+ for (int j = 0; j < cols; j++) {

+ double[] pixel = image.get(i, j);

+ for (int k = 0; k < channels; k++) {

+ // Writing channel-major (CHW) here is equivalent to also applying image.transpose((2, 0, 1))

+ pixels[rows*cols*k+i*cols+j] = (float) pixel[k]/255.0f;

+ }

+ }

+ }

+

+ // Create the OnnxTensor

+ long[] shape = { 1L, (long)channels, (long)rows, (long)cols };

+ OnnxTensor tensor = OnnxTensor.createTensor(environment, FloatBuffer.wrap(pixels), shape);

+ HashMap<String, OnnxTensor> stringOnnxTensorHashMap = new HashMap<>();

+ stringOnnxTensorHashMap.put(session.getInputInfo().keySet().iterator().next(), tensor);

+

+ // Run inference

+ OrtSession.Result output = session.run(stringOnnxTensorHashMap);

+ float[][] outputData = ((float[][][])output.get(0).getValue())[0];

+

+ outputData = transposeMatrix(outputData);

+ Map<Integer, List<float[]>> class2Bbox = new HashMap<>();

+

+ for (float[] bbox : outputData) {

+

+

+ float[] conditionalProbabilities = Arrays.copyOfRange(bbox, 4, bbox.length);

+ int label = argmax(conditionalProbabilities);

+ float conf = conditionalProbabilities[label];

+ if (conf < confThreshold) continue;

+

+ bbox[4] = conf;

+

+ // xywh to (x1, y1, x2, y2)

+ xywh2xyxy(bbox);

+

+ // skip invalid predictions

+ if (bbox[0] >= bbox[2] || bbox[1] >= bbox[3]) continue;

+

+

+ class2Bbox.putIfAbsent(label, new ArrayList<>());

+ class2Bbox.get(label).add(bbox);

+ }

+

+ List<Detection> detections = new ArrayList<>();

+ for (Map.Entry<Integer, List<float[]>> entry : class2Bbox.entrySet()) {

+ int label = entry.getKey();

+ List<float[]> bboxes = entry.getValue();

+ bboxes = nonMaxSuppression(bboxes, nmsThreshold);

+ for (float[] bbox : bboxes) {

+ String labelString = labels[label];

+ detections.add(new Detection(labelString,entry.getKey(), Arrays.copyOfRange(bbox, 0, 4), bbox[4]));

+ }

+ }

+

+

+ for (Detection detection : detections) {

+ float[] bbox = detection.getBbox();

+ System.out.println(detection.toString());

+ // Draw the bounding box

+ Point topLeft = new Point((bbox[0]-dw)/ratio, (bbox[1]-dh)/ratio);

+ Point bottomRight = new Point((bbox[2]-dw)/ratio, (bbox[3]-dh)/ratio);

+ Scalar color = new Scalar(colors.get(detection.getClsId()));

+ Imgproc.rectangle(img, topLeft, bottomRight, color, thickness);

+ // Write the label above the box

+ Point boxNameLoc = new Point((bbox[0]-dw)/ratio, (bbox[1]-dh)/ratio-3);

+

+ Imgproc.putText(img, detection.getLabel(), boxNameLoc, Imgproc.FONT_HERSHEY_SIMPLEX, 0.7, color, thickness);

+ }

+ System.out.printf("time:%d ms.", (System.currentTimeMillis() - start_time));

+

+ System.out.println();

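+ // Server deployment: a server has no desktop, so the preview window cannot be shown; comment out the code below

+

+ // Save the image to the same directory

+ // Imgcodecs.imwrite(ODConfig.savePicPath, img);

+ // Show the image in a window

+ HighGui.imshow("Display Image", img);

+ // Press any key to close the window and continue; the program exits after the last image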

+ HighGui.waitKey();

+ }

+ HighGui.destroyAllWindows();

+ System.exit(0);

+

+ }

+

+ public static void scaleCoords(float[] bbox, float orgW, float orgH, float padW, float padH, float gain) {

+ // xmin, ymin, xmax, ymax -> (xmin_org, ymin_org, xmax_org, ymax_org)

+ bbox[0] = Math.max(0, Math.min(orgW - 1, (bbox[0] - padW) / gain));

+ bbox[1] = Math.max(0, Math.min(orgH - 1, (bbox[1] - padH) / gain));

+ bbox[2] = Math.max(0, Math.min(orgW - 1, (bbox[2] - padW) / gain));

+ bbox[3] = Math.max(0, Math.min(orgH - 1, (bbox[3] - padH) / gain));

+ }

+ public static void xywh2xyxy(float[] bbox) {

+ float x = bbox[0];

+ float y = bbox[1];

+ float w = bbox[2];

+ float h = bbox[3];

+

+ bbox[0] = x - w * 0.5f;

+ bbox[1] = y - h * 0.5f;

+ bbox[2] = x + w * 0.5f;

+ bbox[3] = y + h * 0.5f;

+ }

+

+ public static float[][] transposeMatrix(float [][] m){

+ float[][] temp = new float[m[0].length][m.length];

+ for (int i = 0; i < m.length; i++)

+ for (int j = 0; j < m[0].length; j++)

+ temp[j][i] = m[i][j];

+ return temp;

+ }

+

+ public static List<float[]> nonMaxSuppression(List<float[]> bboxes, float iouThreshold) {

+

+ List<float[]> bestBboxes = new ArrayList<>();

+

+ bboxes.sort(Comparator.comparing(a -> a[4]));

+

+ while (!bboxes.isEmpty()) {

+ float[] bestBbox = bboxes.remove(bboxes.size() - 1);

+ bestBboxes.add(bestBbox);

+ bboxes = bboxes.stream().filter(a -> computeIOU(a, bestBbox) < iouThreshold).collect(Collectors.toList());

+ }

+

+ return bestBboxes;

+ }

+

+ public static float computeIOU(float[] box1, float[] box2) {

+

+ float area1 = (box1[2] - box1[0]) * (box1[3] - box1[1]);

+ float area2 = (box2[2] - box2[0]) * (box2[3] - box2[1]);

+

+ float left = Math.max(box1[0], box2[0]);

+ float top = Math.max(box1[1], box2[1]);

+ float right = Math.min(box1[2], box2[2]);

+ float bottom = Math.min(box1[3], box2[3]);

+

+ float interArea = Math.max(right - left, 0) * Math.max(bottom - top, 0);

+ float unionArea = area1 + area2 - interArea;

+ return Math.max(interArea / unionArea, 1e-8f);

+

+ }

+

+ // Return the index of the maximum value

+ public static int argmax(float[] a) {

+ float re = -Float.MAX_VALUE;

+ int arg = -1;

+ for (int i = 0; i < a.length; i++) {

+ if (a[i] >= re) {

+ re = a[i];

+ arg = i;

+ }

+ }

+ return arg;

+ }

+

+ public static Map<String, String> getImagePathMap(String imagePath){

+ Map<String, String> map = new TreeMap<>();

+ File file = new File(imagePath);

+ if(file.isFile()){

+ map.put(file.getName(), file.getAbsolutePath());

+ }else if(file.isDirectory()){

+ for(File tmpFile : Objects.requireNonNull(file.listFiles())){

+ map.putAll(getImagePathMap(tmpFile.getPath()));

+ }

+ }

+ return map;

+ }

+}
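Reviewer sketch (not part of this patch): `nonMaxSuppression` and `computeIOU` above operate on `float[]` boxes laid out as `[x0, y0, x1, y1, confidence]`. The standalone version below shows the same greedy scheme on a tiny input, assuming that layout. `NmsSketch`, `iou`, and `nms` are illustrative names, and this variant copies the input list instead of mutating it.

```java
import java.util.ArrayList;
import java.util.List;

/** Illustrative greedy NMS over boxes laid out as [x0, y0, x1, y1, confidence]. */
final class NmsSketch {

    /** Intersection over union of two axis-aligned boxes; 0 when the union is empty. */
    static float iou(float[] a, float[] b) {
        float interW = Math.max(0f, Math.min(a[2], b[2]) - Math.max(a[0], b[0]));
        float interH = Math.max(0f, Math.min(a[3], b[3]) - Math.max(a[1], b[1]));
        float inter = interW * interH;
        float union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter;
        return union <= 0f ? 0f : inter / union;
    }

    /** Keeps the most confident box, drops every box overlapping it at or above the threshold, repeats. */
    static List<float[]> nms(List<float[]> boxes, float iouThreshold) {
        List<float[]> candidates = new ArrayList<>(boxes);      // work on a copy
        candidates.sort((p, q) -> Float.compare(q[4], p[4]));   // highest confidence first
        List<float[]> kept = new ArrayList<>();
        while (!candidates.isEmpty()) {
            float[] best = candidates.remove(0);
            kept.add(best);
            candidates.removeIf(c -> iou(c, best) >= iouThreshold);
        }
        return kept;
    }
}
```

With boxes `{0,0,10,10,0.9}` and `{1,1,11,11,0.8}` the overlap is 81 / 119, roughly 0.68, so a threshold of 0.45 keeps only the first; a distant box such as `{20,20,30,30,0.7}` survives. Running NMS per class, as the patch does, keeps overlapping boxes of different classes from suppressing each other.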

diff --git a/src/main/java/cn/halashuo/ObjectDetection_n_7.java b/src/main/java/cn/ck/ObjectDetection_n_7.java

similarity index 87%

rename from src/main/java/cn/halashuo/ObjectDetection_n_7.java

rename to src/main/java/cn/ck/ObjectDetection_n_7.java

index 66f0d7aa76d18cb52e0389efdbb0999c20845f12..bc790345f29820b948f2c36ee06c4b0cdb8503d7 100644

--- a/src/main/java/cn/halashuo/ObjectDetection_n_7.java

+++ b/src/main/java/cn/ck/ObjectDetection_n_7.java

@@ -1,9 +1,9 @@

-package cn.halashuo;

+package cn.ck;

import ai.onnxruntime.*;

-import cn.halashuo.domain.ODResult;

-import cn.halashuo.config.ODConfig;

-import cn.halashuo.utils.Letterbox;

+import cn.ck.domain.ODResult;

+import cn.ck.config.ODConfig;

+import cn.ck.utils.Letterbox;

import org.opencv.core.Mat;

import org.opencv.core.Point;

import org.opencv.core.Scalar;

@@ -24,9 +24,9 @@ import java.util.*;

public class ObjectDetection_n_7 {

static {

- // Load the OpenCV native library; this only runs on Windows, on Linux the Linux native library must be loaded instead

- URL url = ClassLoader.getSystemResource("lib/opencv_java460.dll");

- System.load(url.getPath());

+ // Load the OpenCV native library

+ //System.load(ClassLoader.getSystemResource("lib/opencv_java470-无用.dll").getPath());

+ nu.pattern.OpenCV.loadLocally();

}

public static void main(String[] args) throws OrtException {

@@ -37,7 +37,7 @@ public class ObjectDetection_n_7 {

OrtEnvironment environment = OrtEnvironment.getEnvironment();

OrtSession.SessionOptions sessionOptions = new OrtSession.SessionOptions();

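- // To use the GPU, CUDA must be installed locally and pom.xml modified

+ // To use the GPU, CUDA must be installed locally and pom.xml modified; the program also runs without it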

// sessionOptions.addCUDA(0);

OrtSession session = environment.createSession(model_path, sessionOptions);

@@ -57,6 +57,7 @@ public class ObjectDetection_n_7 {

// Load labels and colors

ODConfig odConfig = new ODConfig();

Map<String, String> map = getImagePathMap(imagePath);

+

for(String fileName : map.keySet()){

String imageFilePath = map.get(fileName);

System.out.println(imageFilePath);

@@ -69,9 +70,6 @@ public class ObjectDetection_n_7 {

// Define the box line thickness, font size, font face, and font color up front (scaling them with the image size works better)

int minDwDh = Math.min(img.width(), img.height());

int thickness = minDwDh/ODConfig.lineThicknessRatio;

- double fontSize = minDwDh/ODConfig.fontSizeRatio;

- int fontFace = Imgproc.FONT_HERSHEY_SIMPLEX;

- Scalar fontColor = new Scalar(255, 255, 255);

// The code above is static once initialized and does not need to be inside the loop, so it is excluded from the timing

long start_time = System.currentTimeMillis();

@@ -114,19 +112,19 @@ public class ObjectDetection_n_7 {

Arrays.stream(outputData).iterator().forEachRemaining(x->{

ODResult odResult = new ODResult(x);

- System.out.println(odResult);

// Draw the bounding box

Point topLeft = new Point((odResult.getX0()-dw)/ratio, (odResult.getY0()-dh)/ratio);

Point bottomRight = new Point((odResult.getX1()-dw)/ratio, (odResult.getY1()-dh)/ratio);

- Scalar color = new Scalar(odConfig.getColor(odResult.getClsId()));

+ Scalar color = new Scalar(odConfig.getOtherColor(odResult.getClsId()));

Imgproc.rectangle(img, topLeft, bottomRight, color, thickness);

// Write the label above the box

String boxName = odConfig.getName(odResult.getClsId());

Point boxNameLoc = new Point((odResult.getX0()-dw)/ratio, (odResult.getY0()-dh)/ratio-3);

- Imgproc.putText(img, boxName, boxNameLoc, fontFace, fontSize, fontColor, thickness);

+ Imgproc.putText(img, boxName, boxNameLoc, Imgproc.FONT_HERSHEY_SIMPLEX, 0.7, color, thickness);

+ System.out.println(odResult+" "+ boxName);

});

System.out.printf("time:%d ms.", (System.currentTimeMillis() - start_time));

System.out.println();

@@ -134,9 +132,13 @@ public class ObjectDetection_n_7 {

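// Save the image to the same directory

// Imgcodecs.imwrite(ODConfig.savePicPath, img);

// Show the image in a window

- HighGui.imshow("Display Image", img);

+

+ // Server deployment: a server has no desktop, so the preview window cannot be shown; comment out the code below

+ HighGui.imshow("result", img);

+

// Press any key to close the window and continue; the program exits after the last image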

HighGui.waitKey();

+

}

HighGui.destroyAllWindows();

System.exit(0);

diff --git a/src/main/java/cn/halashuo/config/ODConfig.java b/src/main/java/cn/ck/config/ODConfig.java

similarity index 79%

rename from src/main/java/cn/halashuo/config/ODConfig.java

rename to src/main/java/cn/ck/config/ODConfig.java

index 012e5f24c5b30745d6dba60561d6c8dc6fdc593d..40f945970819d65efdfd2ad52e43ac86b459c837 100644

--- a/src/main/java/cn/halashuo/config/ODConfig.java

+++ b/src/main/java/cn/ck/config/ODConfig.java

@@ -1,4 +1,4 @@

-package cn.halashuo.config;

+package cn.ck.config;

import java.util.Random;

import java.util.ArrayList;

@@ -11,9 +11,9 @@ public final class ODConfig {

public static final Integer lineThicknessRatio = 333;

- public static final Double fontSizeRatio = 1145.14;

+ public static final Double fontSizeRatio = 1080.0;

-/* private static final List<String> names = new ArrayList<>(Arrays.asList(

+ private static final List<String> default_names = new ArrayList<>(Arrays.asList(

"person", "bicycle", "car", "motorcycle", "airplane", "bus", "train",

"truck", "boat", "traffic light", "fire hydrant", "stop sign", "parking meter",

"bench", "bird", "cat", "dog", "horse", "sheep", "cow", "elephant", "bear",

@@ -25,7 +25,7 @@ public final class ODConfig {

"cake", "chair", "couch", "potted plant", "bed", "dining table", "toilet",

"tv", "laptop", "mouse", "remote", "keyboard", "cell phone", "microwave",

"oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors",

- "teddy bear", "hair drier", "toothbrush"));*/

+ "teddy bear", "hair drier", "toothbrush"));

private static final List<String> names = new ArrayList<>(Arrays.asList(

@@ -35,7 +35,7 @@ public final class ODConfig {

public ODConfig() {

this.colors = new HashMap<>();

- names.forEach(name->{

+ default_names.forEach(name->{

Random random = new Random();

double[] color = {random.nextDouble()*256, random.nextDouble()*256, random.nextDouble()*256};

colors.put(name, color);

@@ -49,4 +49,12 @@ public final class ODConfig {

public double[] getColor(int clsId) {

return colors.get(getName(clsId));

}

+

+ public double[] getNameColor(String Name){

+ return colors.get(Name);

+ }

+

+ public double[] getOtherColor(int clsId) {

+ return colors.get(default_names.get(clsId));

+ }

}

\ No newline at end of file

diff --git a/src/main/java/cn/halashuo/domain/Detection.java b/src/main/java/cn/ck/domain/Detection.java

similarity index 69%

rename from src/main/java/cn/halashuo/domain/Detection.java

rename to src/main/java/cn/ck/domain/Detection.java

index 5fb19ece3bc0d98c630b46296da2a9b8b85fa779..907f8ec8052990ffb36a84363b2bbf8b40c49994 100644

--- a/src/main/java/cn/halashuo/domain/Detection.java

+++ b/src/main/java/cn/ck/domain/Detection.java

@@ -1,16 +1,18 @@

-package cn.halashuo.domain;

+package cn.ck.domain;

public class Detection{

public String label;

+ private Integer clsId;

+

public float[] bbox;

public float confidence;

- public Detection(String label, float[] bbox, float confidence){

-

+ public Detection(String label,Integer clsId, float[] bbox, float confidence){

+ this.clsId = clsId;

this.label = label;

this.bbox = bbox;

this.confidence = confidence;

@@ -20,6 +22,14 @@ public class Detection{

}

+ public Integer getClsId() {

+ return clsId;

+ }

+

+ public void setClsId(Integer clsId) {

+ this.clsId = clsId;

+ }

+

public String getLabel() {

return label;

}

@@ -39,6 +49,7 @@ public class Detection{

@Override

public String toString() {

return " label="+label +

+ " \t clsId="+clsId +

" \t x0="+bbox[0] +

" \t y0="+bbox[1] +

" \t x1="+bbox[2] +

diff --git a/src/main/java/cn/halashuo/domain/ODResult.java b/src/main/java/cn/ck/domain/ODResult.java

similarity index 98%

rename from src/main/java/cn/halashuo/domain/ODResult.java

rename to src/main/java/cn/ck/domain/ODResult.java

index 9526d71b48fb35f89584741bcd4c8dd10a937545..860f951431ccfe66910170e6f1a12f87a0760f28 100644

--- a/src/main/java/cn/halashuo/domain/ODResult.java

+++ b/src/main/java/cn/ck/domain/ODResult.java

@@ -1,4 +1,4 @@

-package cn.halashuo.domain;

+package cn.ck.domain;

import java.text.DecimalFormat;

diff --git a/src/main/java/cn/ck/utils/9457f0e295d0a8a5c9fd0e28cdf7ea0.png b/src/main/java/cn/ck/utils/9457f0e295d0a8a5c9fd0e28cdf7ea0.png

new file mode 100644

index 0000000000000000000000000000000000000000..a92cf0ed784071540e939f06749e8739f6c443ab

Binary files /dev/null and b/src/main/java/cn/ck/utils/9457f0e295d0a8a5c9fd0e28cdf7ea0.png differ

diff --git a/src/main/java/cn/halashuo/utils/ImageUtil.java b/src/main/java/cn/ck/utils/ImageUtil.java

similarity index 75%

rename from src/main/java/cn/halashuo/utils/ImageUtil.java

rename to src/main/java/cn/ck/utils/ImageUtil.java

index 9263618822c9426ed33cb5a67b824387b7b5fbbf..ba63a53f5485fde4e2115bb3054385bf847594ec 100644

--- a/src/main/java/cn/halashuo/utils/ImageUtil.java

+++ b/src/main/java/cn/ck/utils/ImageUtil.java

@@ -1,6 +1,6 @@

-package cn.halashuo.utils;

+package cn.ck.utils;

-import cn.halashuo.domain.Detection;

+import cn.ck.domain.Detection;

import org.opencv.core.*;

import org.opencv.imgproc.Imgproc;

@@ -68,6 +68,27 @@ public class ImageUtil {

}

}

+

+ public void xywh2xyxy(float[] bbox) {

+ float x = bbox[0];

+ float y = bbox[1];

+ float w = bbox[2];

+ float h = bbox[3];

+

+ bbox[0] = x - w * 0.5f;

+ bbox[1] = y - h * 0.5f;

+ bbox[2] = x + w * 0.5f;

+ bbox[3] = y + h * 0.5f;

+ }

+

+ public void scaleCoords(float[] bbox, float orgW, float orgH, float padW, float padH, float gain) {

+ // xmin, ymin, xmax, ymax -> (xmin_org, ymin_org, xmax_org, ymax_org)

+ bbox[0] = Math.max(0, Math.min(orgW - 1, (bbox[0] - padW) / gain));

+ bbox[1] = Math.max(0, Math.min(orgH - 1, (bbox[1] - padH) / gain));

+ bbox[2] = Math.max(0, Math.min(orgW - 1, (bbox[2] - padW) / gain));

+ bbox[3] = Math.max(0, Math.min(orgH - 1, (bbox[3] - padH) / gain));

+ }

+

public static float[] whc2cwh(float[] src) {

float[] chw = new float[src.length];

int j = 0;

@@ -98,11 +119,11 @@ public class ImageUtil {

float[] bbox = detection.getBbox();

Scalar color = new Scalar(249, 218, 60);

- Imgproc.rectangle(img, //Matrix obj of the image

- new Point(bbox[0], bbox[1]), //p1

- new Point(bbox[2], bbox[3]), //p2

- color, //Scalar object for color

- 2 //Thickness of the line

+ Imgproc.rectangle(img,

+ new Point(bbox[0], bbox[1]),

+ new Point(bbox[2], bbox[3]),

+ color,

+ 2

);

Imgproc.putText(

img,
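Reviewer sketch (not part of this patch): `ImageUtil.whc2cwh` is used by the video demos to turn OpenCV's interleaved pixel buffer into the planar layout expected by a `{1, 3, 640, 640}` tensor. The snippet below illustrates that reordering on its own. `LayoutSketch` and `hwcToChw` are hypothetical names, and the explicit `channels` parameter is an assumption added for clarity.

```java
/** Illustrative reordering of an interleaved (HWC) pixel buffer into planar (CHW) order. */
final class LayoutSketch {

    /**
     * @param hwc      pixel values stored as c0,c1,c2, c0,c1,c2, ... one group per pixel
     * @param channels number of channels per pixel (3 for RGB)
     * @return the same values stored as all c0 first, then all c1, then all c2
     */
    static float[] hwcToChw(float[] hwc, int channels) {
        if (channels <= 0 || hwc.length % channels != 0) {
            throw new IllegalArgumentException("buffer length must be a multiple of channels");
        }
        int pixels = hwc.length / channels;
        float[] chw = new float[hwc.length];
        for (int p = 0; p < pixels; p++) {
            for (int c = 0; c < channels; c++) {
                chw[c * pixels + p] = hwc[p * channels + c];
            }
        }
        return chw;
    }
}
```

For two RGB pixels stored as `{1, 2, 3, 4, 5, 6}` the planar result is `{1, 4, 2, 5, 3, 6}`: both red values, then both green, then both blue.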

diff --git a/src/main/java/cn/halashuo/utils/Letterbox.java b/src/main/java/cn/ck/utils/Letterbox.java

similarity index 98%

rename from src/main/java/cn/halashuo/utils/Letterbox.java

rename to src/main/java/cn/ck/utils/Letterbox.java

index ab03142e626311aa74171a4d4a1152c44a2584a0..6fe79d153b23a3133dbcac2cc6beb0ba2d977116 100644

--- a/src/main/java/cn/halashuo/utils/Letterbox.java

+++ b/src/main/java/cn/ck/utils/Letterbox.java

@@ -1,4 +1,4 @@

-package cn.halashuo.utils;

+package cn.ck.utils;

import org.opencv.core.Core;

import org.opencv.core.Mat;

diff --git a/src/main/java/cn/ck/utils/RTSPStreamer.ava b/src/main/java/cn/ck/utils/RTSPStreamer.ava

new file mode 100644

index 0000000000000000000000000000000000000000..d487e05d543db465840bebdcfab555c037775d7b

--- /dev/null

+++ b/src/main/java/cn/ck/utils/RTSPStreamer.ava

@@ -0,0 +1,196 @@

+package cn.ck.utils;

+import ai.onnxruntime.OnnxTensor;

+import ai.onnxruntime.OrtEnvironment;

+import ai.onnxruntime.OrtException;

+import ai.onnxruntime.OrtSession;

+import cn.ck.config.ODConfig;

+import org.bytedeco.ffmpeg.global.avcodec;

+import org.bytedeco.javacv.FFmpegFrameRecorder;

+import org.bytedeco.javacv.Frame;

+import org.bytedeco.javacv.FrameRecorder;

+import org.bytedeco.javacv.OpenCVFrameConverter;

+import org.opencv.core.CvType;

+import org.opencv.core.Mat;

+import org.opencv.core.Point;

+import org.opencv.core.Scalar;

+import org.opencv.imgproc.Imgproc;

+import org.opencv.videoio.VideoCapture;

+import org.opencv.videoio.Videoio;

+

+import java.nio.FloatBuffer;

+import java.util.HashMap;

+

+

+

+/*

+<dependency>

+ <groupId>org.bytedeco</groupId>

+ <artifactId>javacv-platform</artifactId>

+ <version>1.5.7</version>

+</dependency>

+*/

+

+// A low-efficiency ffmpeg example that only illustrates the approach

+// Playback tool: flv

+

+public class RTSPStreamer {

+

+ static Mat img = null;

+

+ public static void main(String[] args) throws OrtException, FrameRecorder.Exception {

+

+ nu.pattern.OpenCV.loadLocally();

+ img= new Mat();

+

+ String OS = System.getProperty("os.name").toLowerCase();

+ if (OS.contains("win")) {

+ System.load(ClassLoader.getSystemResource("lib/opencv_videoio_ffmpeg470_64.dll").getPath());

+ }

+ String model_path = "src\\main\\resources\\model\\yolov7-tiny.onnx";

+

+ String[] labels = {

+ "person", "bicycle", "car", "motorcycle", "airplane", "bus", "train",

+ "truck", "boat", "traffic light", "fire hydrant", "stop sign", "parking meter",

+ "bench", "bird", "cat", "dog", "horse", "sheep", "cow", "elephant", "bear",

+ "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase",

+ "frisbee", "skis", "snowboard", "sports ball", "kite", "baseball bat",

+ "baseball glove", "skateboard", "surfboard", "tennis racket", "bottle",

+ "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple",

+ "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut",

+ "cake", "chair", "couch", "potted plant", "bed", "dining table", "toilet",

+ "tv", "laptop", "mouse", "remote", "keyboard", "cell phone", "microwave",

+ "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors",

+ "teddy bear", "hair drier", "toothbrush"};

+

+ // Load the ONNX model

+ OrtEnvironment environment = OrtEnvironment.getEnvironment();

+ OrtSession.SessionOptions sessionOptions = new OrtSession.SessionOptions();

+

+ //sessionOptions.addCUDA(0);

+

+ OrtSession session = environment.createSession(model_path, sessionOptions);

+ // Print basic information about the model inputs

+ session.getInputInfo().keySet().forEach(x -> {

+ try {

+ System.out.println("input name = " + x);

+ System.out.println(session.getInputInfo().get(x).getInfo().toString());

+ } catch (OrtException e) {

+ throw new RuntimeException(e);

+ }

+ });

+

+ ODConfig odConfig = new ODConfig();

+ VideoCapture video = new VideoCapture();

+

+ video.open(0); // Open camera 0 on this machine

+

+ if (!video.isOpened()) {

+ video.open("video/car3.mp4");

+ }

+

+ int videoWidth = (int)video.get(Videoio.CAP_PROP_FRAME_WIDTH);

+ int heightWidth = (int)video.get(Videoio.CAP_PROP_FRAME_HEIGHT);

+ int frameRate = (int)video.get(Videoio.CAP_PROP_FPS);

+

+ int minDwDh = Math.min(videoWidth, heightWidth);

+ int thickness = minDwDh / ODConfig.lineThicknessRatio;

+

+ int detect_skip = 4;

+

+ // Frame-skip counter

+ int detect_skip_index = 1;

+

+ // Inference result of the most recently processed frame, reused while frames are skipped

+ float[][] outputData = null;

+

+ // The current (latest) frame. The previous frame could also be cached here.

+ Mat image;

+

+ Letterbox letterbox = new Letterbox();

+ OnnxTensor tensor;

+

+ Thread t = new Thread(new Runnable(){

+ @Override

+ public void run() {

+

+ // 720p video at 20-25 fps works best; tune picture quality yourself, ffmpeg exposes many parameters

+ FrameRecorder recorder = new FFmpegFrameRecorder("rtmp://192.168.167.141:30780/live/hiknvr-86", videoWidth, heightWidth, 0);

+

+ recorder.setVideoCodec(avcodec.AV_CODEC_ID_H264);

+ recorder.setFormat("flv");

+ recorder.setFrameRate(frameRate);

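+ recorder.setVideoBitrate(1024); // Sharpness, blur and blocking artifacts depend on the bitrate: a higher bitrate gives a sharper picture but higher latency; adjust to your server's bandwidth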

+ recorder.setVideoOption("tune", "zerolatency");

+ recorder.setVideoOption("preset", "ultrafast");

+ recorder.setOption("buffer_size", "1000k");

+ recorder.setOption("max_delay", "500000");

+ recorder.setOption("rtmp_buffer", "100");

+ recorder.setOption("rtmp_live", "live");

+

+ recorder.setGopSize(50);

+ try {

+ recorder.start();

+ } catch (FrameRecorder.Exception e) {

+ throw new RuntimeException(e);

+ }

+ OpenCVFrameConverter.ToMat converterToMat = new OpenCVFrameConverter.ToMat();

+ while(true){

+ Frame frame = converterToMat.convert(img.clone());

+ try {

+ Thread.sleep(1000L / Math.max(frameRate, 1)); // guard against a reported frame rate of 0

+ recorder.record(frame);

+ } catch (Exception e) {

+ throw new RuntimeException(e);

+ }

+ }

+

+

+ }

+ });

+ // Start the streaming thread

+ t.start();

+

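+ // Multithreading and a GPU can raise the frame rate: one thread pulls the stream, another runs model inference, and they exchange data through a variable or a queue. This example runs inference on a single thread only.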

+ while (video.read(img)) {

+ if ((detect_skip_index % detect_skip == 0) || outputData == null){

+ image = img.clone();

+ image = letterbox.letterbox(image);

+ Imgproc.cvtColor(image, image, Imgproc.COLOR_BGR2RGB);

+

+ image.convertTo(image, CvType.CV_32FC1, 1. / 255);

+ float[] whc = new float[3 * 640 * 640];

+ image.get(0, 0, whc);

+ float[] chw = ImageUtil.whc2cwh(whc);

+

+ detect_skip_index = 1;

+

+ FloatBuffer inputBuffer = FloatBuffer.wrap(chw);

+ tensor = OnnxTensor.createTensor(environment, inputBuffer, new long[]{1, 3, 640, 640});

+

+ HashMap<String, OnnxTensor> stringOnnxTensorHashMap = new HashMap<>();

+ stringOnnxTensorHashMap.put(session.getInputInfo().keySet().iterator().next(), tensor);

+ OrtSession.Result output = session.run(stringOnnxTensorHashMap);

+

+ // Get the result and cache it

+ outputData = (float[][]) output.get(0).getValue();

+ }else{

+ detect_skip_index = detect_skip_index + 1;

+ }

+

+ for(float[] x : outputData){

+

+ Point topLeft = new Point((x[1] - letterbox.getDw()) / letterbox.getRatio(), (x[2] - letterbox.getDh()) / letterbox.getRatio());

+ Point bottomRight = new Point((x[3] - letterbox.getDw()) / letterbox.getRatio(), (x[4] - letterbox.getDh()) / letterbox.getRatio());

+ Scalar color = new Scalar(odConfig.getOtherColor((int) x[0]));

+

+ Imgproc.rectangle(img, topLeft, bottomRight, color, thickness);

+ // Draw the label text on the box

+ // String boxName = labels[(int) x[0]];

+ // Point boxNameLoc = new Point((x[1] - letterbox.getDw()) / letterbox.getRatio(), (x[2] - letterbox.getDh()) / letterbox.getRatio() - 3);

+ // Imgproc.putText(img, boxName, boxNameLoc, fontFace, 0.7, color, thickness);

+

+ }

+ }

+ video.release();

+ System.exit(0);

+

+ }

+}

diff --git a/src/main/java/cn/ck/utils/Test1.java b/src/main/java/cn/ck/utils/Test1.java

new file mode 100644

index 0000000000000000000000000000000000000000..f94ee1c86585a709a21904468c589b4acc89bf02

--- /dev/null

+++ b/src/main/java/cn/ck/utils/Test1.java

@@ -0,0 +1,106 @@

+package cn.ck.utils;

+

+public class Test1 {

+

+ /*public Test(String modelPath, String labelPath, float confThreshold, float nmsThreshold, int gpuDeviceId) throws OrtException, IOException {

+ super(modelPath, labelPath, confThreshold, nmsThreshold, gpuDeviceId);

+ }

+

+ @Override

+ public List run(Mat img) throws OrtException {

+

+ float orgW = (float) img.size().width;

+ float orgH = (float) img.size().height;

+

+ float gain = Math.min((float) INPUT_SIZE / orgW, (float) INPUT_SIZE / orgH);

+ float padW = (INPUT_SIZE - orgW * gain) * 0.5f;

+ float padH = (INPUT_SIZE - orgH * gain) * 0.5f;

+

+

+ Map inputContainer = this.preprocess(img);

+

+

+ float[][] predictions;

+

+ OrtSession.Result results = this.session.run(inputContainer);

+ predictions = ((float[][][]) results.get(0).getValue())[0];

+

+

+ return postprocess(predictions, orgW, orgH, padW, padH, gain);

+ }

+

+

+ public Map preprocess(Mat img) throws OrtException {

+