基本信息

+ +**发布者(Publisher):Huawei** + +**应用领域(Application Domain):Recommendation** + +**版本(Version):1.1** + +**修改时间(Modified) :2021.10.01** + +**大小(Size)**_**:324KB** + +**框架(Framework):TensorFlow 2.4.1** + +**模型格式(Model Format):ckpt** + +**精度(Precision):Mixed** + +**处理器(Processor):昇腾910** + +**应用级别(Categories):Benchmark** + +**描述(Description):基于TensorFlow框架的推荐网络训练代码** + +概述

+ +- 开源项目Recommender System with TF2.0主要是对经典的推荐算法论文进行复现,包括Matching(召回)(MF、BPR、SASRec等)、Ranking(排序)(DeepFM、DCN等)。 + +- 参考论文: + + [https://arxiv.org/pdf/1706.06978.pdf](https://arxiv.org/pdf/1706.06978.pdf) + +- 参考实现: + + [https://github.com/ZiyaoGeng/Recommender-System-with-TF2.0/tree/master/DIN](https://github.com/ZiyaoGeng/Recommender-System-with-TF2.0/tree/master/DIN) + +- 适配昇腾 AI 处理器的实现: + + skip + +- 通过Git获取对应commit\_id的代码方法如下: + + ``` + git clone {repository_url} # 克隆仓库的代码 + cd {repository_name} # 切换到模型的代码仓目录 + git checkout {branch} # 切换到对应分支 + git reset --hard {commit_id} # 代码设置到对应的commit_id + cd {code_path} # 切换到模型代码所在路径,若仓库下只有该模型,则无需切换 + ``` + +## 默认配置 +- 网络结构 + +- 训练超参(单卡): + - Batch size: 4096 + - Train epochs:5 + + +## 支持特性 + +| 特性列表 | 是否支持 | +| ---------- | -------- | +| 分布式训练 | 否 | +| 混合精度 | 是 | +| 数据并行 | 否 | + + +## 混合精度训练 + +昇腾910 AI处理器提供自动混合精度功能,可以针对全网中float32数据类型的算子,按照内置的优化策略,自动将部分float32的算子降低精度到float16,从而在精度损失很小的情况下提升系统性能并减少内存使用。 + +## 开启混合精度 +相关代码示例。 + +``` +config_proto = tf.ConfigProto(allow_soft_placement=True) + custom_op = config_proto.graph_options.rewrite_options.custom_optimizers.add() + custom_op.name = 'NpuOptimizer' + custom_op.parameter_map["use_off_line"].b = True + custom_op.parameter_map["precision_mode"].s = tf.compat.as_bytes("allow_mix_precision") + config_proto.graph_options.rewrite_options.remapping = RewriterConfig.OFF + session_config = npu_config_proto(config_proto=config_proto) +``` + +训练环境准备

+ +- 硬件环境和运行环境准备请参见《[CANN软件安装指南](https://support.huawei.com/enterprise/zh/ascend-computing/cann-pid-251168373?category=installation-update)》 +- 运行以下命令安装依赖。 +``` +pip3 install requirements.txt +``` +说明:依赖配置文件requirements.txt文件位于模型的根目录 + + +快速上手

+ +## 数据集准备 + +1. 数据集请用户自行获取。 + +## 模型训练 +- 单击“立即下载”,并选择合适的下载方式下载源码包。 +- 开始训练。 + + 1. 启动训练之前,首先要配置程序运行相关环境变量。 + + 环境变量配置信息参见: + + [Ascend 910训练平台环境变量设置](https://gitee.com/ascend/modelzoo/wikis/Ascend%20910%E8%AE%AD%E7%BB%83%E5%B9%B3%E5%8F%B0%E7%8E%AF%E5%A2%83%E5%8F%98%E9%87%8F%E8%AE%BE%E7%BD%AE?sort_id=3148819) + + + 2. 单卡训练 + + 2.1 设置单卡训练参数(脚本位于DIN_ID2641_for_TensorFlow2.X/test/train_performance_1p.sh),示例如下。 + + + ``` + batch_size=4096 + #训练step + train_epochs=5 + ``` + + 2.2 单卡训练指令(脚本位于DIN_ID2641_for_TensorFlow2.X/test) + + ``` + 于终端中运行export ASCEND_DEVICE_ID=0 (0~7)以指定单卡训练时使用的卡 + bash train_full_1p.sh --data_path=xx + 数据集应有如下结构(数据切分可能不同) + | + ├─meta.pkl + ├─remap.pkl + ├─reviews.pkl + + ``` + +迁移学习指导

+ +- 数据集准备。 + + 1. 获取数据。 + 请参见“快速上手”中的数据集准备 + +- 模型训练 + + 请参考“快速上手”章节 + +高级参考

+ +## 脚本和示例代码 + + ├── README.md //说明文档 + ├── requirements.txt //依赖 + ├── train.py //主脚本 + ├── utils.py + ├── model.py + ├── modules.py + ├── test + | |—— train_full_1p.sh //单卡训练脚本 + | |—— train_performance_1p.sh //单卡训练脚本 + +## 脚本参数 + +``` +batch_size 训练batch_size +train_epochs 总训练epoch数 +其余参数请在utils.py中配置flag默认值 +``` + +## 训练过程 + +通过“模型训练”中的训练指令启动单卡训练。 +将训练脚本(train_full_1p.sh)中的data_path设置为训练数据集的路径。具体的流程参见“模型训练”的示例。 \ No newline at end of file diff --git a/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/__init__.py b/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/__init__.py new file mode 100644 index 0000000000000000000000000000000000000000..4b6fedbd5de92e1613326db46a44e19e9eb0a600 --- /dev/null +++ b/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/__init__.py @@ -0,0 +1,36 @@ +# +# Copyright 2017 The TensorFlow Authors. All Rights Reserved. +# +# Licensed under the Apache License, Version 2.0 (the "License"); +# you may not use this file except in compliance with the License. +# You may obtain a copy of the License at +# +# http://www.apache.org/licenses/LICENSE-2.0 +# +# Unless required by applicable law or agreed to in writing, software +# distributed under the License is distributed on an "AS IS" BASIS, +# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. +# See the License for the specific language governing permissions and +# limitations under the License. +# ============================================================================ +# Copyright 2021 Huawei Technologies Co., Ltd +# +# Licensed under the Apache License, Version 2.0 (the "License"); +# you may not use this file except in compliance with the License. +# You may obtain a copy of the License at +# +# http://www.apache.org/licenses/LICENSE-2.0 +# +# Unless required by applicable law or agreed to in writing, software +# distributed under the License is distributed on an "AS IS" BASIS, +# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. +# See the License for the specific language governing permissions and +# limitations under the License. +# +""" +Created on May 23, 2020 + +model: Deep interest network for click-through rate prediction + +@author: Ziyao Geng +""" \ No newline at end of file diff --git a/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/model.py b/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/model.py new file mode 100644 index 0000000000000000000000000000000000000000..57815e06357e4615e474515a3ff8a8cc429c9eb9 --- /dev/null +++ b/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/model.py @@ -0,0 +1,153 @@ +# +# Copyright 2017 The TensorFlow Authors. All Rights Reserved. +# +# Licensed under the Apache License, Version 2.0 (the "License"); +# you may not use this file except in compliance with the License. +# You may obtain a copy of the License at +# +# http://www.apache.org/licenses/LICENSE-2.0 +# +# Unless required by applicable law or agreed to in writing, software +# distributed under the License is distributed on an "AS IS" BASIS, +# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. +# See the License for the specific language governing permissions and +# limitations under the License. +# ============================================================================ +# Copyright 2021 Huawei Technologies Co., Ltd +# +# Licensed under the Apache License, Version 2.0 (the "License"); +# you may not use this file except in compliance with the License. +# You may obtain a copy of the License at +# +# http://www.apache.org/licenses/LICENSE-2.0 +# +# Unless required by applicable law or agreed to in writing, software +# distributed under the License is distributed on an "AS IS" BASIS, +# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. +# See the License for the specific language governing permissions and +# limitations under the License. +# +""" +Created on May 23, 2020 + +model: Deep interest network for click-through rate prediction + +@author: Ziyao Geng +""" +import tensorflow as tf + +from tensorflow.keras import Model +from tensorflow.keras.layers import Embedding, Dense, BatchNormalization, Input, PReLU, Dropout +from tensorflow.keras.regularizers import l2 + +from modules import * + + +class DIN(Model): + def __init__(self, feature_columns, behavior_feature_list, att_hidden_units=(80, 40), + ffn_hidden_units=(80, 40), att_activation='prelu', ffn_activation='prelu', maxlen=40, dnn_dropout=0., embed_reg=1e-4): + """ + DIN + :param feature_columns: A list. dense_feature_columns + sparse_feature_columns + :param behavior_feature_list: A list. the list of behavior feature names + :param att_hidden_units: A tuple or list. Attention hidden units. + :param ffn_hidden_units: A tuple or list. Hidden units list of FFN. + :param att_activation: A String. The activation of attention. + :param ffn_activation: A String. Prelu or Dice. + :param maxlen: A scalar. Maximum sequence length. + :param dropout: A scalar. The number of Dropout. + :param embed_reg: A scalar. The regularizer of embedding. + """ + super(DIN, self).__init__() + self.maxlen = maxlen + + self.dense_feature_columns, self.sparse_feature_columns = feature_columns + + # len + self.other_sparse_len = len(self.sparse_feature_columns) - len(behavior_feature_list) + self.dense_len = len(self.dense_feature_columns) + self.behavior_num = len(behavior_feature_list) + + # other embedding layers + self.embed_sparse_layers = [Embedding(input_dim=feat['feat_num'], + input_length=1, + output_dim=feat['embed_dim'], + embeddings_initializer='random_uniform', + embeddings_regularizer=l2(embed_reg)) + for feat in self.sparse_feature_columns + if feat['feat'] not in behavior_feature_list] + # behavior embedding layers, item id and category id + self.embed_seq_layers = [Embedding(input_dim=feat['feat_num'], + input_length=1, + output_dim=feat['embed_dim'], + embeddings_initializer='random_uniform', + embeddings_regularizer=l2(embed_reg)) + for feat in self.sparse_feature_columns + if feat['feat'] in behavior_feature_list] + + # attention layer + self.attention_layer = Attention_Layer(att_hidden_units, att_activation) + + self.bn = BatchNormalization(trainable=True) + # ffn + self.ffn = [Dense(unit, activation=PReLU() if ffn_activation == 'prelu' else Dice())\ + for unit in ffn_hidden_units] + self.dropout = Dropout(dnn_dropout) + self.dense_final = Dense(1) + + def call(self, inputs): + # dense_inputs and sparse_inputs is empty + # seq_inputs (None, maxlen, behavior_num) + # item_inputs (None, behavior_num) + dense_inputs, sparse_inputs, seq_inputs, item_inputs = inputs + # attention ---> mask, if the element of seq_inputs is equal 0, it must be filled in. + mask = tf.cast(tf.not_equal(seq_inputs[:, :, 0], 0), dtype=tf.float32) # (None, maxlen) + # other + other_info = dense_inputs + for i in range(self.other_sparse_len): + other_info = tf.concat([other_info, self.embed_sparse_layers[i](sparse_inputs[:, i])], axis=-1) + + # seq, item embedding and category embedding should concatenate + seq_embed = tf.concat([self.embed_seq_layers[i](seq_inputs[:, :, i]) for i in range(self.behavior_num)], axis=-1) + item_embed = tf.concat([self.embed_seq_layers[i](item_inputs[:, i]) for i in range(self.behavior_num)], axis=-1) + + # att + user_info = self.attention_layer([item_embed, seq_embed, seq_embed, mask]) # (None, d * 2) + + # concat user_info(att hist), cadidate item embedding, other features + if self.dense_len > 0 or self.other_sparse_len > 0: + info_all = tf.concat([user_info, item_embed, other_info], axis=-1) + else: + info_all = tf.concat([user_info, item_embed], axis=-1) + + info_all = self.bn(info_all) + + # ffn + for dense in self.ffn: + info_all = dense(info_all) + + info_all = self.dropout(info_all) + outputs = tf.nn.sigmoid(self.dense_final(info_all)) + return outputs + + def summary(self): + dense_inputs = Input(shape=(self.dense_len, ), dtype=tf.float32) + sparse_inputs = Input(shape=(self.other_sparse_len, ), dtype=tf.int32) + seq_inputs = Input(shape=(self.maxlen, self.behavior_num), dtype=tf.int32) + item_inputs = Input(shape=(self.behavior_num, ), dtype=tf.int32) + tf.keras.Model(inputs=[dense_inputs, sparse_inputs, seq_inputs, item_inputs], + outputs=self.call([dense_inputs, sparse_inputs, seq_inputs, item_inputs])).summary() + + +def test_model(): + dense_features = [{'feat': 'a'}, {'feat': 'b'}] + sparse_features = [{'feat': 'item_id', 'feat_num': 100, 'embed_dim': 8}, + {'feat': 'cate_id', 'feat_num': 100, 'embed_dim': 8}, + {'feat': 'adv_id', 'feat_num': 100, 'embed_dim': 8}] + behavior_list = ['item_id', 'cate_id'] + features = [dense_features, sparse_features] + model = DIN(features, behavior_list) + model.summary() + + +# test_model() \ No newline at end of file diff --git a/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/modelzoo_level.txt b/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/modelzoo_level.txt new file mode 100644 index 0000000000000000000000000000000000000000..a829ab59b97a1022dd6fc33b59b7ae0d55009432 --- /dev/null +++ b/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/modelzoo_level.txt @@ -0,0 +1,3 @@ +FuncStatus:OK +PerfStatus:NOK +PrecisionStatus:OK \ No newline at end of file diff --git a/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/modules.py b/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/modules.py new file mode 100644 index 0000000000000000000000000000000000000000..9ed3b07cac7943138a0f4f56f976a8dab427e19d --- /dev/null +++ b/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/modules.py @@ -0,0 +1,94 @@ +# +# Copyright 2017 The TensorFlow Authors. All Rights Reserved. +# +# Licensed under the Apache License, Version 2.0 (the "License"); +# you may not use this file except in compliance with the License. +# You may obtain a copy of the License at +# +# http://www.apache.org/licenses/LICENSE-2.0 +# +# Unless required by applicable law or agreed to in writing, software +# distributed under the License is distributed on an "AS IS" BASIS, +# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. +# See the License for the specific language governing permissions and +# limitations under the License. +# ============================================================================ +# Copyright 2021 Huawei Technologies Co., Ltd +# +# Licensed under the Apache License, Version 2.0 (the "License"); +# you may not use this file except in compliance with the License. +# You may obtain a copy of the License at +# +# http://www.apache.org/licenses/LICENSE-2.0 +# +# Unless required by applicable law or agreed to in writing, software +# distributed under the License is distributed on an "AS IS" BASIS, +# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. +# See the License for the specific language governing permissions and +# limitations under the License. +# +""" +Created on Oct 26, 2020 + +modules of DIN: attention mechanism + +@author: Ziyao Geng +""" + + +import tensorflow as tf +from tensorflow.keras.regularizers import l2 +from tensorflow.keras.layers import Layer, BatchNormalization, Dense + + +class Attention_Layer(Layer): + def __init__(self, att_hidden_units, activation='prelu'): + """ + """ + super(Attention_Layer, self).__init__() + self.att_dense = [Dense(unit, activation=activation) for unit in att_hidden_units] + self.att_final_dense = Dense(1) + + def call(self, inputs): + # query: candidate item (None, d * 2), d is the dimension of embedding + # key: hist items (None, seq_len, d * 2) + # value: hist items (None, seq_len, d * 2) + # mask: (None, seq_len) + q, k, v, mask = inputs + q = tf.tile(q, multiples=[1, k.shape[1]]) # (None, seq_len * d * 2) + q = tf.reshape(q, shape=[-1, k.shape[1], k.shape[2]]) # (None, seq_len, d * 2) + + # q, k, out product should concat + info = tf.concat([q, k, q - k, q * k], axis=-1) + + # dense + for dense in self.att_dense: + info = dense(info) + + outputs = self.att_final_dense(info) # (None, seq_len, 1) + outputs = tf.squeeze(outputs, axis=-1) # (None, seq_len) + + paddings = tf.ones_like(outputs) * (-2 ** 32 + 1) # (None, seq_len) + outputs = tf.where(tf.equal(mask, 0), paddings, outputs) # (None, seq_len) + + # softmax + outputs = tf.nn.softmax(logits=outputs) # (None, seq_len) + outputs = tf.expand_dims(outputs, axis=1) # None, 1, seq_len) + + outputs = tf.matmul(outputs, v) # (None, 1, d * 2) + outputs = tf.squeeze(outputs, axis=1) + + return outputs + + +class Dice(Layer): + def __init__(self): + super(Dice, self).__init__() + self.bn = BatchNormalization(center=False, scale=False) + self.alpha = self.add_weight(shape=(), dtype=tf.float32, name='alpha') + + def call(self, x): + x_normed = self.bn(x) + x_p = tf.sigmoid(x_normed) + + return self.alpha * (1.0 - x_p) * x + x_p * x \ No newline at end of file diff --git a/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/npu_convert_dropout.py b/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/npu_convert_dropout.py new file mode 100644 index 0000000000000000000000000000000000000000..95f8689ce4da26c08f18a0fcb49c42eb7f1c8b06 --- /dev/null +++ b/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/npu_convert_dropout.py @@ -0,0 +1,54 @@ +#!/usr/bin/env python3 +# Licensed under the Apache License, Version 2.0 (the "License"); +# you may not use this file except in compliance with the License. +# You may obtain a copy of the License at +# +# http://www.apache.org/licenses/LICENSE-2.0 +# +# Unless required by applicable law or agreed to in writing, software +# distributed under the License is distributed on an "AS IS" BASIS, +# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. +# See the License for the specific language governing permissions and +# limitations under the License. +# ============================================================================ +# Copyright 2021 Huawei Technologies Co., Ltd +# +# Licensed under the Apache License, Version 2.0 (the "License"); +# you may not use this file except in compliance with the License. +# You may obtain a copy of the License at +# +# http://www.apache.org/licenses/LICENSE-2.0 +# +# Unless required by applicable law or agreed to in writing, software +# distributed under the License is distributed on an "AS IS" BASIS, +# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. +# See the License for the specific language governing permissions and +# limitations under the License. +# + +from keras import backend +from keras.utils import control_flow_util +from keras.layers.core import Dropout +from tensorflow.python.ops import array_ops +from tensorflow.python.ops import nn +import npu_ops + +def dropout_call(self, inputs, training=None): + """Make Keras Dropout to execute NPU dropout""" + if training is None: + training = backend.learning_phase() + + def dropped_inputs(): + return npu_ops.dropout( + inputs, + noise_shape=self._get_noise_shape(inputs), + seed=self.seed, + keep_prob=1 - self.rate) + + output = control_flow_util.smart_cond(training, + dropped_inputs, + lambda : array_ops.identity(inputs)) + + return output + +Dropout.call = dropout_call diff --git a/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/npu_ops.py b/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/npu_ops.py new file mode 100644 index 0000000000000000000000000000000000000000..fa6f8f211c19e1bce9d78a90c7c11b6121efdbd7 --- /dev/null +++ b/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/npu_ops.py @@ -0,0 +1,256 @@ +# Copyright 2016 The TensorFlow Authors. All Rights Reserved. +# +# Licensed under the Apache License, Version 2.0 (the "License"); +# you may not use this file except in compliance with the License. +# You may obtain a copy of the License at +# +# http://www.apache.org/licenses/LICENSE-2.0 +# +# Unless required by applicable law or agreed to in writing, software +# distributed under the License is distributed on an "AS IS" BASIS, +# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. +# See the License for the specific language governing permissions and +# limitations under the License. +# ============================================================================== +# ============================================================================ +# Copyright 2021 Huawei Technologies Co., Ltd +# +# Licensed under the Apache License, Version 2.0 (the "License"); +# you may not use this file except in compliance with the License. +# You may obtain a copy of the License at +# +# http://www.apache.org/licenses/LICENSE-2.0 +# +# Unless required by applicable law or agreed to in writing, software +# distributed under the License is distributed on an "AS IS" BASIS, +# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. +# See the License for the specific language governing permissions and +# limitations under the License. +# ============================================================================ + +"""Ops for collective operations implemented using hccl.""" +from __future__ import absolute_import +from __future__ import division +from __future__ import print_function + + +import numbers +from tensorflow.python.ops import array_ops +from tensorflow.python.framework import tensor_shape +from tensorflow.python.framework import ops +from tensorflow.python.eager import context + +from npu_device import gen_npu_ops + + +DEFAULT_GRAPH_SEED = 87654321 +_MAXINT32 = 2**31 - 1 +def LARSV2(input_weight, + input_grad, + weight_decay, + learning_rate, + hyperpara=0.001, + epsilon=0.00001, + use_clip=False, + name=None): + if context.executing_eagerly(): + raise RuntimeError("tf.LARSV2() is not compatible with " + "eager execution.") + + return gen_npu_ops.lars_v2(input_weight=input_weight, + input_grad=input_grad, + weight_decay=weight_decay, + learning_rate=learning_rate, + hyperpara=hyperpara, + epsilon=epsilon, + use_clip=use_clip, + name=name) + + +def _truncate_seed(seed): + return seed % _MAXINT32 # Truncate to fit into 32-bit integer + +def get_seed(op_seed): + global_seed = ops.get_default_graph().seed + + if global_seed is not None: + if op_seed is None: + op_seed = ops.get_default_graph()._last_id + + seeds = _truncate_seed(global_seed), _truncate_seed(op_seed) + else: + if op_seed is not None: + seeds = DEFAULT_GRAPH_SEED, _truncate_seed(op_seed) + else: + seeds = None, None + # Avoid (0, 0) as the C++ ops interpret it as nondeterminism, which would + # be unexpected since Python docs say nondeterminism is (None, None). + if seeds == (0, 0): + return (0, _MAXINT32) + return seeds + +def _get_noise_shape(x, noise_shape): + # If noise_shape is none return immediately. + if noise_shape is None: + return array_ops.shape(x) + + try: + # Best effort to figure out the intended shape. + # If not possible, let the op to handle it. + # In eager mode exception will show up. + noise_shape_ = tensor_shape.as_shape(noise_shape) + except (TypeError, ValueError): + return noise_shape + + if x.shape.dims is not None and len(x.shape.dims) == len(noise_shape_.dims): + new_dims = [] + for i, dim in enumerate(x.shape.dims): + if noise_shape_.dims[i].value is None and dim.value is not None: + new_dims.append(dim.value) + else: + new_dims.append(noise_shape_.dims[i].value) + return tensor_shape.TensorShape(new_dims) + + return noise_shape + +def dropout(x, keep_prob, noise_shape=None, seed=None, name=None): + """The gradient for `gelu`. + + Args: + x: A tensor with type is float. + keep_prob: A tensor, float, rate of every element reserved. + noise_shape: A 1-D tensor, with type int32, shape of keep/drop what random + generated. + seed: Random seed. + name: Layer name. + + Returns: + A tensor. + """ + if context.executing_eagerly(): + raise RuntimeError("tf.dropout() is not compatible with " + "eager execution.") + x = ops.convert_to_tensor(x, name="x") + if not x.dtype.is_floating: + raise ValueError("x has to be a floating point tensor since it's going to" + " be scaled. Got a %s tensor instead." % x.dtype) + if isinstance(keep_prob, numbers.Real) and not 0 < keep_prob <= 1: + raise ValueError("keep_prob must be a scalar tensor or a float in the " + "range (0, 1], got %g" % keep_prob) + if isinstance(keep_prob, float) and keep_prob == 1: + return x + seed, seed2 = get_seed(seed) + noise_shape = _get_noise_shape(x, noise_shape) + gen_out = gen_npu_ops.drop_out_gen_mask(noise_shape, keep_prob, seed, seed2, name) + result = gen_npu_ops.drop_out_do_mask(x, gen_out, keep_prob, name) + return result + +@ops.RegisterGradient("DropOutDoMask") +def _DropOutDoMaskGrad(op, grad): + result = gen_npu_ops.drop_out_do_mask(grad, op.inputs[1], op.inputs[2]) + return [result, None, None] + +def basic_lstm_cell(x, h, c, w, b, keep_prob, forget_bias, state_is_tuple, + activation, name=None): + if context.executing_eagerly(): + raise RuntimeError("tf.basic_lstm_cell() is not compatible with " + "eager execution.") + x = ops.convert_to_tensor(x, name="x") + h = ops.convert_to_tensor(h, name="h") + c = ops.convert_to_tensor(c, name="c") + w = ops.convert_to_tensor(w, name="w") + b = ops.convert_to_tensor(b, name="b") + result = gen_npu_ops.basic_lstm_cell(x, h, c, w, b, keep_prob, forget_bias, state_is_tuple, + activation, name) + return result + +@ops.RegisterGradient("BasicLSTMCell") +def basic_lstm_cell_grad(op, dct, dht, dit, djt, dft, dot, dtanhct): + + dgate, dct_1 = gen_npu_ops.basic_lstm_cell_c_state_grad(op.inputs[2], dht, dct, op.outputs[2], op.outputs[3], op.outputs[4], op.outputs[5], op.outputs[6], forget_bias=op.get_attr("forget_bias"), activation=op.get_attr("activation")) + dw, db = gen_npu_ops.basic_lstm_cell_weight_grad(op.inputs[0], op.inputs[1], dgate) + dxt, dht = gen_npu_ops.basic_lstm_cell_input_grad(dgate, op.inputs[3], keep_prob=op.get_attr("keep_prob")) + + return [dxt, dht, dct_1, dw, db] + +def adam_apply_one_assign(input0, input1, input2, input3, input4, + mul0_x, mul1_x, mul2_x, mul3_x, add2_y, name=None): + if context.executing_eagerly(): + raise RuntimeError("tf.adam_apply_one_assign() is not compatible with " + "eager execution.") + result = gen_npu_ops.adam_apply_one_assign(input0, input1, input2, input3, input4, + mul0_x, mul1_x, mul2_x, mul3_x, add2_y,name) + return result + +def adam_apply_one_with_decay_assign(input0, input1, input2, input3, input4, + mul0_x, mul1_x, mul2_x, mul3_x, mul4_x, add2_y, name=None): + if context.executing_eagerly(): + raise RuntimeError("tf.adam_apply_one_with_decay_assign() is not compatible with " + "eager execution.") + result = gen_npu_ops.adam_apply_one_with_decay_assign(input0, input1, input2, input3, input4, + mul0_x, mul1_x, mul2_x, mul3_x, mul4_x, add2_y, name) + return result + +@ops.RegisterGradient("DynamicGruV2") +def dynamic_gru_v2_grad(op, dy, doutput_h, dupdate, dreset, dnew, dhidden_new): + (x, weight_input, weight_hidden, bias_input, bias_hidden, seq_length, init_h) = op.inputs + (y, output_h, update, reset, new, hidden_new) = op.outputs + (dw_input, dw_hidden, db_input, db_hidden, dx, dh_prev) = gen_npu_ops.dynamic_gru_v2_grad(x, weight_input, weight_hidden, y, init_h, output_h, dy, doutput_h, update, reset, new, hidden_new, direction=op.get_attr("direction"), cell_depth=op.get_attr("cell_depth"), keep_prob=op.get_attr("keep_prob"), cell_clip=op.get_attr("cell_clip"), num_proj=op.get_attr("num_proj"), time_major=op.get_attr("time_major"), gate_order=op.get_attr("gate_order"), reset_after=op.get_attr("reset_after")) + + return (dx, dw_input, dw_hidden, db_input, db_hidden, seq_length, dh_prev) + +@ops.RegisterGradient("DynamicRnn") +def dynamic_rnn_grad(op, dy, dh, dc, di, dj, df, do, dtanhc): + (x, w, b, seq_length, init_h, init_c) = op.inputs + (y, output_h, output_c, i, j, f, o, tanhc) = op.outputs + (dw, db, dx, dh_prev, dc_prev) = gen_npu_ops.dynamic_rnn_grad(x, w, b, y, init_h[-1], init_c[-1], output_h, output_c, dy, dh[-1], dc[-1], i, j, f, o, tanhc, cell_type=op.get_attr("cell_type"), direction=op.get_attr("direction"), cell_depth=op.get_attr("cell_depth"), use_peephole=op.get_attr("use_peephole"), keep_prob=op.get_attr("keep_prob"), cell_clip=op.get_attr("cell_clip"), num_proj=op.get_attr("num_proj"), time_major=op.get_attr("time_major"), forget_bias=op.get_attr("forget_bias")) + + return (dx, dw, db, seq_length, dh_prev, dc_prev) + +def lamb_apply_optimizer_assign(input0,input1,input2,input3,mul0_x,mul1_x,mul2_x, + mul3_x,add2_y,steps,do_use_weight,weight_decay_rate,name=None): + if context.executing_eagerly(): + raise RuntimeError("tf.lamb_apply_optimizer_assign() is not compatible with eager execution") + update,nextv,nextm=gen_npu_ops.lamb_apply_optimizer_assign(input0,input1,input2,input3,mul0_x,mul1_x,mul2_x, + mul3_x,add2_y,steps,do_use_weight,weight_decay_rate,name) + return update,nextv,nextm + +def lamb_apply_weight_assign(input0,input1,input2,input3,input4,name=None): + if context.executing_eagerly(): + raise RuntimeError("tf.lamb_apply_weight_assign() is not compatible with eager execution") + result = gen_npu_ops.lamb_apply_weight_assign(input0,input1,input2,input3,input4,name) + return result + +def dropout_v3(x, keep_prob, noise_shape=None, seed=None, name=None): + """ The gradient for gelu + + Args: + x: A tensor with type is float + keep_prob: A tensor, float, rate of every element reserved + noise_shape: A 1-D tensor, with type int32, shape of keep/drop what random generated. + seed: Random seed. + name: Layer name. + + Returns: + A tensor. + """ + x = ops.convert_to_tensor(x,name="x") + if not x.dtype.is_floating: + raise ValueError("x has to be a floating point tensor since it's going to be scaled. Got a %s tensor instead." % x.dtype) + + if isinstance(keep_prob,numbers.Real) and not 0 < keep_prob <=1: + raise ValueError("Keep_prob must be a scalar tensor or a float in the range (0,1], got %g" % keep_prob) + + if isinstance(keep_prob,float) and keep_prob==1: + return x + + seed, seed2 = get_seed(seed) + noise_shape = _get_noise_shape(x,noise_shape) + gen_out = gen_npu_ops.drop_out_gen_mask_v3(noise_shape,keep_prob,seed,seed2,name) + result = gen_npu_ops.drop_out_do_mask_v3(x, gen_out, keep_prob, name) + return result + +@ops.RegisterGradient("DropOutDoMaskV3") +def _DropOutDoMaskV3Grad(op,grad): + result = gen_npu_ops.drop_out_do_mask_v3(grad, op.inputs[1], op.inputs[2]) + return [result, None, None] \ No newline at end of file diff --git a/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/preprocess/1_convert_pd.py b/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/preprocess/1_convert_pd.py new file mode 100644 index 0000000000000000000000000000000000000000..b5047be53e8e8062942c429b0b449547e61da453 --- /dev/null +++ b/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/preprocess/1_convert_pd.py @@ -0,0 +1,72 @@ +# +# Copyright 2017 The TensorFlow Authors. All Rights Reserved. +# +# Licensed under the Apache License, Version 2.0 (the "License"); +# you may not use this file except in compliance with the License. +# You may obtain a copy of the License at +# +# http://www.apache.org/licenses/LICENSE-2.0 +# +# Unless required by applicable law or agreed to in writing, software +# distributed under the License is distributed on an "AS IS" BASIS, +# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. +# See the License for the specific language governing permissions and +# limitations under the License. +# ============================================================================ +# Copyright 2021 Huawei Technologies Co., Ltd +# +# Licensed under the Apache License, Version 2.0 (the "License"); +# you may not use this file except in compliance with the License. +# You may obtain a copy of the License at +# +# http://www.apache.org/licenses/LICENSE-2.0 +# +# Unless required by applicable law or agreed to in writing, software +# distributed under the License is distributed on an "AS IS" BASIS, +# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. +# See the License for the specific language governing permissions and +# limitations under the License. +# +""" +Created on May 24, 2020 + +json ---> pd --->pkl +meta只保留reviews文件中出现过的商品 + +@author: Ziyao Geng +""" + +import pickle +import pandas as pd + + +def to_df(file_path): + """ + 转化为DataFrame结构 + :param file_path: 文件路径 + :return: + """ + with open(file_path, 'r') as fin: + df = {} + i = 0 + for line in fin: + df[i] = eval(line) + i += 1 + df = pd.DataFrame.from_dict(df, orient='index') + return df + + +reviews_df = to_df('reviews_Electronics_5.json') + +# 改变列的顺序 +# reviews2_df = pd.read_json('reviews_Electronics_5.json', lines=True) + + +with open('reviews.pkl', 'wb') as f: + pickle.dump(reviews_df, f, pickle.HIGHEST_PROTOCOL) + +meta_df = to_df('meta_Electronics.json') +meta_df = meta_df[meta_df['asin'].isin(reviews_df['asin'].unique())] +meta_df = meta_df.reset_index(drop=True) +with open('meta.pkl', 'wb') as f: + pickle.dump(meta_df, f, pickle.HIGHEST_PROTOCOL) diff --git a/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/preprocess/2_remap_id.py b/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/preprocess/2_remap_id.py new file mode 100644 index 0000000000000000000000000000000000000000..4fd621d1ffb880eac2028972db4fe78212ef6108 --- /dev/null +++ b/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/preprocess/2_remap_id.py @@ -0,0 +1,116 @@ +# +# Copyright 2017 The TensorFlow Authors. All Rights Reserved. +# +# Licensed under the Apache License, Version 2.0 (the "License"); +# you may not use this file except in compliance with the License. +# You may obtain a copy of the License at +# +# http://www.apache.org/licenses/LICENSE-2.0 +# +# Unless required by applicable law or agreed to in writing, software +# distributed under the License is distributed on an "AS IS" BASIS, +# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. +# See the License for the specific language governing permissions and +# limitations under the License. +# ============================================================================ +# Copyright 2021 Huawei Technologies Co., Ltd +# +# Licensed under the Apache License, Version 2.0 (the "License"); +# you may not use this file except in compliance with the License. +# You may obtain a copy of the License at +# +# http://www.apache.org/licenses/LICENSE-2.0 +# +# Unless required by applicable law or agreed to in writing, software +# distributed under the License is distributed on an "AS IS" BASIS, +# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. +# See the License for the specific language governing permissions and +# limitations under the License. +# +""" +Created on May 24, 2020 + +reviews_df保留'reviewerID'【用户ID】, 'asin'【产品ID】, 'unixReviewTime'【浏览时间】三列 +meta_df保留'asin'【产品ID】, 'categories'【种类】两列 + +reviews.pkl: 1689188 * 9 +['reviewerID', 'asin', 'reviewerName', 'helpful', 'reviewText', + 'overall', 'summary', 'unixReviewTime', 'reviewTime'] + + reviewerID asin ... unixReviewTime reviewTime +0 AO94DHGC771SJ 0528881469 ... 1370131200 06 2, 2013 +1 AMO214LNFCEI4 0528881469 ... 1290643200 11 25, 2010 + +meta.pkl: 63001 * 9 +['asin', 'imUrl', 'description', 'categories', 'title', 'price', + 'salesRank', 'related', 'brand'] + asin categories +0 0528881469 [[Electronics, GPS & Navigation, Vehicle GPS, ... + +@author: Ziyao Geng +""" + +import random +import pickle +import numpy as np +import pandas as pd + +random.seed(2020) + + +def build_map(df, col_name): + """ + 制作一个映射,键为列名,值为序列数字 + :param df: reviews_df / meta_df + :param col_name: 列名 + :return: 字典,键 + """ + key = sorted(df[col_name].unique().tolist()) + m = dict(zip(key, range(len(key)))) + df[col_name] = df[col_name].map(lambda x: m[x]) + return m, key + + +# reviews +reviews_df = pd.read_pickle('reviews.pkl') +reviews_df = reviews_df[['reviewerID', 'asin', 'unixReviewTime']] + +# meta +meta_df = pd.read_pickle('meta.pkl') +meta_df = meta_df[['asin', 'categories']] +# 类别只保留最后一个 +meta_df['categories'] = meta_df['categories'].map(lambda x: x[-1][-1]) + +# meta_df文件的物品ID映射 +asin_map, asin_key = build_map(meta_df, 'asin') +# meta_df文件物品种类映射 +cate_map, cate_key = build_map(meta_df, 'categories') +# reviews_df文件的用户ID映射 +revi_map, revi_key = build_map(reviews_df, 'reviewerID') + +# user_count: 192403 item_count: 63001 cate_count: 801 example_count: 1689188 +user_count, item_count, cate_count, example_count = \ + len(revi_map), len(asin_map), len(cate_map), reviews_df.shape[0] +# print('user_count: %d\titem_count: %d\tcate_count: %d\texample_count: %d' % +# (user_count, item_count, cate_count, example_count)) + +# 按物品id排序,并重置索引 +meta_df = meta_df.sort_values('asin') +meta_df = meta_df.reset_index(drop=True) + +# reviews_df文件物品id进行映射,并按照用户id、浏览时间进行排序,重置索引 +reviews_df['asin'] = reviews_df['asin'].map(lambda x: asin_map[x]) +reviews_df = reviews_df.sort_values(['reviewerID', 'unixReviewTime']) +reviews_df = reviews_df.reset_index(drop=True) +reviews_df = reviews_df[['reviewerID', 'asin', 'unixReviewTime']] + +# 各个物品对应的类别 +cate_list = np.array(meta_df['categories'], dtype='int32') + +# 保存所需数据为pkl文件 +with open('remap.pkl', 'wb') as f: + pickle.dump(reviews_df, f, pickle.HIGHEST_PROTOCOL) + pickle.dump(cate_list, f, pickle.HIGHEST_PROTOCOL) + pickle.dump((user_count, item_count, cate_count, example_count), + f, pickle.HIGHEST_PROTOCOL) + pickle.dump((asin_key, cate_key, revi_key), f, pickle.HIGHEST_PROTOCOL) diff --git a/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/preprocess/__init__.py b/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/preprocess/__init__.py new file mode 100644 index 0000000000000000000000000000000000000000..9772d6bd74cf0348a137ea4bce7fe8bd29ac9ca1 --- /dev/null +++ b/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/preprocess/__init__.py @@ -0,0 +1,29 @@ +# +# Copyright 2017 The TensorFlow Authors. All Rights Reserved. +# +# Licensed under the Apache License, Version 2.0 (the "License"); +# you may not use this file except in compliance with the License. +# You may obtain a copy of the License at +# +# http://www.apache.org/licenses/LICENSE-2.0 +# +# Unless required by applicable law or agreed to in writing, software +# distributed under the License is distributed on an "AS IS" BASIS, +# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. +# See the License for the specific language governing permissions and +# limitations under the License. +# ============================================================================ +# Copyright 2021 Huawei Technologies Co., Ltd +# +# Licensed under the Apache License, Version 2.0 (the "License"); +# you may not use this file except in compliance with the License. +# You may obtain a copy of the License at +# +# http://www.apache.org/licenses/LICENSE-2.0 +# +# Unless required by applicable law or agreed to in writing, software +# distributed under the License is distributed on an "AS IS" BASIS, +# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. +# See the License for the specific language governing permissions and +# limitations under the License. +# diff --git a/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/requirements.txt b/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/requirements.txt new file mode 100644 index 0000000000000000000000000000000000000000..e69de29bb2d1d6434b8b29ae775ad8c2e48c5391 diff --git a/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/run_1p.sh b/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/run_1p.sh new file mode 100644 index 0000000000000000000000000000000000000000..bf5c6ac58cfb34a8e9c8e834502405c91e721315 --- /dev/null +++ b/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/run_1p.sh @@ -0,0 +1,3 @@ +cur_path='pwd' +python3 ${cur_path}/train.py --epochs=5 --data_path=. --batch_size=4096 --ckpt_save_path="" --precision_mode="" > loss+perf_gpu.txt 2>&1 + diff --git a/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/test/train_full_1p.sh b/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/test/train_full_1p.sh new file mode 100644 index 0000000000000000000000000000000000000000..0b50cbd108afe12545ac09d3952bd98ae62b6cd6 --- /dev/null +++ b/TensorFlow2/built-in/keras_sample/DIN_ID2641_for_TensorFlow2.X/test/train_full_1p.sh @@ -0,0 +1,187 @@ +#!/bin/bash + +#当前路径,不需要修改 +cur_path=`pwd` +#export ASCEND_SLOG_PRINT_TO_STDOUT=1 +export NPU_CALCULATE_DEVICE=$ASCEND_DEVICE_ID +#集合通信参数,不需要修改 + +export RANK_SIZE=1 +export JOB_ID=10087 +RANK_ID_START=$ASCEND_DEVICE_ID + +# 数据集路径,保持为空,不需要修改 +data_path="" + +#基础参数,需要模型审视修改 +#网络名称,同目录名称 +Network="DIN_ID2641_for_TensorFlow2.X" +#训练epoch +train_epochs=5 +#训练batch_size +batch_size=4096 + + +############维测参数############## +# precision_mode="allow_mix_precision" +precision_mode="allow_fp32_to_fp16" +#维持参数,以下不需要修改 +over_dump=False +if [[ $over_dump == True ]];then + over_dump_path=$cur_path/overflow_dump + mkdir -p ${over_dump_path} +fi +data_dump_flag=False +data_dump_step="10" +profiling=False +use_mixlist=False +mixlist_file="${cur_path}/../configs/ops_info.json" +fusion_off_flag=False +fusion_off_file="${cur_path}/../configs/fusion_switch.cfg" +############维测参数############## + +############维测参数############## +for para in $* +do + if [[ $para == --precision_mode* ]];then + precision_mode=`echo ${para#*=}` + elif [[ $para == --over_dump* ]];then + over_dump=`echo ${para#*=}` + over_dump_path=${cur_path}/output/overflow_dump + mkdir -p ${over_dump_path} + elif [[ $para == --data_dump_flag* ]];then + data_dump_flag=`echo ${para#*=}` + data_dump_path=${cur_path}/output/data_dump + mkdir -p ${data_dump_path} + elif [[ $para == --data_dump_step* ]];then + data_dump_step=`echo ${para#*=}` + elif [[ $para == --profiling* ]];then + profiling=`echo ${para#*=}` + profiling_dump_path=${cur_path}/output/profiling + mkdir -p ${profiling_dump_path} + elif [[ $para == --data_path* ]];then + data_path=`echo ${para#*=}` + elif [[ $para == --use_mixlist* ]];then + use_mixlist=`echo ${para#*=}` + elif [[ $para == --mixlist_file* ]];then + mixlist_file=`echo ${para#*=}` + elif [[ $para == --fusion_off_flag* ]];then + fusion_off_flag=`echo ${para#*=}` + elif [[ $para == --fusion_off_file* ]];then + fusion_off_file=`echo ${para#*=}` + elif [[ $para == --log_steps* ]];then + log_steps=`echo ${para#*=}` + fi +done +############维测参数############## + +# 帮助信息,不需要修改 +if [[ $1 == --help || $1 == -h ]];then + echo"usage:./train_full_1p.sh基本信息

+ +**发布者(Publisher):Huawei** + +**应用领域(Application Domain):Recommendation** + +**版本(Version):1.1** + +**修改时间(Modified) :2022.4.11** + +**大小(Size):40KB** + +**框架(Framework):TensorFlow_2.6.2** + +**模型格式(Model Format):ckpt** + +**精度(Precision):Mixed** + +**处理器(Processor):昇腾910** + +**应用级别(Categories):Official** + +**描述(Description):基于TensorFlow框架的用于 CTR 预测的场感知分解机(FFMs)训练代码** + +概述

+ +## 简述 + +点击率 (CTR) 预测在计算广告中起着重要作用。 基于二次多项式映射和因式分解机 (FM) 的模型被广泛用于此任务。最近,FM 的一种变体,即场感知因子分解机 (FFMs),在一些全球 CTR 预测竞赛中优于现有模型。 + +本实验只用于测试,无实际用途,参考FFM库:https://github.com/ycjuan/libffm + +- 参考论文: + + https://www.csie.ntu.edu.tw/~cjlin/papers/ffm.pdf + +- 参考实现: + + https://github.com/ZiyaoGeng/Recommender-System-with-TF2.0/tree/master/FFM + +- 适配昇腾 AI 处理器的实现: + + skip + +- 通过Git获取对应commit\_id的代码方法如下: + + git clone {repository_url} # 克隆仓库的代码 + cd {repository_name} # 切换到模型的代码仓目录 + git checkout {branch} # 切换到对应分支 + git reset --hard {commit_id} # 代码设置到对应的commit_id + cd {code_path} # 切换到模型代码所在路径,若仓库下只有该模型,则无需切换 + + +## 默认配置 + +- 网络结构: + - 3-layers + - 507521520 total params + +- 训练超参(单卡): + - Batch size: 512 + - Train epochs: 5 + - Learning rate: 0.001 + - Read part: True + - Sample num: 1000000 + - Test size: 0.2 + - K: 10 + + +## 支持特性 + +| 特性列表 | 是否支持 | +| ---------- | -------- | +| 分布式训练 | 否 | +| 混合精度 | 是 | +| 数据并行 | 否 | + + +## 混合精度训练 + +昇腾910 AI处理器提供自动混合精度功能,可以针对全网中float32数据类型的算子,按照内置的优化策略,自动将部分float32的算子降低精度到float16,从而在精度损失很小的情况下提升系统性能并减少内存使用。 + +## 开启混合精度 + +拉起脚本中,传入--precision_mode='allow_mix_precision' + +``` + ./train_performance_1p_16bs.sh --help + +parameter explain: + --precision_mode precision mode(allow_fp32_to_fp16/force_fp16/must_keep_origin_dtype/allow_mix_precision) + --over_dump if or not over detection, default is False + --data_dump_flag data dump flag, default is False + --data_dump_step data dump step, default is 10 + --profiling if or not profiling for performance debug, default is False + --data_path source data of training + -h/--help show help message +``` + +相关代码示例: + +``` +flags.DEFINE_string(name='precision_mode', default= 'allow_fp32_to_fp16', + help='allow_fp32_to_fp16/force_fp16/ ' + 'must_keep_origin_dtype/allow_mix_precision.') + +npu_device.global_options().precision_mode=FLAGS.precision_mode +``` + +训练环境准备

+ +- 硬件环境和运行环境准备请参见《[CANN软件安装指南](https://support.huawei.com/enterprise/zh/ascend-computing/cann-pid-251168373?category=installation-update)》 +- 运行以下命令安装依赖。 +``` +pip3 install requirements.txt +``` +说明:依赖配置文件requirements.txt文件位于模型的根目录 + +快速上手

+ +## 数据集准备 + +1、用户自行准备好数据集,本网络使用的数据集是Criteo数据集 + +数据集目录参考如下: + +``` +├──Criteo +│ ├──demo.txt +│ ├──.DS_Store +│ ├──train.txt +``` + + + +## 模型训练 + +- 单击“立即下载”,并选择合适的下载方式下载源码包。 +- 开始训练。 + + 1. 启动训练之前,首先要配置程序运行相关环境变量。 + + 环境变量配置信息参见: + + [Ascend 910训练平台环境变量设置](https://gitee.com/ascend/modelzoo/wikis/Ascend%20910%E8%AE%AD%E7%BB%83%E5%B9%B3%E5%8F%B0%E7%8E%AF%E5%A2%83%E5%8F%98%E9%87%8F%E8%AE%BE%E7%BD%AE?sort_id=3148819) + + 2. 单卡训练 + + 2. 1单卡训练指令(脚本位于FFM_ID2632_for_TensorFlow2.X/test/train_full.sh),需要先使用cd命令进入test目录下,再使用下面的命令启动训练。请确保下面例子中的“--data_path”修改为用户的数据路径,这里选择将数据文件夹放在home目录下。 + + bash train_full_1p.sh --data_path=/home + + + + +高级参考

+ +## 脚本和示例代码 + +``` +|--LICENSE +|--README.md #说明文档 +|--criteo.py +|--model.py +|--modules.py +|--train.py #训练代码 +|--requirements.txt #所需依赖 +|--run_1p.sh +|--utils.py +|--test #训练脚本目录 +| |--train_full_1p.sh #全量训练脚本 +| |--train_performance_1p.sh #performance训练脚本 +``` + +## 脚本参数 + +``` +--data_path # the path to train data +--epochs # epochs of training +--ckpt_save_path # directory to ckpt +--batch_size # batch size for 1p +--log_steps # log frequency +--sample_num # sample num +--precision_mode # the path to save over dump data +--over_dump # if or not over detection, default is False +--data_dump_flag # data dump flag, default is False +--data_dump_step # data dump step, default is 10 +--profiling # if or not profiling for performance debug, default is False +--profiling_dump_path # the path to save profiling data +--over_dump_path # the path to save over dump data +--data_dump_path # the path to save dump data +--use_mixlist # use_mixlist flag, default is False +--fusion_off_flag # fusion_off flag, default is False +--mixlist_file # mixlist file name, default is ops_info.json +--fusion_off_file # fusion_off file name, default is fusion_switch.cfg +``` + +## 训练过程 + +通过“模型训练”中的训练指令启动单卡或者多卡训练。单卡和多卡通过运行不同脚本,支持单卡,8卡网络训练。模型存储路径为${cur_path}/output/$ASCEND_DEVICE_ID,包括训练的log以及checkpoints文件。以8卡训练为例,loss信息在文件${cur_path}/output/${ASCEND_DEVICE_ID}/train_${ASCEND_DEVICE_ID}.log中。 \ No newline at end of file diff --git a/TensorFlow2/built-in/keras_sample/FFM_ID2632_for_TensorFlow2.X/__init__.py b/TensorFlow2/built-in/keras_sample/FFM_ID2632_for_TensorFlow2.X/__init__.py new file mode 100644 index 0000000000000000000000000000000000000000..c1ea647fb81d7328ad59a4abef02a2b717f7c7b0 --- /dev/null +++ b/TensorFlow2/built-in/keras_sample/FFM_ID2632_for_TensorFlow2.X/__init__.py @@ -0,0 +1,37 @@ +# +# Copyright 2017 The TensorFlow Authors. All Rights Reserved. +# +# Licensed under the Apache License, Version 2.0 (the "License"); +# you may not use this file except in compliance with the License. +# You may obtain a copy of the License at +# +# http://www.apache.org/licenses/LICENSE-2.0 +# +# Unless required by applicable law or agreed to in writing, software +# distributed under the License is distributed on an "AS IS" BASIS, +# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. +# See the License for the specific language governing permissions and +# limitations under the License. +# ============================================================================ +# Copyright 2021 Huawei Technologies Co., Ltd +# +# Licensed under the Apache License, Version 2.0 (the "License"); +# you may not use this file except in compliance with the License. +# You may obtain a copy of the License at +# +# http://www.apache.org/licenses/LICENSE-2.0 +# +# Unless required by applicable law or agreed to in writing, software +# distributed under the License is distributed on an "AS IS" BASIS, +# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. +# See the License for the specific language governing permissions and +# limitations under the License. +# + +""" +Created on May 23, 2020 + +model: Deep interest network for click-through rate prediction + +@author: Ziyao Geng +""" \ No newline at end of file diff --git a/TensorFlow2/built-in/keras_sample/FFM_ID2632_for_TensorFlow2.X/criteo.py b/TensorFlow2/built-in/keras_sample/FFM_ID2632_for_TensorFlow2.X/criteo.py new file mode 100644 index 0000000000000000000000000000000000000000..12ca0459c3a3e2c93eebc53a69d67dd7376adb5e --- /dev/null +++ b/TensorFlow2/built-in/keras_sample/FFM_ID2632_for_TensorFlow2.X/criteo.py @@ -0,0 +1,113 @@ +# +# Copyright 2017 The TensorFlow Authors. All Rights Reserved. +# +# Licensed under the Apache License, Version 2.0 (the "License"); +# you may not use this file except in compliance with the License. +# You may obtain a copy of the License at +# +# http://www.apache.org/licenses/LICENSE-2.0 +# +# Unless required by applicable law or agreed to in writing, software +# distributed under the License is distributed on an "AS IS" BASIS, +# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. +# See the License for the specific language governing permissions and +# limitations under the License. +# ============================================================================ +# Copyright 2021 Huawei Technologies Co., Ltd +# +# Licensed under the Apache License, Version 2.0 (the "License"); +# you may not use this file except in compliance with the License. +# You may obtain a copy of the License at +# +# http://www.apache.org/licenses/LICENSE-2.0 +# +# Unless required by applicable law or agreed to in writing, software +# distributed under the License is distributed on an "AS IS" BASIS, +# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. +# See the License for the specific language governing permissions and +# limitations under the License. +# + +""" +Created on July 13, 2020 + +dataset锛歝riteo dataset sample +features锛? +- Label - Target variable that indicates if an ad was clicked (1) or not (0). +- I1-I13 - A total of 13 columns of integer features (mostly count features). +- C1-C26 - A total of 26 columns of categorical features. +The values of these features have been hashed onto 32 bits for anonymization purposes. + +@author: Ziyao Geng(zggzy1996@163.com) +""" + +import pandas as pd +import numpy as np + +from sklearn.preprocessing import LabelEncoder, KBinsDiscretizer +from sklearn.model_selection import train_test_split + +from utils import sparseFeature + + +def create_criteo_dataset(file, embed_dim=8, read_part=True, sample_num=100000, test_size=0.2,static=1): + """ + a example about creating criteo dataset + :param file: dataset's path + :param embed_dim: the embedding dimension of sparse features + :param read_part: whether to read part of it + :param sample_num: the number of instances if read_part is True + :param test_size: ratio of test dataset + :return: feature columns, train, test + """ + names = ['label', 'I1', 'I2', 'I3', 'I4', 'I5', 'I6', 'I7', 'I8', 'I9', 'I10', 'I11', + 'I12', 'I13', 'C1', 'C2', 'C3', 'C4', 'C5', 'C6', 'C7', 'C8', 'C9', 'C10', 'C11', + 'C12', 'C13', 'C14', 'C15', 'C16', 'C17', 'C18', 'C19', 'C20', 'C21', 'C22', + 'C23', 'C24', 'C25', 'C26'] + + if read_part: + data_df = pd.read_csv(file, sep='\t', iterator=True, header=None, + names=names) + data_df = data_df.get_chunk(sample_num) + + else: + data_df = pd.read_csv(file, sep='\t', header=None, names=names) + + sparse_features = ['C' + str(i) for i in range(1, 27)] + dense_features = ['I' + str(i) for i in range(1, 14)] + features = sparse_features + dense_features + + data_df[sparse_features] = data_df[sparse_features].fillna('-1') + data_df[dense_features] = data_df[dense_features].fillna(0) + + # Bin continuous data into intervals. + est = KBinsDiscretizer(n_bins=100, encode='ordinal', strategy='uniform') + data_df[dense_features] = est.fit_transform(data_df[dense_features]) + + for feat in sparse_features: + le = LabelEncoder() + data_df[feat] = le.fit_transform(data_df[feat]) + + # ==============Feature Engineering=================== + + # ==================================================== + feature_columns = [sparseFeature(feat, int(data_df[feat].max()) + 1, embed_dim=embed_dim) + for feat in features] + train, test = train_test_split(data_df, test_size=test_size) + if static==1: + print('=====================[DEBUG]======================',flush=True) + train_X = train[features].values[:77824].astype('int32') + train_y = train['label'].values[:77824].astype('int32') + print("train_X.shape",train_X.shape,flush=True) + print("train_y.shape",train_y.shape,flush=True) + test_X = test[features].values[:8192].astype('int32') + test_y = test['label'].values[:8192].astype('int32') + print("test_X.shape",test_X.shape,flush=True) + print("test_y.shape",test_y.shape,flush=True) + else: + train_X = train[features].values.astype('int32') + train_y = train['label'].values.astype('int32') + test_X = test[features].values.astype('int32') + test_y = test['label'].values.astype('int32') + + return feature_columns, (train_X, train_y), (test_X, test_y) \ No newline at end of file diff --git a/TensorFlow2/built-in/keras_sample/FFM_ID2632_for_TensorFlow2.X/model.py b/TensorFlow2/built-in/keras_sample/FFM_ID2632_for_TensorFlow2.X/model.py new file mode 100644 index 0000000000000000000000000000000000000000..dcc40f4d86f69d46ca3cea20a85b77e95f40082e --- /dev/null +++ b/TensorFlow2/built-in/keras_sample/FFM_ID2632_for_TensorFlow2.X/model.py @@ -0,0 +1,69 @@ +# +# Copyright 2017 The TensorFlow Authors. All Rights Reserved. +# +# Licensed under the Apache License, Version 2.0 (the "License"); +# you may not use this file except in compliance with the License. +# You may obtain a copy of the License at +# +# http://www.apache.org/licenses/LICENSE-2.0 +# +# Unless required by applicable law or agreed to in writing, software +# distributed under the License is distributed on an "AS IS" BASIS, +# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. +# See the License for the specific language governing permissions and +# limitations under the License. +# ============================================================================ +# Copyright 2021 Huawei Technologies Co., Ltd +# +# Licensed under the Apache License, Version 2.0 (the "License"); +# you may not use this file except in compliance with the License. +# You may obtain a copy of the License at +# +# http://www.apache.org/licenses/LICENSE-2.0 +# +# Unless required by applicable law or agreed to in writing, software +# distributed under the License is distributed on an "AS IS" BASIS, +# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. +# See the License for the specific language governing permissions and +# limitations under the License. +# +""" +Created on August 26, 2020 +Updated on May 19, 2021 + +model: Field-aware Factorization Machines for CTR Prediction + +@author: Ziyao Geng(zggzy1996@163.com) +""" + +import tensorflow as tf +from tensorflow.keras import Model +from tensorflow.keras.layers import Input, Layer +from tensorflow.keras.regularizers import l2 + +from modules import FFM_Layer + + +class FFM(Model): + def __init__(self, feature_columns, k, w_reg=1e-6, v_reg=1e-6): + """ + FFM architecture + :param feature_columns: A list. sparse column feature information. + :param k: the latent vector + :param w_reg: the regularization coefficient of parameter w + :param field_reg_reg: the regularization coefficient of parameter v + """ + super(FFM, self).__init__() + self.sparse_feature_columns = feature_columns + self.ffm = FFM_Layer(self.sparse_feature_columns, k, w_reg, v_reg) + + def call(self, inputs, **kwargs): + ffm_out = self.ffm(inputs) + outputs = tf.nn.sigmoid(ffm_out) + return outputs + + def summary(self, **kwargs): + sparse_inputs = Input(shape=(len(self.sparse_feature_columns),), dtype=tf.int32) + tf.keras.Model(inputs=sparse_inputs, outputs=self.call(sparse_inputs)).summary() + + diff --git a/TensorFlow2/built-in/keras_sample/FFM_ID2632_for_TensorFlow2.X/modelzoo_level.txt b/TensorFlow2/built-in/keras_sample/FFM_ID2632_for_TensorFlow2.X/modelzoo_level.txt new file mode 100644 index 0000000000000000000000000000000000000000..a829ab59b97a1022dd6fc33b59b7ae0d55009432 --- /dev/null +++ b/TensorFlow2/built-in/keras_sample/FFM_ID2632_for_TensorFlow2.X/modelzoo_level.txt @@ -0,0 +1,3 @@ +FuncStatus:OK +PerfStatus:NOK +PrecisionStatus:OK \ No newline at end of file diff --git a/TensorFlow2/built-in/keras_sample/FFM_ID2632_for_TensorFlow2.X/modules.py b/TensorFlow2/built-in/keras_sample/FFM_ID2632_for_TensorFlow2.X/modules.py new file mode 100644 index 0000000000000000000000000000000000000000..72b8ca1eb4fbdb7faa80a8f19f9718cda1785ac2 --- /dev/null +++ b/TensorFlow2/built-in/keras_sample/FFM_ID2632_for_TensorFlow2.X/modules.py @@ -0,0 +1,89 @@ +# +# Copyright 2017 The TensorFlow Authors. All Rights Reserved. +# +# Licensed under the Apache License, Version 2.0 (the "License"); +# you may not use this file except in compliance with the License. +# You may obtain a copy of the License at +# +# http://www.apache.org/licenses/LICENSE-2.0 +# +# Unless required by applicable law or agreed to in writing, software +# distributed under the License is distributed on an "AS IS" BASIS, +# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. +# See the License for the specific language governing permissions and +# limitations under the License. +# ============================================================================ +# Copyright 2021 Huawei Technologies Co., Ltd +# +# Licensed under the Apache License, Version 2.0 (the "License"); +# you may not use this file except in compliance with the License. +# You may obtain a copy of the License at +# +# http://www.apache.org/licenses/LICENSE-2.0 +# +# Unless required by applicable law or agreed to in writing, software +# distributed under the License is distributed on an "AS IS" BASIS, +# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. +# See the License for the specific language governing permissions and +# limitations under the License. +# + +""" +Created on May 19, 2021 + +modules of FFM + +@author: Ziyao Geng(zggzy1996@163.com) +""" + +import tensorflow as tf +from tensorflow.keras.layers import Layer +from tensorflow.keras.regularizers import l2 + + +class FFM_Layer(Layer): + def __init__(self, sparse_feature_columns, k, w_reg=1e-6, v_reg=1e-6): + """ + + :param dense_feature_columns: A list. sparse column feature information. + :param k: A scalar. The latent vector + :param w_reg: A scalar. The regularization coefficient of parameter w + :param v_reg: A scalar. The regularization coefficient of parameter v + """ + super(FFM_Layer, self).__init__() + self.sparse_feature_columns = sparse_feature_columns + self.k = k + self.w_reg = w_reg + self.v_reg = v_reg + self.index_mapping = [] + self.feature_length = 0 + for feat in self.sparse_feature_columns: + self.index_mapping.append(self.feature_length) + self.feature_length += feat['feat_num'] + self.field_num = len(self.sparse_feature_columns) + + def build(self, input_shape): + self.w0 = self.add_weight(name='w0', shape=(1,), + initializer=tf.zeros_initializer(), + trainable=True) + self.w = self.add_weight(name='w', shape=(self.feature_length, 1), + initializer='random_normal', + regularizer=l2(self.w_reg), + trainable=True) + self.v = self.add_weight(name='v', + shape=(self.feature_length, self.field_num, self.k), + initializer='random_normal', + regularizer=l2(self.v_reg), + trainable=True) + + def call(self, inputs, **kwargs): + inputs = inputs + tf.convert_to_tensor(self.index_mapping) + # first order + first_order = self.w0 + tf.reduce_sum(tf.nn.embedding_lookup(self.w, inputs), axis=1) # (batch_size, 1) + # field second order + second_order = 0 + latent_vector = tf.reduce_sum(tf.nn.embedding_lookup(self.v, inputs), axis=1) # (batch_size, field_num, k) + for i in range(self.field_num): + for j in range(i+1, self.field_num): + second_order += tf.reduce_sum(latent_vector[:, i] * latent_vector[:, j], axis=1, keepdims=True) + return first_order + second_order \ No newline at end of file diff --git a/TensorFlow2/built-in/keras_sample/FFM_ID2632_for_TensorFlow2.X/requirements.txt b/TensorFlow2/built-in/keras_sample/FFM_ID2632_for_TensorFlow2.X/requirements.txt new file mode 100644 index 0000000000000000000000000000000000000000..e69de29bb2d1d6434b8b29ae775ad8c2e48c5391 diff --git a/TensorFlow2/built-in/keras_sample/FFM_ID2632_for_TensorFlow2.X/run_1p.sh b/TensorFlow2/built-in/keras_sample/FFM_ID2632_for_TensorFlow2.X/run_1p.sh new file mode 100644 index 0000000000000000000000000000000000000000..2a1601ec17b3cd849557d2340f431634db4f0261 --- /dev/null +++ b/TensorFlow2/built-in/keras_sample/FFM_ID2632_for_TensorFlow2.X/run_1p.sh @@ -0,0 +1,3 @@ +cur_path='pwd' +python3 ${cur_path}/train.py --epochs=5 --data_path=. --batch_size=4096 --sample_num=10000 --ckpt_save_path="" --precision_mode="" > loss+perf_gpu.txt 2>&1 + diff --git a/TensorFlow2/built-in/keras_sample/FFM_ID2632_for_TensorFlow2.X/test/train_full_1p.sh b/TensorFlow2/built-in/keras_sample/FFM_ID2632_for_TensorFlow2.X/test/train_full_1p.sh new file mode 100644 index 0000000000000000000000000000000000000000..cec5bd845f0056a3db104f2dff2d18a51bf22490 --- /dev/null +++ b/TensorFlow2/built-in/keras_sample/FFM_ID2632_for_TensorFlow2.X/test/train_full_1p.sh @@ -0,0 +1,193 @@ +#!/bin/bash + +#当前路径,不需要修改 +cur_path=`pwd` +#export ASCEND_SLOG_PRINT_TO_STDOUT=1 +export NPU_CALCULATE_DEVICE=$ASCEND_DEVICE_ID +#集合通信参数,不需要修改 + +export RANK_SIZE=1 +export JOB_ID=10087 +RANK_ID_START=$ASCEND_DEVICE_ID + +# 数据集路径,保持为空,不需要修改 +data_path="" + +#基础参数,需要模型审视修改 +#网络名称,同目录名称 +Network="FFM_ID2632_for_TensorFlow2.X" +#训练epoch +train_epochs=10 +#训练batch_size +batch_size=4096 + +sample_num=1000000 + +############维测参数############## +precision_mode="allow_mix_precision" +#维持参数,以下不需要修改 +over_dump=False +if [[ $over_dump == True ]];then + over_dump_path=$cur_path/overflow_dump + mkdir -p ${over_dump_path} +fi +data_dump_flag=False +data_dump_step="10" +profiling=False +use_mixlist=False +mixlist_file="${cur_path}/../configs/ops_info.json" +fusion_off_flag=False +fusion_off_file="${cur_path}/../configs/fusion_switch.cfg" +############维测参数############## + +############维测参数############## +for para in $* +do + if [[ $para == --precision_mode* ]];then + precision_mode=`echo ${para#*=}` + elif [[ $para == --over_dump* ]];then + over_dump=`echo ${para#*=}` + over_dump_path=${cur_path}/output/overflow_dump + mkdir -p ${over_dump_path} + elif [[ $para == --data_dump_flag* ]];then + data_dump_flag=`echo ${para#*=}` + data_dump_path=${cur_path}/output/data_dump + mkdir -p ${data_dump_path} + elif [[ $para == --data_dump_step* ]];then + data_dump_step=`echo ${para#*=}` + elif [[ $para == --profiling* ]];then + profiling=`echo ${para#*=}` + profiling_dump_path=${cur_path}/output/profiling + mkdir -p ${profiling_dump_path} + elif [[ $para == --data_path* ]];then + data_path=`echo ${para#*=}` + elif [[ $para == --use_mixlist* ]];then + use_mixlist=`echo ${para#*=}` + elif [[ $para == --mixlist_file* ]];then + mixlist_file=`echo ${para#*=}` + elif [[ $para == --fusion_off_flag* ]];then + fusion_off_flag=`echo ${para#*=}` + elif [[ $para == --fusion_off_file* ]];then + fusion_off_file=`echo ${para#*=}` + elif [[ $para == --log_steps* ]];then + log_steps=`echo ${para#*=}` + fi +done +############维测参数############## + +# 帮助信息,不需要修改 +if [[ $1 == --help || $1 == -h ]];then + echo"usage:./train_full_1p.sh基本信息

+ +**发布者(Publisher):Huawei** + +**应用领域(Application Domain):Recommendation** + +**版本(Version):1.1** + +**修改时间(Modified) :2022.04.21** + +**大小(Size):16M** + +**框架(Framework):TensorFlow_2.6.2** + +**模型格式(Model Format):ckpt** + +**精度(Precision):Mixed** + +**处理器(Processor):昇腾910** + +**应用级别(Categories):Official** + +**描述(Description):基于TensorFlow2.X框架的推荐算法CTR预估模型的训练代码** + + +概述

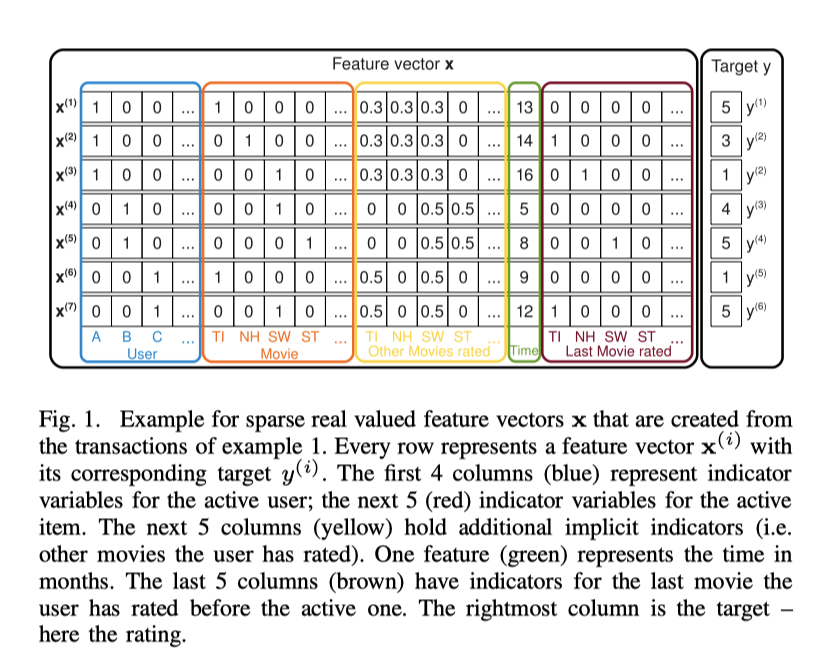

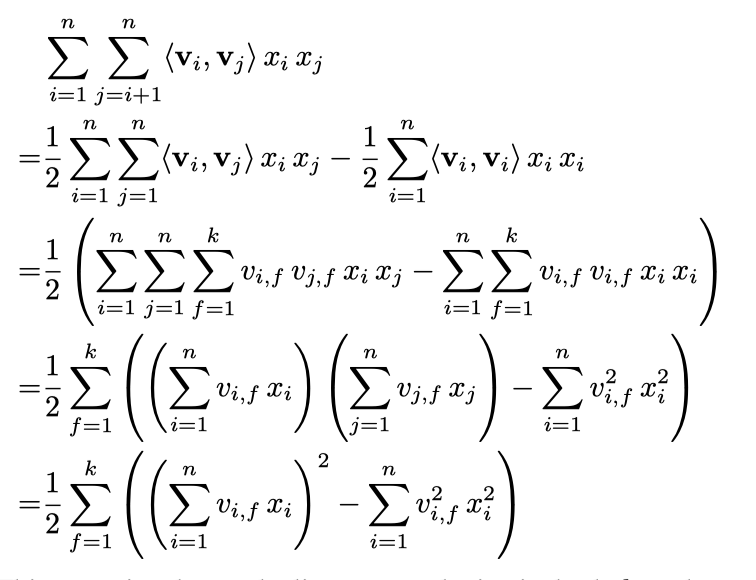

+ +## 简述 + + 因子分解机(Factorization Machine, FM)是由Steffen Rendle提出的一种基于矩阵分解的机器学习算法。目前,被广泛的应用于广告预估模型中,相比LR而言,效果强了不少。是一种不错的CTR预估模型,也是我们现在在使用的广告点击率预估模型,比起著名的Logistic Regression, FM能够把握一些组合的高阶特征,因此拥有更强的表现力。 + + + - 参考论文: + https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=5694074(https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=5694074) + + - 参考实现: + https://github.com/ZiyaoGeng/Recommender-System-with-TF2.0/tree/master/FM(https://github.com/ZiyaoGeng/Recommender-System-with-TF2.0/tree/master/FM) + + + - 适配昇腾 AI 处理器的实现: + skip + + - 通过Git获取对应commit\_id的代码方法如下: + ``` + git clone {repository_url} # 克隆仓库的代码 + cd {repository_name} # 切换到模型的代码仓目录 + git checkout {branch} # 切换到对应分支 + git reset --hard {commit_id} # 代码设置到对应的commit_id + cd {code_path} # 切换到模型代码所在路径,若仓库下只有该模型,则无需切换 + ``` + + + + +## 默认配置 + + +- 网络结构 + - SVM模型与factorization模型的结合,可以在非常稀疏的数据中进行合理的参数轨迹。 + - 考虑到多维特征之间的交叉关系,其中参数的训练使用的是矩阵分解的方法。 + - 在FM中,每个评分记录被放在一个矩阵的一行中,从列数看特征矩阵x的前面u列即为User矩阵,每个User对应一列,接下来的i列即为item特征矩阵,之后数列是多余的非显式的特征关系。后面一列表示时间关系,最后i列则表示同一个user在上一条记录中的结果,用于表示用户的历史行为。 + +- 训练超参(单卡): + - file:Criteo文件; + - read_part:是否读取部分数据,True(full脚本为False); + - sample_num:读取部分时,样本数量,1000000; + - test_size:测试集比例,0.2; + - k:隐因子,8; + - dnn_dropout:Dropout, 0.5; + - hidden_unit:DNN的隐藏单元,[256, 128, 64]; + - learning_rate:学习率,0.001; + - batch_size:4096; + - epoch:10; + + +## 支持特性 + +| 特性列表 | 是否支持 | +|-------|------| +| 分布式训练 | 否 | +| 混合精度 | 是 | +| 数据并行 | 否 | + +## 混合精度训练 + +昇腾910 AI处理器提供自动混合精度功能,可以针对全网中float32数据类型的算子,按照内置的优化策略,自动将部分float32的算子降低精度到float16,从而在精度损失很小的情况下提升系统性能并减少内存使用。 + +## 开启混合精度 +相关代码示例。 + +``` + config_proto = tf.ConfigProto(allow_soft_placement=True) + custom_op = config_proto.graph_options.rewrite_options.custom_optimizers.add() + custom_op.name = 'NpuOptimizer' + custom_op.parameter_map["use_off_line"].b = True + custom_op.parameter_map["precision_mode"].s = tf.compat.as_bytes("allow_mix_precision") + config_proto.graph_options.rewrite_options.remapping = RewriterConfig.OFF + session_config = npu_config_proto(config_proto=config_proto) +``` + +训练环境准备

+ +- 硬件环境和运行环境准备请参见《[CANN软件安装指南](https://support.huawei.com/enterprise/zh/ascend-computing/cann-pid-251168373?category=installation-update)》 +- 运行以下命令安装依赖。 +``` +pip3 install requirements.txt +``` +说明:依赖配置文件requirements.txt文件位于模型的根目录 + + +快速上手

+ +## 数据集准备 + + 采用Criteo数据集进行测试。数据集的处理见../data_process文件,主要分为: +1. 考虑到Criteo文件过大,因此可以通过read_part和sample_sum读取部分数据进行测试; +2. 对缺失数据进行填充; +3. 对密集数据I1-I13进行离散化分桶(bins=100),对稀疏数据C1-C26进行重新编码LabelEncoder; +4. 整理得到feature_columns; +5. 切分数据集,最后返回feature_columns, (train_X, train_y), (test_X, test_y); + + +## 模型训练 +- 单击“立即下载”,并选择合适的下载方式下载源码包。 +- 开始训练。 + + 1. 启动训练之前,首先要配置程序运行相关环境变量。 + + 环境变量配置信息参见: + + [Ascend 910训练平台环境变量设置](https://gitee.com/ascend/modelzoo/wikis/Ascend%20910%E8%AE%AD%E7%BB%83%E5%B9%B3%E5%8F%B0%E7%8E%AF%E5%A2%83%E5%8F%98%E9%87%8F%E8%AE%BE%E7%BD%AE?sort_id=3148819) + + + 2. 单卡训练 + + 2.1 设置单卡训练参数(脚本位于FM_ID2631_for_TensorFlow2.X/test/train_full_1p.sh),示例如下。 + + + ``` + batch_size=4096 + #训练step + train_epochs=10 + #训练epoch + ``` + + 2.2 单卡训练指令(FM_ID2631_for_TensorFlow2.X/test) + + ``` + 于终端中运行export ASCEND_DEVICE_ID=0 (0~7)以指定单卡训练时使用的卡 + bash train_full_1p.sh --data_path=xx + 数据集应为txt类型,配置data_path时需指定为Criteo这一层,例:--data_path=/home/data/Criteo + ├─data + ├──Criteo + │ ├──demo.txt + │ ├──.DS_Store + │ ├──train.txt + + ``` + +迁移学习指导

+ +- 数据集准备。 + + 1. 获取数据。 + 请参见“快速上手”中的数据集准备 + +- 模型训练 + + 请参考“快速上手”章节 + +高级参考

+ +## 脚本和示例代码 + + + |--modelzoo_level.txt #状态文件 + |--LICENSE + |--README.md #说明文档 + |--criteo.py + |--model.py #模型结构代码 + |--modules.py + |--train.py #训练代码 + |--requirements.txt #所需依赖 + |--run_1p.sh + |--utils.py + |--test #训练脚本目录 + | |--train_full_1p.sh #全量训练脚本 + | |--train_performance_1p.sh #performance训练脚本 + + +## 脚本参数 + +``` +batch_size 训练batch_size +epochs 训练epoch数 +precision_mode default="allow_mix_precision", type=str,help='the path to save over dump data' +over_dump type=ast.literal_eval,help='if or not over detection, default is False' +data_dump_flag type=ast.literal_eval,help='data dump flag, default is False' +data_dump_step data dump step, default is 10 +profiling type=ast.literal_eval help='if or not profiling for performance debug, default is False' +profiling_dump_path type=str, help='the path to save profiling data' +over_dump_path type=str, help='the path to save over dump data' +data_dump_path type=str, help='the path to save dump data' +use_mixlist type=ast.literal_eval,help='use_mixlist flag, default is False' +fusion_off_flag type=ast.literal_eval,help='fusion_off flag, default is False' +mixlist_file type=str,help='mixlist file name, default is ops_info.json' +fusion_off_file type=str,help='fusion_off file name, default is fusion_switch.cfg' +auto_tune help='auto_tune flag, default is False' +``` + +## 训练过程 + +通过“模型训练”中的训练指令启动单卡训练。 +将训练脚本(train_full_1p.sh)中的data_path设置为训练数据集的路径。具体的流程参见“模型训练”的示例。 diff --git a/TensorFlow2/built-in/keras_sample/FM_ID2631_for_TensorFlow2.X/README_BAK.md b/TensorFlow2/built-in/keras_sample/FM_ID2631_for_TensorFlow2.X/README_BAK.md new file mode 100644 index 0000000000000000000000000000000000000000..18d7e7a75bd84f77ef5eb28fc53d024e2ca9e597 --- /dev/null +++ b/TensorFlow2/built-in/keras_sample/FM_ID2631_for_TensorFlow2.X/README_BAK.md @@ -0,0 +1,70 @@ +## FM + +### 1. 论文 +Factorization Machines + +**创新**:**经典因子分解机模型** + + + +### 2. 模型结构 + +

基本信息

+ +**发布者(Publisher):Huawei** + +**应用领域(Application Domain):Image Classification** + +**版本(Version):1.1** + +**修改时间(Modified) :2022.4.8** + +**大小(Size):210KB** + +**框架(Framework):TensorFlow_2.6** + +**模型格式(Model Format):ckpt** + +**精度(Precision):Mixed** + +**处理器(Processor):昇腾910** + +**应用级别(Categories):Official** + +**描述(Description):基于TensorFlow框架的Octave Convolution网络训练代码** + +概述

+ +- OctConv全称为:**Octave Convolution**是一种即插即用的卷积单元,可以直接替换常规卷积,而无需对网络架构进行任何调整。这种卷积可以存储和处理在较低空间分辨率下空间变化“较慢”的特征图,从而降低内存和计算成本。 + +- 参考论文: + + https://arxiv.org/abs/1904.05049 + +- 参考实现: + + https://github.com/tuanzhangCS/octconv_resnet + +- 适配昇腾 AI 处理器的实现: + + skip + +- 通过Git获取对应commit\_id的代码方法如下: + + git clone {repository_url} # 克隆仓库的代码 + cd {repository_name} # 切换到模型的代码仓目录 + git checkout {branch} # 切换到对应分支 + git reset --hard {commit_id} # 代码设置到对应的commit_id + cd {code_path} # 切换到模型代码所在路径,若仓库下只有该模型,则无需切换 + + +## 默认配置 +- 网络结构 + - ResNet v1 + - Stacks of 2 x (3 x 3) Conv2D-BN-ReLU + Last ReLU is after the shortcut connection. + At the beginning of each stage, the feature map size is halved (downsampled) + by a convolutional layer with strides=2, while the number of filters is + doubled. Within each stage, the layers have the same number filters and the + same number of filters. + Features maps sizes: + stage 0: 32x32, 16 + stage 1: 16x16, 32 + stage 2: 8x8, 64 + The Number of parameters is 0.27M. + +- 训练超参(单卡): + - Batch size: 32 + - Train epoch: 200 + - block_num: 3 + + +## 支持特性 + +| 特性列表 | 是否支持 | +|-------|------| +| 分布式训练 | 否 | +| 混合精度 | 否 | +| 数据并行 | 否 | + + +## 混合精度训练 + +昇腾910 AI处理器提供自动混合精度功能,可以针对全网中float32数据类型的算子,按照内置的优化策略,自动将部分float32的算子降低精度到float16,从而在精度损失很小的情况下提升系统性能并减少内存使用。 + +## 开启混合精度 +拉起脚本中,默认开启混合精度传入,即precision_mode='allow_mix_precision' + +``` + ./train_performance_1p.sh --help + +parameter explain: + --precision_mode precision mode(allow_fp32_to_fp16/force_fp16/must_keep_origin_dtype/allow_mix_precision) + --over_dump if or not over detection, default is False + --data_dump_flag data dump flag, default is False + --data_dump_step data dump step, default is 10 + --profiling if or not profiling for performance debug, default is False + --data_path source data of training + --max_step # of step for training + --learning_rate learning rate + --batch batch size + --modeldir model dir + --save_interval save interval for ckpt + --loss_scale enable loss scale ,default is False + -h/--help show help message +``` + +相关代码示例: + +``` +custom_op.parameter_map["precision_mode"].s = tf.compat.as_bytes(args.precision_mode) +``` + +训练环境准备

+ +1. 硬件环境和运行环境准备请参见《[CANN软件安装指南](https://support.huawei.com/enterprise/zh/category/ai-computing-platform-pid-1557196528909)》 +2. 宿主机上需要安装Docker并登录[Ascend Hub中心](https://ascendhub.huawei.com/#/detail?name=ascend-tensorflow-arm)获取镜像。 + + 当前模型支持的镜像列表如[表1](#zh-cn_topic_0000001074498056_table1519011227314)所示。 + + **表 1** 镜像列表 + + + +| + | ++ | ++ | +

|---|---|---|

|

+ + | ++ | +

快速上手

+ +## 数据集准备 + +1、用户自行准备好数据集,包括训练数据集和验证数据集。使用的数据集是wikipedia + +2、训练的数据集放在train目录,验证的数据集放在eval目录 + +3、bert 预训练的模型及数据集可以参考"简述->开源代码路径处理" + +数据集目录参考如下: + +``` +├─data +│ └─cifar-10-batches-py +│ ├──batchex.meta +│ ├──data_batch_1 +│ ├──data_batch_2 +│ ├──data_batch_3 +│ ├──data_batch_4 +│ ├──data_batch_5 +│ ├──readme.html +│ └─test_batch +``` +## 模型训练 +- 单击“立即下载”,并选择合适的下载方式下载源码包。 +- 开始训练。 + + 1. 启动训练之前,首先要配置程序运行相关环境变量。 + + 环境变量配置信息参见: + + [Ascend 910训练平台环境变量设置](https://gitee.com/ascend/modelzoo/wikis/Ascend%20910%E8%AE%AD%E7%BB%83%E5%B9%B3%E5%8F%B0%E7%8E%AF%E5%A2%83%E5%8F%98%E9%87%8F%E8%AE%BE%E7%BD%AE?sort_id=3148819) + + + 2. 单卡训练 + + 2. 1单卡训练指令(脚本位于BertLarge_TF2.x_for_Tensorflow/test/train_full_1p_16bs.sh),请确保下面例子中的“--data_path”修改为用户的data的路径,这里选择将data文件夹放在home目录下。训练默认开启混合精度,即precision_mode='allow_mix_precision' + + bash train_full_1p.sh --data_path=/home/tfrecord + +高级参考