diff --git a/cv/ocr/dbnet/pytorch/.gitignore b/cv/ocr/dbnet/pytorch/.gitignore

new file mode 100755

index 0000000000000000000000000000000000000000..628f7f2699eb1265cba9873ad70f89b60f6a7eb2

--- /dev/null

+++ b/cv/ocr/dbnet/pytorch/.gitignore

@@ -0,0 +1,49 @@

+

+# Byte-compiled / optimized / DLL files

+__pycache__/

+*.py[cod]

+*$py.class

+

+# C extensions

+*.so

+

+# PyTorch checkpoint

+*.pth

+

+# Distribution / packaging

+.Python

+build/

+develop-eggs/

+dist/

+downloads/

+eggs/

+.eggs/

+lib/

+lib64/

+parts/

+sdist/

+var/

+wheels/

+*.egg-info/

+.installed.cfg

+OCR_Detect/

+*.egg

+MANIFEST

+

+# PyInstaller

+# Usually these files are written by a python script from a template

+# before PyInstaller builds the exe, so as to inject date/other infos into it.

+*.manifest

+*.spec

+

+# Installer logs

+pip-log.txt

+pip-delete-this-directory.txt

+

+# Unit test / coverage reports

+htmlcov/

+.tox/

+.coverage

+.coverage.*

+.cache

+

diff --git a/cv/ocr/dbnet/pytorch/CITATION.cff b/cv/ocr/dbnet/pytorch/CITATION.cff

new file mode 100755

index 0000000000000000000000000000000000000000..7d1d93a7c68daf442bc6540b197b401e7a38b91c

--- /dev/null

+++ b/cv/ocr/dbnet/pytorch/CITATION.cff

@@ -0,0 +1,9 @@

+cff-version: 1.2.0

+message: "If you use this software, please cite it as below."

+title: "OpenMMLab Text Detection, Recognition and Understanding Toolbox"

+authors:

+ - name: "MMOCR Contributors"

+version: 0.3.0

+date-released: 2020-08-15

+repository-code: "https://github.com/open-mmlab/mmocr"

+license: Apache-2.0

diff --git a/cv/ocr/dbnet/pytorch/EGG-INFO/PKG-INFO b/cv/ocr/dbnet/pytorch/EGG-INFO/PKG-INFO

new file mode 100755

index 0000000000000000000000000000000000000000..9280d7ec8910f9759c9d8490c4df803cfb4e7583

--- /dev/null

+++ b/cv/ocr/dbnet/pytorch/EGG-INFO/PKG-INFO

@@ -0,0 +1,380 @@

+Metadata-Version: 2.1

+Name: dbnet_det

+Version: 2.25.0

+Summary: OpenMMLab Detection Toolbox and Benchmark

+Home-page: https://github.com/open-mmlab/dbnet_detection

+Author: dbnet_detection Contributors

+Author-email: openmmlab@gmail.com

+License: Apache License 2.0

+Keywords: computer vision,object detection

+Classifier: Development Status :: 5 - Production/Stable

+Classifier: License :: OSI Approved :: Apache Software License

+Classifier: Operating System :: OS Independent

+Classifier: Programming Language :: Python :: 3

+Classifier: Programming Language :: Python :: 3.6

+Classifier: Programming Language :: Python :: 3.7

+Classifier: Programming Language :: Python :: 3.8

+Classifier: Programming Language :: Python :: 3.9

+Description-Content-Type: text/markdown

+Provides-Extra: all

+Provides-Extra: tests

+Provides-Extra: build

+Provides-Extra: optional

+

+

+[📘Documentation](https://dbnet_detection.readthedocs.io/en/stable/) |

+[🛠️Installation](https://dbnet_detection.readthedocs.io/en/stable/get_started.html) |

+[👀Model Zoo](https://dbnet_detection.readthedocs.io/en/stable/model_zoo.html) |

+[🆕Update News](https://dbnet_detection.readthedocs.io/en/stable/changelog.html) |

+[🚀Ongoing Projects](https://github.com/open-mmlab/dbnet_detection/projects) |

+[🤔Reporting Issues](https://github.com/open-mmlab/dbnet_detection/issues/new/choose)

+

+

+

+English | [简体中文](README_zh-CN.md)

+

+

+

+## Introduction

+

+dbnet_detection is an open source object detection toolbox based on PyTorch. It is

+a part of the [OpenMMLab](https://openmmlab.com/) project.

+

+The master branch works with **PyTorch 1.5+**.

+


+

+

+

+Major features

+

+- **Modular Design**

+

+ We decompose the detection framework into different components and one can easily construct a customized object detection framework by combining different modules.

+

+- **Support of multiple frameworks out of the box**

+

+ The toolbox directly supports popular and contemporary detection frameworks, *e.g.* Faster RCNN, Mask RCNN, RetinaNet, etc.

+

+- **High efficiency**

+

+ All basic bbox and mask operations run on GPUs. The training speed is faster than or comparable to other codebases, including [Detectron2](https://github.com/facebookresearch/detectron2), [maskrcnn-benchmark](https://github.com/facebookresearch/maskrcnn-benchmark) and [SimpleDet](https://github.com/TuSimple/simpledet).

+

+- **State of the art**

+

+ The toolbox stems from the codebase developed by the *dbnet_det* team, who won the [COCO Detection Challenge](http://cocodataset.org/#detection-leaderboard) in 2018, and we keep pushing it forward.

+

+

+

+Apart from dbnet_detection, we also released [dbnet_cv](https://github.com/open-mmlab/dbnet_cv), a library for computer vision research on which this toolbox heavily depends.

+

+## What's New

+

+**2.25.0** was released on 1/6/2022:

+

+- Support dedicated `dbnet_detWandbHook` hook

+- Support [ConvNeXt](configs/convnext), [DDOD](configs/ddod), [SOLOv2](configs/solov2)

+- Support [Mask2Former](configs/mask2former) for instance segmentation

+- Rename [config files of Mask2Former](configs/mask2former)

+

+Please refer to [changelog.md](docs/en/changelog.md) for details and release history.

+

+For compatibility changes between different versions of dbnet_detection, please refer to [compatibility.md](docs/en/compatibility.md).

+

+## Installation

+

+Please refer to [Installation](docs/en/get_started.md/#Installation) for installation instructions.

+

+## Getting Started

+

+Please see [get_started.md](docs/en/get_started.md) for the basic usage of dbnet_detection. We provide a [Colab tutorial](demo/dbnet_det_Tutorial.ipynb), an [instance segmentation Colab tutorial](demo/dbnet_det_InstanceSeg_Tutorial.ipynb), and other tutorials for:

+

+- [with existing dataset](docs/en/1_exist_data_model.md)

+- [with new dataset](docs/en/2_new_data_model.md)

+- [with existing dataset and new model](docs/en/3_exist_data_new_model.md)
+- [learn about configs](docs/en/tutorials/config.md)
+- [customize datasets](docs/en/tutorials/customize_dataset.md)
+- [customize data pipelines](docs/en/tutorials/data_pipeline.md)
+- [customize models](docs/en/tutorials/customize_models.md)
+- [customize runtime settings](docs/en/tutorials/customize_runtime.md)
+- [customize losses](docs/en/tutorials/customize_losses.md)

+- [finetuning models](docs/en/tutorials/finetune.md)

+- [export a model to ONNX](docs/en/tutorials/pytorch2onnx.md)

+- [export ONNX to TRT](docs/en/tutorials/onnx2tensorrt.md)

+- [weight initialization](docs/en/tutorials/init_cfg.md)

+- [how to xxx](docs/en/tutorials/how_to.md)

+

+## Overview of Benchmark and Model Zoo

+

+Results and models are available in the [model zoo](docs/en/model_zoo.md).

+

+
+Architectures: Object Detection, Instance Segmentation, Panoptic Segmentation, Other (Contrastive Learning, Distillation).
+
+Components: Backbones, Necks, Loss, Common.
+

+Some other methods are also supported in [projects using dbnet_detection](./docs/en/projects.md).

+

+## FAQ

+

+Please refer to [FAQ](docs/en/faq.md) for frequently asked questions.

+

+## Contributing

+

+We appreciate all contributions to improve dbnet_detection. Ongoing projects can be found in our [GitHub Projects](https://github.com/open-mmlab/dbnet_detection/projects). Community users are welcome to participate in these projects. Please refer to [CONTRIBUTING.md](.github/CONTRIBUTING.md) for the contributing guidelines.

+

+## Acknowledgement

+

+dbnet_detection is an open source project with contributions from researchers and engineers at various colleges and companies. We appreciate all the contributors who implement their methods or add new features, as well as users who give valuable feedback.
+We hope the toolbox and benchmark can serve the growing research community by providing a flexible toolkit to reimplement existing methods and develop new detectors.

+

+## Citation

+

+If you use this toolbox or benchmark in your research, please cite this project.

+

+```

+@article{dbnet_detection,

+ title = {{dbnet_detection}: Open MMLab Detection Toolbox and Benchmark},

+ author = {Chen, Kai and Wang, Jiaqi and Pang, Jiangmiao and Cao, Yuhang and

+ Xiong, Yu and Li, Xiaoxiao and Sun, Shuyang and Feng, Wansen and

+ Liu, Ziwei and Xu, Jiarui and Zhang, Zheng and Cheng, Dazhi and

+ Zhu, Chenchen and Cheng, Tianheng and Zhao, Qijie and Li, Buyu and

+ Lu, Xin and Zhu, Rui and Wu, Yue and Dai, Jifeng and Wang, Jingdong

+ and Shi, Jianping and Ouyang, Wanli and Loy, Chen Change and Lin, Dahua},

+ journal= {arXiv preprint arXiv:1906.07155},

+ year={2019}

+}

+```

+

+## License

+

+This project is released under the [Apache 2.0 license](LICENSE).

+

+## Projects in OpenMMLab

+

+- [DBNET_CV](https://github.com/open-mmlab/dbnet_cv): OpenMMLab foundational library for computer vision.

+- [MIM](https://github.com/open-mmlab/mim): MIM installs OpenMMLab packages.

+- [MMClassification](https://github.com/open-mmlab/mmclassification): OpenMMLab image classification toolbox and benchmark.

+- [dbnet_detection](https://github.com/open-mmlab/dbnet_detection): OpenMMLab detection toolbox and benchmark.

+- [dbnet_detection3D](https://github.com/open-mmlab/dbnet_detection3d): OpenMMLab's next-generation platform for general 3D object detection.

+- [MMRotate](https://github.com/open-mmlab/mmrotate): OpenMMLab rotated object detection toolbox and benchmark.

+- [MMSegmentation](https://github.com/open-mmlab/mmsegmentation): OpenMMLab semantic segmentation toolbox and benchmark.

+- [MMOCR](https://github.com/open-mmlab/mmocr): OpenMMLab text detection, recognition, and understanding toolbox.

+- [MMPose](https://github.com/open-mmlab/mmpose): OpenMMLab pose estimation toolbox and benchmark.

+- [MMHuman3D](https://github.com/open-mmlab/mmhuman3d): OpenMMLab 3D human parametric model toolbox and benchmark.

+- [MMSelfSup](https://github.com/open-mmlab/mmselfsup): OpenMMLab self-supervised learning toolbox and benchmark.

+- [MMRazor](https://github.com/open-mmlab/mmrazor): OpenMMLab model compression toolbox and benchmark.

+- [MMFewShot](https://github.com/open-mmlab/mmfewshot): OpenMMLab few-shot learning toolbox and benchmark.

+- [MMAction2](https://github.com/open-mmlab/mmaction2): OpenMMLab's next-generation action understanding toolbox and benchmark.

+- [MMTracking](https://github.com/open-mmlab/mmtracking): OpenMMLab video perception toolbox and benchmark.

+- [MMFlow](https://github.com/open-mmlab/mmflow): OpenMMLab optical flow toolbox and benchmark.

+- [MMEditing](https://github.com/open-mmlab/mmediting): OpenMMLab image and video editing toolbox.

+- [MMGeneration](https://github.com/open-mmlab/mmgeneration): OpenMMLab image and video generative models toolbox.

+- [MMDeploy](https://github.com/open-mmlab/mmdeploy): OpenMMLab model deployment framework.

diff --git a/cv/ocr/dbnet/pytorch/EGG-INFO/SOURCES.txt b/cv/ocr/dbnet/pytorch/EGG-INFO/SOURCES.txt

new file mode 100755

index 0000000000000000000000000000000000000000..4f42864d415c099c85025f0159e89eb707bdcd5e

--- /dev/null

+++ b/cv/ocr/dbnet/pytorch/EGG-INFO/SOURCES.txt

@@ -0,0 +1,62 @@

+README.md

+setup.py

+dbnet_det/__init__.py

+dbnet_det/version.py

+dbnet_det.egg-info/PKG-INFO

+dbnet_det.egg-info/SOURCES.txt

+dbnet_det.egg-info/dependency_links.txt

+dbnet_det.egg-info/not-zip-safe

+dbnet_det.egg-info/requires.txt

+dbnet_det.egg-info/top_level.txt

+dbnet_det/core/__init__.py

+dbnet_det/core/bbox/__init__.py

+dbnet_det/core/bbox/transforms.py

+dbnet_det/core/evaluation/__init__.py

+dbnet_det/core/evaluation/bbox_overlaps.py

+dbnet_det/core/evaluation/class_names.py

+dbnet_det/core/evaluation/eval_hooks.py

+dbnet_det/core/evaluation/mean_ap.py

+dbnet_det/core/evaluation/panoptic_utils.py

+dbnet_det/core/evaluation/recall.py

+dbnet_det/core/mask/__init__.py

+dbnet_det/core/mask/structures.py

+dbnet_det/core/mask/utils.py

+dbnet_det/core/utils/__init__.py

+dbnet_det/core/utils/dist_utils.py

+dbnet_det/core/utils/misc.py

+dbnet_det/core/visualization/__init__.py

+dbnet_det/core/visualization/image.py

+dbnet_det/core/visualization/palette.py

+dbnet_det/datasets/__init__.py

+dbnet_det/datasets/builder.py

+dbnet_det/datasets/coco.py

+dbnet_det/datasets/custom.py

+dbnet_det/datasets/dataset_wrappers.py

+dbnet_det/datasets/utils.py

+dbnet_det/datasets/api_wrappers/__init__.py

+dbnet_det/datasets/api_wrappers/coco_api.py

+dbnet_det/datasets/api_wrappers/panoptic_evaluation.py

+dbnet_det/datasets/pipelines/__init__.py

+dbnet_det/datasets/pipelines/compose.py

+dbnet_det/datasets/pipelines/formatting.py

+dbnet_det/datasets/pipelines/loading.py

+dbnet_det/datasets/pipelines/test_time_aug.py

+dbnet_det/datasets/pipelines/transforms.py

+dbnet_det/datasets/samplers/__init__.py

+dbnet_det/datasets/samplers/class_aware_sampler.py

+dbnet_det/datasets/samplers/distributed_sampler.py

+dbnet_det/datasets/samplers/group_sampler.py

+dbnet_det/datasets/samplers/infinite_sampler.py

+dbnet_det/models/__init__.py

+dbnet_det/models/builder.py

+dbnet_det/models/backbones/__init__.py

+dbnet_det/models/backbones/resnet.py

+dbnet_det/models/detectors/__init__.py

+dbnet_det/models/detectors/base.py

+dbnet_det/models/detectors/single_stage.py

+dbnet_det/models/utils/__init__.py

+dbnet_det/models/utils/res_layer.py

+dbnet_det/utils/__init__.py

+dbnet_det/utils/logger.py

+dbnet_det/utils/profiling.py

+dbnet_det/utils/util_distribution.py

\ No newline at end of file

diff --git a/cv/ocr/dbnet/pytorch/EGG-INFO/dependency_links.txt b/cv/ocr/dbnet/pytorch/EGG-INFO/dependency_links.txt

new file mode 100755

index 0000000000000000000000000000000000000000..8b137891791fe96927ad78e64b0aad7bded08bdc

--- /dev/null

+++ b/cv/ocr/dbnet/pytorch/EGG-INFO/dependency_links.txt

@@ -0,0 +1 @@

+

diff --git a/cv/ocr/dbnet/pytorch/EGG-INFO/not-zip-safe b/cv/ocr/dbnet/pytorch/EGG-INFO/not-zip-safe

new file mode 100755

index 0000000000000000000000000000000000000000..8b137891791fe96927ad78e64b0aad7bded08bdc

--- /dev/null

+++ b/cv/ocr/dbnet/pytorch/EGG-INFO/not-zip-safe

@@ -0,0 +1 @@

+

diff --git a/cv/ocr/dbnet/pytorch/EGG-INFO/requires.txt b/cv/ocr/dbnet/pytorch/EGG-INFO/requires.txt

new file mode 100755

index 0000000000000000000000000000000000000000..22c49defa6c6641da95b0ac68789965cdac53292

--- /dev/null

+++ b/cv/ocr/dbnet/pytorch/EGG-INFO/requires.txt

@@ -0,0 +1,59 @@

+matplotlib

+numpy

+pycocotools

+six

+terminaltables

+

+[all]

+cython

+numpy

+cityscapesscripts

+imagecorruptions

+scipy

+sklearn

+timm

+matplotlib

+pycocotools

+six

+terminaltables

+asynctest

+codecov

+flake8

+interrogate

+isort==4.3.21

+kwarray

+mmtrack

+onnx==1.7.0

+onnxruntime>=1.8.0

+protobuf<=3.20.1

+pytest

+ubelt

+xdoctest>=0.10.0

+yapf

+

+[build]

+cython

+numpy

+

+[optional]

+cityscapesscripts

+imagecorruptions

+scipy

+sklearn

+timm

+

+[tests]

+asynctest

+codecov

+flake8

+interrogate

+isort==4.3.21

+kwarray

+mmtrack

+onnx==1.7.0

+onnxruntime>=1.8.0

+protobuf<=3.20.1

+pytest

+ubelt

+xdoctest>=0.10.0

+yapf

diff --git a/cv/ocr/dbnet/pytorch/EGG-INFO/top_level.txt b/cv/ocr/dbnet/pytorch/EGG-INFO/top_level.txt

new file mode 100755

index 0000000000000000000000000000000000000000..2d2f43c40746f3804a679a386877c3d40e319f48

--- /dev/null

+++ b/cv/ocr/dbnet/pytorch/EGG-INFO/top_level.txt

@@ -0,0 +1 @@

+dbnet_det

diff --git a/cv/ocr/dbnet/pytorch/LICENSE b/cv/ocr/dbnet/pytorch/LICENSE

new file mode 100755

index 0000000000000000000000000000000000000000..3076a4378396deea4db311adbe1fbfd8b8b05920

--- /dev/null

+++ b/cv/ocr/dbnet/pytorch/LICENSE

@@ -0,0 +1,203 @@

+Copyright (c) MMOCR Authors. All rights reserved.

+

+ Apache License

+ Version 2.0, January 2004

+ http://www.apache.org/licenses/

+

+ TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

+

+ 1. Definitions.

+

+ "License" shall mean the terms and conditions for use, reproduction,

+ and distribution as defined by Sections 1 through 9 of this document.

+

+ "Licensor" shall mean the copyright owner or entity authorized by

+ the copyright owner that is granting the License.

+

+ "Legal Entity" shall mean the union of the acting entity and all

+ other entities that control, are controlled by, or are under common

+ control with that entity. For the purposes of this definition,

+ "control" means (i) the power, direct or indirect, to cause the

+ direction or management of such entity, whether by contract or

+ otherwise, or (ii) ownership of fifty percent (50%) or more of the

+ outstanding shares, or (iii) beneficial ownership of such entity.

+

+ "You" (or "Your") shall mean an individual or Legal Entity

+ exercising permissions granted by this License.

+

+ "Source" form shall mean the preferred form for making modifications,

+ including but not limited to software source code, documentation

+ source, and configuration files.

+

+ "Object" form shall mean any form resulting from mechanical

+ transformation or translation of a Source form, including but

+ not limited to compiled object code, generated documentation,

+ and conversions to other media types.

+

+ "Work" shall mean the work of authorship, whether in Source or

+ Object form, made available under the License, as indicated by a

+ copyright notice that is included in or attached to the work

+ (an example is provided in the Appendix below).

+

+ "Derivative Works" shall mean any work, whether in Source or Object

+ form, that is based on (or derived from) the Work and for which the

+ editorial revisions, annotations, elaborations, or other modifications

+ represent, as a whole, an original work of authorship. For the purposes

+ of this License, Derivative Works shall not include works that remain

+ separable from, or merely link (or bind by name) to the interfaces of,

+ the Work and Derivative Works thereof.

+

+ "Contribution" shall mean any work of authorship, including

+ the original version of the Work and any modifications or additions

+ to that Work or Derivative Works thereof, that is intentionally

+ submitted to Licensor for inclusion in the Work by the copyright owner

+ or by an individual or Legal Entity authorized to submit on behalf of

+ the copyright owner. For the purposes of this definition, "submitted"

+ means any form of electronic, verbal, or written communication sent

+ to the Licensor or its representatives, including but not limited to

+ communication on electronic mailing lists, source code control systems,

+ and issue tracking systems that are managed by, or on behalf of, the

+ Licensor for the purpose of discussing and improving the Work, but

+ excluding communication that is conspicuously marked or otherwise

+ designated in writing by the copyright owner as "Not a Contribution."

+

+ "Contributor" shall mean Licensor and any individual or Legal Entity

+ on behalf of whom a Contribution has been received by Licensor and

+ subsequently incorporated within the Work.

+

+ 2. Grant of Copyright License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ copyright license to reproduce, prepare Derivative Works of,

+ publicly display, publicly perform, sublicense, and distribute the

+ Work and such Derivative Works in Source or Object form.

+

+ 3. Grant of Patent License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ (except as stated in this section) patent license to make, have made,

+ use, offer to sell, sell, import, and otherwise transfer the Work,

+ where such license applies only to those patent claims licensable

+ by such Contributor that are necessarily infringed by their

+ Contribution(s) alone or by combination of their Contribution(s)

+ with the Work to which such Contribution(s) was submitted. If You

+ institute patent litigation against any entity (including a

+ cross-claim or counterclaim in a lawsuit) alleging that the Work

+ or a Contribution incorporated within the Work constitutes direct

+ or contributory patent infringement, then any patent licenses

+ granted to You under this License for that Work shall terminate

+ as of the date such litigation is filed.

+

+ 4. Redistribution. You may reproduce and distribute copies of the

+ Work or Derivative Works thereof in any medium, with or without

+ modifications, and in Source or Object form, provided that You

+ meet the following conditions:

+

+ (a) You must give any other recipients of the Work or

+ Derivative Works a copy of this License; and

+

+ (b) You must cause any modified files to carry prominent notices

+ stating that You changed the files; and

+

+ (c) You must retain, in the Source form of any Derivative Works

+ that You distribute, all copyright, patent, trademark, and

+ attribution notices from the Source form of the Work,

+ excluding those notices that do not pertain to any part of

+ the Derivative Works; and

+

+ (d) If the Work includes a "NOTICE" text file as part of its

+ distribution, then any Derivative Works that You distribute must

+ include a readable copy of the attribution notices contained

+ within such NOTICE file, excluding those notices that do not

+ pertain to any part of the Derivative Works, in at least one

+ of the following places: within a NOTICE text file distributed

+ as part of the Derivative Works; within the Source form or

+ documentation, if provided along with the Derivative Works; or,

+ within a display generated by the Derivative Works, if and

+ wherever such third-party notices normally appear. The contents

+ of the NOTICE file are for informational purposes only and

+ do not modify the License. You may add Your own attribution

+ notices within Derivative Works that You distribute, alongside

+ or as an addendum to the NOTICE text from the Work, provided

+ that such additional attribution notices cannot be construed

+ as modifying the License.

+

+ You may add Your own copyright statement to Your modifications and

+ may provide additional or different license terms and conditions

+ for use, reproduction, or distribution of Your modifications, or

+ for any such Derivative Works as a whole, provided Your use,

+ reproduction, and distribution of the Work otherwise complies with

+ the conditions stated in this License.

+

+ 5. Submission of Contributions. Unless You explicitly state otherwise,

+ any Contribution intentionally submitted for inclusion in the Work

+ by You to the Licensor shall be under the terms and conditions of

+ this License, without any additional terms or conditions.

+ Notwithstanding the above, nothing herein shall supersede or modify

+ the terms of any separate license agreement you may have executed

+ with Licensor regarding such Contributions.

+

+ 6. Trademarks. This License does not grant permission to use the trade

+ names, trademarks, service marks, or product names of the Licensor,

+ except as required for reasonable and customary use in describing the

+ origin of the Work and reproducing the content of the NOTICE file.

+

+ 7. Disclaimer of Warranty. Unless required by applicable law or

+ agreed to in writing, Licensor provides the Work (and each

+ Contributor provides its Contributions) on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

+ implied, including, without limitation, any warranties or conditions

+ of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

+ PARTICULAR PURPOSE. You are solely responsible for determining the

+ appropriateness of using or redistributing the Work and assume any

+ risks associated with Your exercise of permissions under this License.

+

+ 8. Limitation of Liability. In no event and under no legal theory,

+ whether in tort (including negligence), contract, or otherwise,

+ unless required by applicable law (such as deliberate and grossly

+ negligent acts) or agreed to in writing, shall any Contributor be

+ liable to You for damages, including any direct, indirect, special,

+ incidental, or consequential damages of any character arising as a

+ result of this License or out of the use or inability to use the

+ Work (including but not limited to damages for loss of goodwill,

+ work stoppage, computer failure or malfunction, or any and all

+ other commercial damages or losses), even if such Contributor

+ has been advised of the possibility of such damages.

+

+ 9. Accepting Warranty or Additional Liability. While redistributing

+ the Work or Derivative Works thereof, You may choose to offer,

+ and charge a fee for, acceptance of support, warranty, indemnity,

+ or other liability obligations and/or rights consistent with this

+ License. However, in accepting such obligations, You may act only

+ on Your own behalf and on Your sole responsibility, not on behalf

+ of any other Contributor, and only if You agree to indemnify,

+ defend, and hold each Contributor harmless for any liability

+ incurred by, or claims asserted against, such Contributor by reason

+ of your accepting any such warranty or additional liability.

+

+ END OF TERMS AND CONDITIONS

+

+ APPENDIX: How to apply the Apache License to your work.

+

+ To apply the Apache License to your work, attach the following

+ boilerplate notice, with the fields enclosed by brackets "[]"

+ replaced with your own identifying information. (Don't include

+ the brackets!) The text should be enclosed in the appropriate

+ comment syntax for the file format. We also recommend that a

+ file or class name and description of purpose be included on the

+ same "printed page" as the copyright notice for easier

+ identification within third-party archives.

+

+ Copyright 2021 MMOCR Authors. All rights reserved.

+

+ Licensed under the Apache License, Version 2.0 (the "License");

+ you may not use this file except in compliance with the License.

+ You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+ Unless required by applicable law or agreed to in writing, software

+ distributed under the License is distributed on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ See the License for the specific language governing permissions and

+ limitations under the License.

diff --git a/cv/ocr/dbnet/pytorch/README.md b/cv/ocr/dbnet/pytorch/README.md

new file mode 100755

index 0000000000000000000000000000000000000000..d92a6cfcab2e892ede674e6526ed1daab5f844ea

--- /dev/null

+++ b/cv/ocr/dbnet/pytorch/README.md

@@ -0,0 +1,69 @@

+# DBNet

+## Model description

+Segmentation-based methods have recently become popular in scene text detection, because segmentation results can describe text of various shapes, such as curved text, more accurately. However, segmentation-based detection depends on a binarization post-processing step that converts the probability maps produced by the segmentation network into bounding boxes or regions of text. DBNet introduces a module named Differentiable Binarization (DB), which performs the binarization inside the segmentation network. When optimized together with the DB module, the segmentation network can adaptively set the binarization thresholds, which both simplifies post-processing and improves text detection performance. Using a simple segmentation network, the authors validate the improvements from DB on five benchmark datasets, where it consistently achieves state-of-the-art results in both detection accuracy and speed. With a lightweight backbone in particular, the gains from DB are large enough to allow a good tradeoff between detection accuracy and efficiency.
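The DB step described above replaces the hard threshold with a steep sigmoid. In the DBNet paper the approximate binary map is `B = 1 / (1 + exp(-k * (P - T)))`, where `P` is the probability map, `T` is the learned threshold map, and `k` is an amplifying factor set to 50. The sketch below applies that formula elementwise to small nested lists in pure Python; the function names are illustrative only and are not part of this repository.

```python
import math


def differentiable_binarization(p, t, k=50.0):
    """Approximate binary map B = 1 / (1 + exp(-k * (P - T))), elementwise.

    p: probability map (nested lists), t: threshold map of the same shape,
    k: amplifying factor (the DBNet paper uses 50).
    """
    return [
        [1.0 / (1.0 + math.exp(-k * (pi - ti))) for pi, ti in zip(p_row, t_row)]
        for p_row, t_row in zip(p, t)
    ]


def hard_binarization(p, t):
    """Step function with the same threshold map, for comparison (not differentiable)."""
    return [
        [1.0 if pi >= ti else 0.0 for pi, ti in zip(p_row, t_row)]
        for p_row, t_row in zip(p, t)
    ]


prob = [[0.9, 0.2, 0.4]]
thresh = [[0.3, 0.3, 0.4]]
print(differentiable_binarization(prob, thresh))  # close to the step output, but smooth
print(hard_binarization(prob, thresh))            # [[1.0, 0.0, 1.0]]
```

Because the sigmoid has a nonzero gradient everywhere, the loss on `B` can update both `P` and `T`, which is what lets the threshold map be learned instead of fixed.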

+## Preparing datasets

+

+```shell

+$ mkdir data

+$ cd data

+```

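+ICDAR 2015
+
+Download the following files from the [ICDAR 2015 download page](https://rrc.cvc.uab.es/?ch=4&com=downloads): `ch4_training_images.zip`, `ch4_test_images.zip`, `ch4_training_localization_transcription_gt.zip`, and `Challenge4_Test_Task1_GT.zip`. Extract each archive into a directory with the same name (without the `.zip` suffix).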

+

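+```shell
+mkdir -p icdar2015 && cd icdar2015
+mkdir -p imgs annotations
+
+# The commands below assume each downloaded zip has already been extracted
+# into a directory of the same name inside data/icdar2015.
+mv ch4_training_images imgs/training
+mv ch4_test_images imgs/test
+
+mv ch4_training_localization_transcription_gt annotations/training
+mv Challenge4_Test_Task1_GT annotations/test
+```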

+Then download [instances_training.json](https://download.openmmlab.com/mmocr/data/icdar2015/instances_training.json) and [instances_test.json](https://download.openmmlab.com/mmocr/data/icdar2015/instances_test.json) and place both files in `data/icdar2015`, so that the directory looks like this:

+

+```text
+icdar2015/
+├── imgs
+│   ├── test
+│   └── training
+├── instances_test.json
+└── instances_training.json
+```
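Before training, it can help to confirm that the annotation files match the image directory. The sketch below assumes the two `instances_*.json` files follow the COCO instance format (top-level `images` and `annotations` lists) and that each `file_name` is relative to `data/icdar2015/imgs`, the `img_prefix` used by the dataset config; both are assumptions, not something this README states. The official ICDAR 2015 split has 1,000 training and 500 test images, which gives a rough expected count.

```python
import json
import os
from collections import Counter


def summarize_instances(coco):
    """Count images and text instances in a COCO-style annotation dict."""
    images = coco.get("images", [])
    annotations = coco.get("annotations", [])
    per_image = Counter(ann["image_id"] for ann in annotations)
    empty = [img["id"] for img in images if per_image[img["id"]] == 0]
    return {
        "num_images": len(images),
        "num_instances": len(annotations),
        "images_without_instances": len(empty),
    }


def missing_image_files(coco, img_prefix):
    """Return the file_name entries that do not exist under img_prefix."""
    return [
        img["file_name"]
        for img in coco.get("images", [])
        if not os.path.isfile(os.path.join(img_prefix, img["file_name"]))
    ]


def check_split(ann_file, img_prefix):
    """Load one annotation file and report counts plus the number of missing images."""
    with open(ann_file, encoding="utf-8") as f:
        coco = json.load(f)
    report = summarize_instances(coco)
    report["missing_files"] = len(missing_image_files(coco, img_prefix))
    return report


# Hypothetical usage from the repository root:
# print(check_split("data/icdar2015/instances_training.json", "data/icdar2015/imgs"))
```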

+### Build Extension

+

+```shell

+$ DBNET_CV_WITH_OPS=1 python3 setup.py build && cp build/lib.linux*/dbnet_cv/_ext.cpython* dbnet_cv

+```

+### Install packages

+

+```shell

+$ pip3 install -r requirements.txt

+```

+

+### Training on a single card

+```shell

+$ python3 train.py configs/textdet/dbnet/dbnet_mobilenetv3_fpnc_1200e_icdar2015.py

+```

+

+### Training on multiple cards

+```shell

+$ bash dist_train.sh configs/textdet/dbnet/dbnet_mobilenetv3_fpnc_1200e_icdar2015.py 8

+```

+

+## Results on BI-V100

+

+| Approach | GPUs | Train mem | Train FPS |
+| :------: | :--------: | :-------: | :-------: |
+| DBNet | BI-V100 x8 | 5426 | 54.375 |
+
+| hmean-iou recall | hmean-iou precision | hmean-iou hmean |
+| :--------------: | :-----------------: | :-------------: |
+| 0.7111 | 0.8062 | 0.7557 |
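The hmean column is the harmonic mean (F-score) of the precision and recall columns, where precision and recall come from IoU-based matching of predicted and ground-truth text regions. A quick check that the three reported numbers are consistent with each other:

```python
def hmean(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


print(round(hmean(0.8062, 0.7111), 4))  # prints 0.7557
```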

+

+## Reference

+https://github.com/open-mmlab/mmocr

diff --git a/cv/ocr/dbnet/pytorch/configs/_base_/default_runtime.py b/cv/ocr/dbnet/pytorch/configs/_base_/default_runtime.py

new file mode 100755

index 0000000000000000000000000000000000000000..de7f9650ce73ba7ca633652b50df021b67498362

--- /dev/null

+++ b/cv/ocr/dbnet/pytorch/configs/_base_/default_runtime.py

@@ -0,0 +1,17 @@

+# yapf:disable

+log_config = dict(

+ interval=5,

+ hooks=[

+ dict(type='TextLoggerHook')

+ ])

+# yapf:enable

+dist_params = dict(backend='nccl')

+log_level = 'INFO'

+load_from = None

+resume_from = None

+workflow = [('train', 1)]

+

+# disable opencv multithreading to avoid system being overloaded

+opencv_num_threads = 0

+# set multi-process start method as `fork` to speed up the training

+mp_start_method = 'fork'
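Base files like `default_runtime.py` are typically pulled into a top-level config through MMCV-style `_base_` inheritance, where the child config overrides individual keys. The sketch below is a simplified, hypothetical re-implementation of that merge rule (it is not the `dbnet_cv` or MMCV code): nested dicts are merged key by key, other values are replaced, and a child dict carrying `_delete_=True` replaces the base dict wholesale.

```python
import copy


def merge_cfg(base, child):
    """Recursively merge `child` into a deep copy of `base` (simplified sketch)."""
    merged = copy.deepcopy(base)
    for key, value in child.items():
        if isinstance(value, dict):
            value = dict(value)  # shallow copy so popping `_delete_` leaves `child` intact
            replace = value.pop("_delete_", False)
            if not replace and isinstance(merged.get(key), dict):
                merged[key] = merge_cfg(merged[key], value)
                continue
        merged[key] = copy.deepcopy(value)
    return merged


base = dict(
    log_config=dict(interval=5, hooks=[dict(type="TextLoggerHook")]),
    log_level="INFO",
    load_from=None,
)
# Hypothetical child config: log less often and start from a checkpoint.
child = dict(log_config=dict(interval=20), load_from="work_dirs/latest.pth")
print(merge_cfg(base, child))
```

Only `interval` changes inside `log_config`; the `hooks` list from the base file survives because the two dicts are merged rather than replaced.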

diff --git a/cv/ocr/dbnet/pytorch/configs/_base_/det_datasets/icdar2015.py b/cv/ocr/dbnet/pytorch/configs/_base_/det_datasets/icdar2015.py

new file mode 100755

index 0000000000000000000000000000000000000000..f711c06dce76d53b8737288c8de318e6f90ce585

--- /dev/null

+++ b/cv/ocr/dbnet/pytorch/configs/_base_/det_datasets/icdar2015.py

@@ -0,0 +1,18 @@

+dataset_type = 'IcdarDataset'

+data_root = 'data/icdar2015'

+

+train = dict(

+ type=dataset_type,

+ ann_file=f'{data_root}/instances_training.json',

+ img_prefix=f'{data_root}/imgs',

+ pipeline=None)

+

+test = dict(

+ type=dataset_type,

+ ann_file=f'{data_root}/instances_test.json',

+ img_prefix=f'{data_root}/imgs',

+ pipeline=None)

+

+train_list = [train]

+

+test_list = [test]
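Both dataset entries leave `pipeline=None`, so the pipeline must be supplied by the top-level config. In upstream MMOCR this is done by wrapping `train_list`/`test_list` in a `UniformConcatDataset` that gives every dataset with `pipeline=None` a shared pipeline; whether this repository does the same is an assumption. A minimal sketch of that fill-in rule, with a hypothetical helper name:

```python
import copy


def fill_pipelines(dataset_cfgs, pipeline):
    """Return copies of dataset_cfgs where every `pipeline=None` gets the shared pipeline."""
    filled = []
    for cfg in dataset_cfgs:
        cfg = copy.deepcopy(cfg)
        if cfg.get("pipeline") is None:
            cfg["pipeline"] = copy.deepcopy(pipeline)
        filled.append(cfg)
    return filled


train = dict(
    type="IcdarDataset",
    ann_file="data/icdar2015/instances_training.json",
    img_prefix="data/icdar2015/imgs",
    pipeline=None,
)
shared_pipeline = [dict(type="LoadImageFromFile")]  # placeholder transform list
print(fill_pipelines([train], shared_pipeline))
```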

diff --git a/cv/ocr/dbnet/pytorch/configs/_base_/det_models/dbnet_mobilenetv3_fpnc.py b/cv/ocr/dbnet/pytorch/configs/_base_/det_models/dbnet_mobilenetv3_fpnc.py

new file mode 100755

index 0000000000000000000000000000000000000000..e6bff598fce56decf6d5e92596d67165035b09f8

--- /dev/null

+++ b/cv/ocr/dbnet/pytorch/configs/_base_/det_models/dbnet_mobilenetv3_fpnc.py

@@ -0,0 +1,32 @@

+model = dict(

+ type='DBNet',

+ backbone=dict(

+ type='dbnet_det.MobileNetV3',

+ arch='large',

+ # num_stages=3,

+ out_indices=(3, 6, 12, 16),

+ norm_cfg=dict(type='BN', requires_grad=True),

+ init_cfg=dict(type='Pretrained', checkpoint='https://download.openmmlab.com/pretrain/third_party/mobilenet_v3_large-bc2c3fd3.pth')

+ ),

+ # backbone=dict(

+ # type='dbnet_det.ResNet',

+ # depth=18,

+ # num_stages=4,

+ # out_indices=(0, 1, 2, 3),

+ # frozen_stages=-1,

+ # norm_cfg=dict(type='BN', requires_grad=True),

+ # # init_cfg=dict(type='Pretrained', checkpoint='torchvision://resnet18'),

+ # norm_eval=False,

+ # style='caffe'),

+ neck=dict(

+ type='FPNC', in_channels=[24, 40, 112, 960], lateral_channels=256),

+ bbox_head=dict(

+ type='DBHead',

+ in_channels=256,

+ loss=dict(type='DBLoss', alpha=5.0, beta=10.0, bbce_loss=False),

+ postprocessor=dict(type='DBPostprocessor', text_repr_type='quad')),

+ train_cfg=None,

+ test_cfg=None)

+

+

+
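The neck's `in_channels=[24, 40, 112, 960]` must match the channels of the backbone layers selected by `out_indices=(3, 6, 12, 16)`. The sketch below assumes `dbnet_det.MobileNetV3` follows the standard MobileNetV3-large layer table (as in torchvision and MMClassification): a stem at index 0, 15 bottleneck blocks at indices 1 to 15, and a final 1x1 conv with 960 channels at index 16. Under that assumption the chosen indices are the last layer at each of the strides 4, 8, 16 and 32:

```python
# (output channels, stride) of the 15 bottleneck blocks of MobileNetV3-large,
# assumed to follow the standard architecture table.
LARGE_BNECK = [(16, 1), (24, 2), (24, 1), (40, 2), (40, 1), (40, 1),
               (80, 2), (80, 1), (80, 1), (80, 1), (112, 1), (112, 1),
               (160, 2), (160, 1), (160, 1)]


def layer_table() -> dict:
    """Map layer index -> (output channels, cumulative stride)."""
    stride = 2
    table = {0: (16, stride)}  # stem: 3x3 conv with stride 2
    for i, (channels, s) in enumerate(LARGE_BNECK, start=1):
        stride *= s
        table[i] = (channels, stride)
    table[len(LARGE_BNECK) + 1] = (960, stride)  # final 1x1 conv, no downsampling
    return table


def fpn_inputs(out_indices):
    """Channels and strides of the layers fed to the FPNC neck."""
    table = layer_table()
    return [table[i][0] for i in out_indices], [table[i][1] for i in out_indices]


if __name__ == '__main__':
    print(fpn_inputs((3, 6, 12, 16)))  # ([24, 40, 112, 960], [4, 8, 16, 32])
```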

diff --git a/cv/ocr/dbnet/pytorch/configs/_base_/det_models/dbnet_r18_fpnc.py b/cv/ocr/dbnet/pytorch/configs/_base_/det_models/dbnet_r18_fpnc.py

new file mode 100755

index 0000000000000000000000000000000000000000..b26391f4d4b2eb34beae81cce56c2816979bc730

--- /dev/null

+++ b/cv/ocr/dbnet/pytorch/configs/_base_/det_models/dbnet_r18_fpnc.py

@@ -0,0 +1,31 @@

+model = dict(

+ type='DBNet',

+ backbone=dict(

+ type='dbnet_det.ResNet',

+ depth=18,

+ num_stages=4,

+ out_indices=(0, 1, 2, 3),

+ frozen_stages=-1,

+ norm_cfg=dict(type='BN', requires_grad=True),

+ # init_cfg=dict(type='Pretrained', checkpoint='torchvision://resnet18'),

+ norm_eval=False,

+ style='caffe'),

+ neck=dict(

+ type='FPNC', in_channels=[64, 128, 256, 512], lateral_channels=256),

+ bbox_head=dict(

+ type='DBHead',

+ in_channels=256,

+ loss=dict(type='DBLoss', alpha=5.0, beta=10.0, bbce_loss=False),

+ postprocessor=dict(type='DBPostprocessor', text_repr_type='quad')),

+ train_cfg=None,

+ test_cfg=None)

+

+

+

+# backbone=dict(

+# type='MobileNetV3',

+# arch='small',

+# out_indices=(0, 1, 12),

+# norm_cfg=dict(type='BN', requires_grad=True),

+# init_cfg=dict(type='Pretrained', checkpoint='open-mmlab://contrib/mobilenet_v3_small')

+# ),

\ No newline at end of file

diff --git a/cv/ocr/dbnet/pytorch/configs/_base_/det_models/dbnet_r50dcnv2_fpnc.py b/cv/ocr/dbnet/pytorch/configs/_base_/det_models/dbnet_r50dcnv2_fpnc.py

new file mode 100755

index 0000000000000000000000000000000000000000..32b09f507a8aaabf16f86d9569a22090578084e8

--- /dev/null

+++ b/cv/ocr/dbnet/pytorch/configs/_base_/det_models/dbnet_r50dcnv2_fpnc.py

@@ -0,0 +1,23 @@

+model = dict(

+ type='DBNet',

+ backbone=dict(

+ type='dbnet_det.ResNet',

+ depth=50,

+ num_stages=4,

+ out_indices=(0, 1, 2, 3),

+ frozen_stages=-1,

+ norm_cfg=dict(type='BN', requires_grad=True),

+ norm_eval=False,

+ style='pytorch',

+ dcn=dict(type='DCNv2', deform_groups=1, fallback_on_stride=False),

+ # init_cfg=dict(type='Pretrained', checkpoint='torchvision://resnet50'),

+ stage_with_dcn=(False, True, True, True)),

+ neck=dict(

+ type='FPNC', in_channels=[256, 512, 1024, 2048], lateral_channels=256),

+ bbox_head=dict(

+ type='DBHead',

+ in_channels=256,

+ loss=dict(type='DBLoss', alpha=5.0, beta=10.0, bbce_loss=True),

+ postprocessor=dict(type='DBPostprocessor', text_repr_type='quad')),

+ train_cfg=None,

+ test_cfg=None)
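The ResNet variants feed all four stages (`out_indices=(0, 1, 2, 3)`) to FPNC, so `in_channels` follows the standard ResNet widths: 64 * 2^i per stage, times the block expansion (1 for the BasicBlock of ResNet-18/34, 4 for the Bottleneck of ResNet-50 and deeper). Deformable convolutions in `stage_with_dcn` do not change these widths. A small sketch to derive the neck inputs for both configs; the helper name is hypothetical:

```python
# Block expansion of the standard ResNet variants.
BLOCK_EXPANSION = {18: 1, 34: 1, 50: 4, 101: 4, 152: 4}


def resnet_stage_channels(depth: int, base_channels: int = 64) -> list:
    """Output channels of stages 1-4: base_channels * 2**i * expansion."""
    if depth not in BLOCK_EXPANSION:
        raise ValueError(f'unsupported ResNet depth: {depth}')
    expansion = BLOCK_EXPANSION[depth]
    return [base_channels * 2 ** i * expansion for i in range(4)]


if __name__ == '__main__':
    print(resnet_stage_channels(18))  # [64, 128, 256, 512] -> dbnet_r18_fpnc.py
    print(resnet_stage_channels(50))  # [256, 512, 1024, 2048] -> dbnet_r50dcnv2_fpnc.py
```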

diff --git a/cv/ocr/dbnet/pytorch/configs/_base_/det_pipelines/dbnet_pipeline.py b/cv/ocr/dbnet/pytorch/configs/_base_/det_pipelines/dbnet_pipeline.py

new file mode 100755

index 0000000000000000000000000000000000000000..aeb944871ac6d12bf5fc9a694076942afa63a7d8

--- /dev/null

+++ b/cv/ocr/dbnet/pytorch/configs/_base_/det_pipelines/dbnet_pipeline.py

@@ -0,0 +1,88 @@

+img_norm_cfg = dict(

+ mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)

+

+train_pipeline_r18 = [

+ dict(type='LoadImageFromFile', color_type='color_ignore_orientation'),

+ dict(

+ type='LoadTextAnnotations',

+ with_bbox=True,

+ with_mask=True,

+ poly2mask=False),

+ dict(type='ColorJitter', brightness=32.0 / 255, saturation=0.5),

+ dict(type='Normalize', **img_norm_cfg),

+ dict(

+ type='ImgAug',

+ args=[['Fliplr', 0.5],

+ dict(cls='Affine', rotate=[-10, 10]), ['Resize', [0.5, 3.0]]]),

+ dict(type='EastRandomCrop', target_size=(640, 640)),

+ dict(type='DBNetTargets', shrink_ratio=0.4),

+ dict(type='Pad', size_divisor=32),

+ dict(

+ type='CustomFormatBundle',

+ keys=['gt_shrink', 'gt_shrink_mask', 'gt_thr', 'gt_thr_mask'],

+ visualize=dict(flag=False, boundary_key='gt_shrink')),

+ dict(

+ type='Collect',

+ keys=['img', 'gt_shrink', 'gt_shrink_mask', 'gt_thr', 'gt_thr_mask'])

+]

+

+test_pipeline_1333_736 = [

+ dict(type='LoadImageFromFile', color_type='color_ignore_orientation'),

+ dict(

+ type='MultiScaleFlipAug',

+ img_scale=(1280, 736), # used by Resize

+ flip=False,

+ transforms=[

+ dict(type='Resize', keep_ratio=True),

+ dict(type='Normalize', **img_norm_cfg),

+ dict(type='Pad', size_divisor=32),

+ dict(type='ImageToTensor', keys=['img']),

+ dict(type='Collect', keys=['img']),

+ ])

+]

+

+# for dbnet_r50dcnv2_fpnc

+img_norm_cfg_r50dcnv2 = dict(

+ mean=[122.67891434, 116.66876762, 104.00698793],

+ std=[58.395, 57.12, 57.375],

+ to_rgb=True)

+

+train_pipeline_r50dcnv2 = [

+ dict(type='LoadImageFromFile', color_type='color_ignore_orientation'),

+ dict(

+ type='LoadTextAnnotations',

+ with_bbox=True,

+ with_mask=True,

+ poly2mask=False),

+ dict(type='ColorJitter', brightness=32.0 / 255, saturation=0.5),

+ dict(type='Normalize', **img_norm_cfg_r50dcnv2),

+ dict(

+ type='ImgAug',

+ args=[['Fliplr', 0.5],

+ dict(cls='Affine', rotate=[-10, 10]), ['Resize', [0.5, 3.0]]]),

+ dict(type='EastRandomCrop', target_size=(640, 640)),

+ dict(type='DBNetTargets', shrink_ratio=0.4),

+ dict(type='Pad', size_divisor=32),

+ dict(

+ type='CustomFormatBundle',

+ keys=['gt_shrink', 'gt_shrink_mask', 'gt_thr', 'gt_thr_mask'],

+ visualize=dict(flag=False, boundary_key='gt_shrink')),

+ dict(

+ type='Collect',

+ keys=['img', 'gt_shrink', 'gt_shrink_mask', 'gt_thr', 'gt_thr_mask'])

+]

+

+test_pipeline_4068_1024 = [

+ dict(type='LoadImageFromFile', color_type='color_ignore_orientation'),

+ dict(

+ type='MultiScaleFlipAug',

+ img_scale=(4068, 1024), # used by Resize

+ flip=False,

+ transforms=[

+ dict(type='Resize', keep_ratio=True),

+ dict(type='Normalize', **img_norm_cfg_r50dcnv2),

+ dict(type='Pad', size_divisor=32),

+ dict(type='ImageToTensor', keys=['img']),

+ dict(type='Collect', keys=['img']),

+ ])

+]
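`DBNetTargets` with `shrink_ratio=0.4` builds the shrunk text kernels used as the probability-map target. In the DBNet paper each text polygon is shrunk inward by an offset `D = A * (1 - r**2) / L`, where `A` is the polygon area, `L` its perimeter and `r` the shrink ratio. The sketch below computes only `D` in pure Python; the actual polygon offsetting (usually done with a clipping library) is omitted, and the function names are hypothetical:

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def polygon_area(pts: List[Point]) -> float:
    """Shoelace formula for a simple polygon."""
    total = 0.0
    for i, (x1, y1) in enumerate(pts):
        x2, y2 = pts[(i + 1) % len(pts)]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def polygon_perimeter(pts: List[Point]) -> float:
    """Sum of edge lengths, closing the polygon back to its first point."""
    return sum(math.dist(pts[i], pts[(i + 1) % len(pts)]) for i in range(len(pts)))


def shrink_offset(pts: List[Point], shrink_ratio: float = 0.4) -> float:
    """Inward offset D = A * (1 - r**2) / L from the DBNet paper."""
    return polygon_area(pts) * (1 - shrink_ratio ** 2) / polygon_perimeter(pts)


if __name__ == '__main__':
    box = [(0, 0), (100, 0), (100, 20), (0, 20)]  # a 100 x 20 word box
    print(round(shrink_offset(box), 6))  # 7.0, so the kernel is about 86 x 6
```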

diff --git a/cv/ocr/dbnet/pytorch/configs/_base_/schedules/schedule_adam_1200e.py b/cv/ocr/dbnet/pytorch/configs/_base_/schedules/schedule_adam_1200e.py

new file mode 100755

index 0000000000000000000000000000000000000000..6be9df0078e9261f0223eca9e2d5dafb5edc69ac

--- /dev/null

+++ b/cv/ocr/dbnet/pytorch/configs/_base_/schedules/schedule_adam_1200e.py

@@ -0,0 +1,8 @@

+# optimizer

+optimizer = dict(type='AdamW', lr=1e-3, betas=(0.9, 0.999), weight_decay=0.05)

+optimizer_config = dict(grad_clip=None)

+# learning policy

+lr_config = dict(policy='poly', power=0.9)

+# running settings

+runner = dict(type='EpochBasedRunner', max_epochs=1200)

+checkpoint_config = dict(interval=50)

\ No newline at end of file

diff --git a/cv/ocr/dbnet/pytorch/configs/_base_/schedules/schedule_sgd_1200e.py b/cv/ocr/dbnet/pytorch/configs/_base_/schedules/schedule_sgd_1200e.py

new file mode 100755

index 0000000000000000000000000000000000000000..bc7fbf69b42b11ea9b8ae4d14216d2fcf20e717c

--- /dev/null

+++ b/cv/ocr/dbnet/pytorch/configs/_base_/schedules/schedule_sgd_1200e.py

@@ -0,0 +1,8 @@

+# optimizer

+optimizer = dict(type='SGD', lr=0.007, momentum=0.9, weight_decay=0.0001)

+optimizer_config = dict(grad_clip=None)

+# learning policy

+lr_config = dict(policy='poly', power=0.9, min_lr=1e-7, by_epoch=True)

+# running settings

+runner = dict(type='EpochBasedRunner', max_epochs=1200)

+checkpoint_config = dict(interval=100)
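Both schedules use the `poly` learning-rate policy. A sketch of the per-epoch decay, assuming mmcv's usual form `lr = (base_lr - min_lr) * (1 - epoch / max_epochs) ** power + min_lr`, with `min_lr` taken as 0 when unset (as in the AdamW schedule). The function name `poly_lr` is hypothetical:

```python
def poly_lr(epoch: int, max_epochs: int, base_lr: float,
            power: float = 0.9, min_lr: float = 0.0) -> float:
    """Polynomial decay from base_lr at epoch 0 to min_lr at max_epochs."""
    coeff = (1 - epoch / max_epochs) ** power
    return (base_lr - min_lr) * coeff + min_lr


if __name__ == '__main__':
    # Settings from schedule_sgd_1200e.py.
    for epoch in (0, 300, 600, 900, 1200):
        print(epoch, poly_lr(epoch, 1200, base_lr=0.007, power=0.9, min_lr=1e-7))
```

With `power=0.9` the decay is close to linear, so the learning rate is still a little above half of `base_lr` at the midpoint of training.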

diff --git a/cv/ocr/dbnet/pytorch/configs/textdet/dbnet/README.md b/cv/ocr/dbnet/pytorch/configs/textdet/dbnet/README.md

new file mode 100755

index 0000000000000000000000000000000000000000..d2007c72ec2b45e70d30c6edea128b7e0be2baca

--- /dev/null

+++ b/cv/ocr/dbnet/pytorch/configs/textdet/dbnet/README.md

@@ -0,0 +1,33 @@

+# DBNet

+

+> [Real-time Scene Text Detection with Differentiable Binarization](https://arxiv.org/abs/1911.08947)

+

+

+

+## Abstract

+

+Recently, segmentation-based methods are quite popular in scene text detection, as the segmentation results can more accurately describe scene text of various shapes such as curve text. However, the post-processing of binarization is essential for segmentation-based detection, which converts probability maps produced by a segmentation method into bounding boxes/regions of text. In this paper, we propose a module named Differentiable Binarization (DB), which can perform the binarization process in a segmentation network. Optimized along with a DB module, a segmentation network can adaptively set the thresholds for binarization, which not only simplifies the post-processing but also enhances the performance of text detection. Based on a simple segmentation network, we validate the performance improvements of DB on five benchmark datasets, which consistently achieves state-of-the-art results, in terms of both detection accuracy and speed. In particular, with a light-weight backbone, the performance improvements by DB are significant so that we can look for an ideal tradeoff between detection accuracy and efficiency. Specifically, with a backbone of ResNet-18, our detector achieves an F-measure of 82.8, running at 62 FPS, on the MSRA-TD500 dataset.
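The core of Differentiable Binarization is replacing the hard step `B = 1 if P >= T else 0` with the approximate step function `B = 1 / (1 + exp(-k * (P - T)))`, where `P` is the probability map, `T` the learned threshold map, and the paper sets the amplifying factor `k = 50`. A scalar sketch of that formula (per-pixel; a real implementation applies it to whole tensors):

```python
import math


def hard_binarization(p: float, t: float) -> float:
    """Standard, non-differentiable step function."""
    return 1.0 if p >= t else 0.0


def differentiable_binarization(p: float, t: float, k: float = 50.0) -> float:
    """Approximate step 1 / (1 + exp(-k (P - T))) from the DBNet paper."""
    return 1.0 / (1.0 + math.exp(-k * (p - t)))


if __name__ == '__main__':
    for p in (0.1, 0.29, 0.3, 0.31, 0.9):
        print(p, hard_binarization(p, 0.3), round(differentiable_binarization(p, 0.3), 4))
```

Because the sigmoid is smooth, gradients flow into both `P` and `T`, which is what lets the network learn per-pixel thresholds.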

+

+

+

+

+

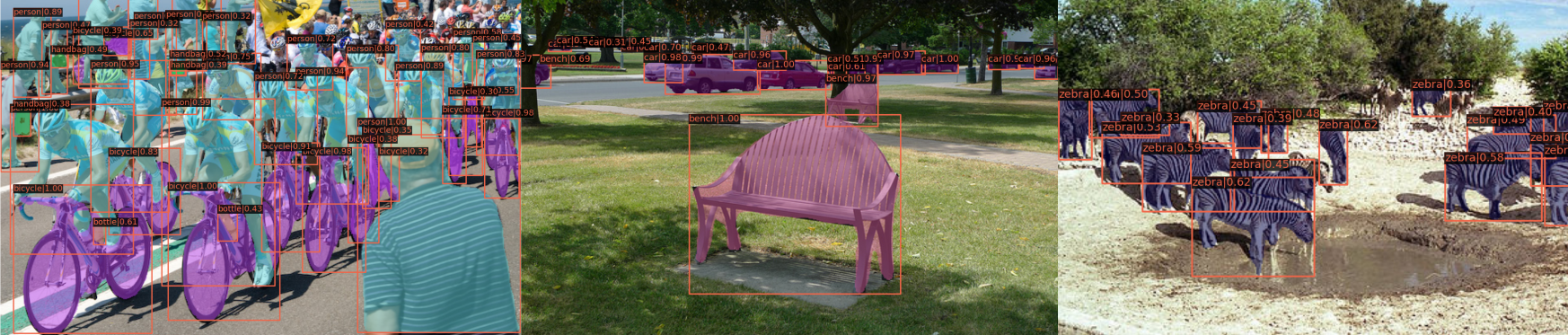

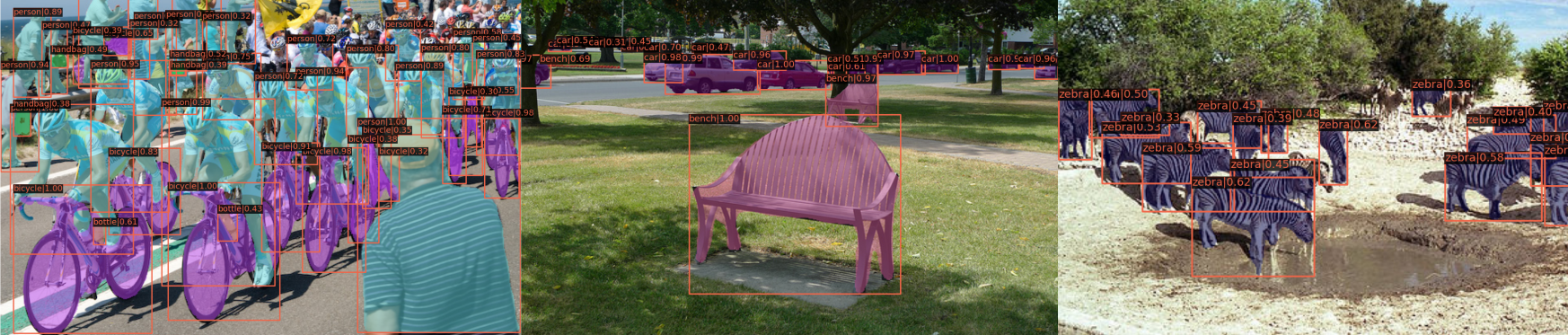

+  +

+  +

+

+

+ +

+