# Ansible Playbook Deployment of a Highly Available k8s Cluster

**Repository Path**: liruilonger/k8s_deploy_ansible

## Basic Information

- **Project Name**: Ansible Playbook Deployment of a Highly Available k8s Cluster

- **Description**: A complete Ansible playbook for deploying a highly available k8s cluster

- **Primary Language**: Unknown

- **License**: Apache-2.0

- **Default Branch**: master

- **Homepage**: None

- **GVP Project**: No

## Statistics

- **Stars**: 14

- **Forks**: 15

- **Created**: 2023-01-27

- **Last Updated**: 2025-06-02

## Categories & Tags

**Categories**: Uncategorized

**Tags**: None

## README

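## K8s (v1.25.1) HA Cluster (3 Masters + 5 Nodes) Deployment with Ansible Playbooks (CRI: Docker via cri-dockerd)

**"All I wanted was to live out what rose up from my true self. Why was that so very hard?" ------ Hermann Hesse, Demian**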

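## Preface

***

+ Sharing an Ansible playbook for deploying a highly available k8s cluster,
+ plus the installation of some related tools.
+ This post covers:
  + Writing, from scratch, an Ansible playbook that deploys a highly available k8s cluster
  + Using the playbook to build the highly available k8s cluster
  + Installing some common monitoring and backup tools for the cluster, including:
    + `cadvisor` monitoring tool deployment
    + `metrics-server` monitoring tool deployment
    + `ingress-nginx` Ingress controller deployment
    + `MetalLB` software LoadBalancer deployment
    + `local-path-storage` StorageClass provisioner deployment, backed by local storage
    + `prometheus` monitoring tool deployment
    + Writing and running a scheduled `ETCD` snapshot backup job
    + `Velero` cluster disaster-recovery backup tool deployment
+ If I have misunderstood anything, corrections are welcome.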


***

Node information of the cluster after deployment. The version is on the high side; I wouldn't recommend running such a new version in production, since some open-source tools can't be installed on it and all kinds of problems come up...

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$kubectl get nodes
NAME                          STATUS   ROLES           AGE   VERSION
vms100.liruilongs.github.io   Ready    control-plane   10d   v1.25.1
vms101.liruilongs.github.io   Ready    control-plane   10d   v1.25.1
vms102.liruilongs.github.io   Ready    control-plane   10d   v1.25.1
vms103.liruilongs.github.io   Ready    <none>          10d   v1.25.1
vms105.liruilongs.github.io   Ready    <none>          10d   v1.25.1
vms106.liruilongs.github.io   Ready    <none>          10d   v1.25.1
vms107.liruilongs.github.io   Ready    <none>          10d   v1.25.1
vms108.liruilongs.github.io   Ready    <none>          10d   v1.25.1
```

Machines involved in the deployment:

+ master1: 192.168.26.100
+ master2: 192.168.26.101
+ master3: 192.168.26.102
+ worker node1: 192.168.26.103
+ worker node2: 192.168.26.105
+ worker node3: 192.168.26.106
+ worker node4: 192.168.26.107
+ worker node5: 192.168.26.108

Ha, 104 is considered unlucky, so I skipped it.....

# 集群部署

## 部署拓扑

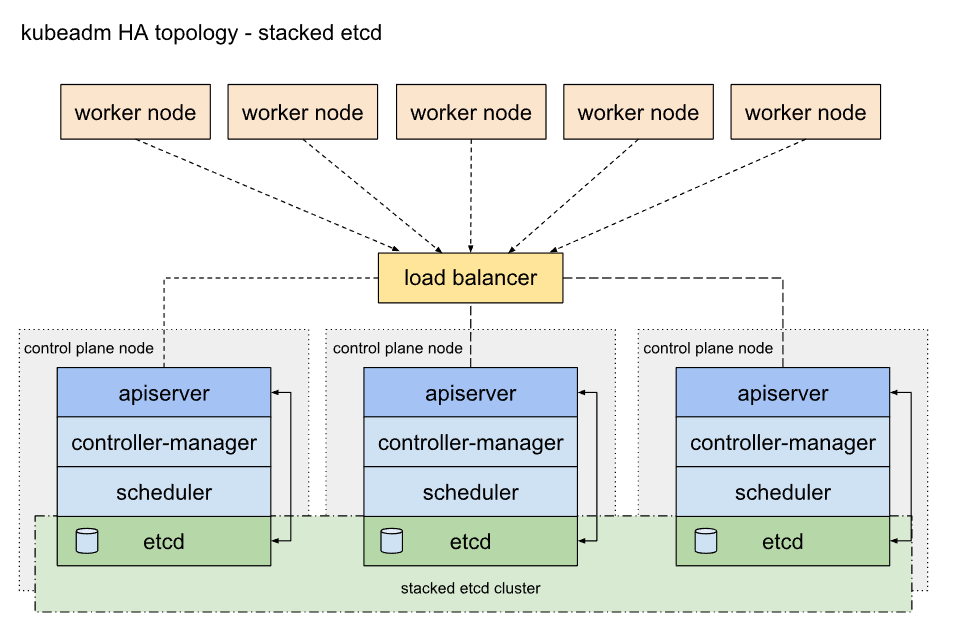

下图为 k8s 官网文档中 HA 拓扑图,这里使用下面的拓扑方式部署

堆叠(Stacked)HA 集群拓扑, 其中 `etcd 分布式数据存储集群堆叠在 kubeadm 管理的控制平面节点上`,作为控制平面的一个组件运行。

部署后的 etcd 分布

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/kubescape]
└─$ETCDCTL_API=3 etcdctl --endpoints https://127.0.0.1:2379 --cert="/etc/kubernetes/pki/etcd/server.crt" --key="/etc/kubernetes/pki/etcd/server.key" --cacert="/etc/kubernetes/pki/etcd/ca.crt" member list -w table
+------------------+---------+-----------------------------+-----------------------------+-----------------------------+
|        ID        | STATUS  |            NAME             |         PEER ADDRS          |        CLIENT ADDRS         |
+------------------+---------+-----------------------------+-----------------------------+-----------------------------+
|  ee392e5273e89e2 | started | vms100.liruilongs.github.io | https://192.168.26.100:2380 | https://192.168.26.100:2379 |
| 11486647d7f3a17b | started | vms102.liruilongs.github.io | https://192.168.26.102:2380 | https://192.168.26.102:2379 |
| e00e3877df8f76f4 | started | vms101.liruilongs.github.io | https://192.168.26.101:2380 | https://192.168.26.101:2379 |
+------------------+---------+-----------------------------+-----------------------------+-----------------------------+
```

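Each control plane node runs an instance of `kube-apiserver`, `kube-scheduler`, and `kube-controller-manager`. The `kube-apiserver` is exposed to worker nodes through a load balancer.

Each control plane node creates a `local etcd member`, and this `etcd member communicates only with the kube-apiserver of that node`. The same applies to the local kube-controller-manager and kube-scheduler instances.

This topology couples the control plane and the etcd members on the same nodes. Compared with an external etcd cluster, it is simpler to set up and simpler to manage for replication.

However, a `stacked cluster` runs the `risk of coupled failure`. If `one node goes down`, both an etcd member and a control plane instance are lost, and redundancy is compromised. You can mitigate this risk by adding more control plane nodes.

You should therefore run `a minimum of three stacked control plane nodes` for an HA cluster.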
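The "at least three" rule follows from Raft quorum arithmetic: etcd keeps accepting writes only while a majority, floor(n/2) + 1, of its members is reachable. A minimal bash sketch of that arithmetic (the function names are illustrative, not part of the repository):

```shell
#!/usr/bin/env bash
# Raft quorum arithmetic for an etcd cluster with n members (illustrative helpers).

# Majority of members etcd needs to keep committing writes: floor(n/2) + 1
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# How many members can fail while a quorum still survives
fault_tolerance() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 1 2 3 4 5; do
  printf '%d member(s): quorum %d, tolerates %d failure(s)\n' \
    "$n" "$(quorum "$n")" "$(fault_tolerance "$n")"
done
```

Three members tolerate one failure; a fourth member raises the quorum to 3 without tolerating any additional failure, which is why odd member counts such as 3 or 5 are the usual choice.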

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/kubescape]
└─$ETCDCTL_API=3 etcdctl --endpoints https://127.0.0.1:2379 --cert="/etc/kubernetes/pki/etcd/server.crt" --key="/etc/kubernetes/pki/etcd/server.key" --cacert="/etc/kubernetes/pki/etcd/ca.crt" endpoint status --cluster -w table
+-----------------------------+------------------+---------+---------+-----------+-----------+------------+
|          ENDPOINT           |        ID        | VERSION | DB SIZE | IS LEADER | RAFT TERM | RAFT INDEX |
+-----------------------------+------------------+---------+---------+-----------+-----------+------------+
| https://192.168.26.100:2379 |  ee392e5273e89e2 |   3.5.4 |   37 MB |     false |       100 |    3152364 |
| https://192.168.26.102:2379 | 11486647d7f3a17b |   3.5.4 |   36 MB |     false |       100 |    3152364 |
| https://192.168.26.101:2379 | e00e3877df8f76f4 |   3.5.4 |   36 MB |      true |       100 |    3152364 |
+-----------------------------+------------------+---------+---------+-----------+-----------+------------+
┌──[root@vms100.liruilongs.github.io]-[~/ansible/kubescape]
└─$
```

When you use `kubeadm init` and `kubeadm join --control-plane`, a local etcd member is created automatically on each control plane node. etcd must be backed up regularly.
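As a starting point for the scheduled etcd backup mentioned in the preface, here is a minimal bash sketch of the file naming and retention part. It assumes snapshots are named `etcd-snapshot-YYYYmmdd-HHMMSS.db` and kept in a single directory; both the naming scheme and `/data/etcd-backup` are hypothetical. The snapshot itself would be taken with `etcdctl snapshot save`, using the same certificate flags as the `etcdctl` commands above.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for a cron-driven etcd backup.
# Typical cron usage (not executed here), assuming BACKUP_DIR=/data/etcd-backup:
#   ETCDCTL_API=3 etcdctl --endpoints https://127.0.0.1:2379 \
#     --cert=/etc/kubernetes/pki/etcd/server.crt \
#     --key=/etc/kubernetes/pki/etcd/server.key \
#     --cacert=/etc/kubernetes/pki/etcd/ca.crt \
#     snapshot save "$BACKUP_DIR/$(snapshot_name "$(date +%Y%m%d-%H%M%S)")"
#   prune_snapshots "$BACKUP_DIR" 7

# Build a snapshot file name whose lexical order matches chronological order
snapshot_name() {
  printf 'etcd-snapshot-%s.db\n' "$1"
}

# Delete all but the newest $2 snapshots in directory $1
prune_snapshots() {
  local dir="$1" keep="$2" i
  local -a files
  # Oldest first, thanks to the sortable timestamp in the file name
  mapfile -t files < <(find "$dir" -maxdepth 1 -type f -name 'etcd-snapshot-*.db' -printf '%f\n' | sort)
  local excess=$(( ${#files[@]} - keep ))
  for (( i = 0; i < excess; i++ )); do
    rm -f -- "$dir/${files[i]}"
  done
}
```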

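The deployment involves too many files to show them all here, so they have been uploaded to a git repository. To get the repository address, follow the WeChat official account **山河已无恙** and reply **k8s-ha-deploy**.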

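## Passwordless SSH Setup

This assumes you start with freshly installed machines. Mine are VMs cloned from a template, so their YUM repositories are already configured. If yours are not, configure one VM first and then push the YUM configuration to the rest in batch with Ansible. YUM configuration itself is not covered in this tutorial; pointing it at the Alibaba Cloud mirrors is enough, and the config files are available in the git repository.

Before installing Ansible, and for convenience, set up passwordless SSH in batch. This is done with `expect`, in a ready-to-run script that reads the hosts to configure from a separate file.

Install expect:

```bash
┌──[root@vms100.liruilongs.github.io]-[~]
└─$yum -y install expect
```

List the hosts to deploy:

```bash
┌──[root@vms100.liruilongs.github.io]-[~]
└─$cat host_list
192.168.26.100
192.168.26.101
192.168.26.102
192.168.26.103
192.168.26.105
192.168.26.106
192.168.26.107
192.168.26.108
```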

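The passwordless-login script. Run it as-is; it reads the hosts from a file named `host_list` in the current directory. The root password `redhat` is hard-coded for my lab machines, so change it to yours.

```bash
#!/bin/bash
#@File    : mianmi.sh
#@Time    : 2022/08/20 17:45:53
#@Author  : Li Ruilong
#@Version : 1.0
#@Desc    : None
#@Contact : 1224965096@qq.com

# Step 1: generate an SSH key pair non-interactively (empty passphrase;
# answers "y" if a key already exists and ssh-keygen asks to overwrite it).
/usr/bin/expect <<-EOF
spawn ssh-keygen
expect "(/root/.ssh/id_rsa)" {send "\r"}
expect {
    "(empty for no passphrase)" {send "\r"}
    "already" {send "y\r"}
}
expect {
    "again" {send "\r"}
    "(empty for no passphrase)" {send "\r"}
}
expect {
    "again" {send "\r"}
    "#" {send "\r"}
}
expect "#"
expect eof
EOF

# Step 2: copy the public key to every host in host_list,
# accepting the host key and answering the root password prompt.
for IP in $( cat host_list )
do
    if [ -n "$IP" ]; then
/usr/bin/expect <<-EOF
spawn ssh-copy-id root@$IP
expect {
    "*yes/no*" { send "yes\r" }
    "*password*" { send "redhat\r" }
}
expect {
    "*password" { send "redhat\r" }
    "#" { send "\r" }
}
expect "#"
expect eof
EOF
    fi
done
```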

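OK, with passwordless SSH configured, install Ansible. You really need some kind of operations tool; with this many machines, doing everything by hand is exhausting.

## Installing Ansible

```bash
┌──[root@vms100.liruilongs.github.io]-[~]
└─$yum -y install ansible
```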

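### Ansible configuration

The inventory configured here deliberately points to a file that doesn't exist. It's better not to put the real inventory in the config file, so a playbook can't accidentally run against the wrong hosts; pass it with `-i` each time instead.

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$vim ansible.cfg
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$cat ansible.cfg
[defaults]
# Inventory file, i.e. the list of hosts to manage
inventory=inventory
# Remote user used to connect to the managed machines
remote_user=root
# Roles directory
roles_path=roles

# Privilege escalation (via sudo)
[privilege_escalation]
become=True
become_method=sudo
become_user=root
become_ask_pass=False
```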

Copy the host list from earlier and test connectivity:

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$cat host_list
192.168.26.100
192.168.26.101
192.168.26.102
192.168.26.103
192.168.26.105
192.168.26.106
192.168.26.107
192.168.26.108
```

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$ansible all -m ping -i host_list
```

Once the test passes, write the inventory file:

```yaml
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$cat host.yaml
ansible:
  children:
    ansible_master:
      hosts:
        192.168.26.100:
    ansible_node:
      hosts:
        192.168.26.[101:103]:
        192.168.26.[105:108]:
k8s:
  children:
    k8s_master:
      hosts:
        192.168.26.[100:102]:
    k8s_node:
      hosts:
        192.168.26.103:
        192.168.26.[105:108]:
```

Check the inventory file; the grouping is self-explanatory, so I won't go into it.

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$ansible-inventory -i host.yaml --graph
@all:
  |--@ansible:
  |  |--@ansible_master:
  |  |  |--192.168.26.100
  |  |--@ansible_node:
  |  |  |--192.168.26.101
  |  |  |--192.168.26.102
  |  |  |--192.168.26.103
  |  |  |--192.168.26.105
  |  |  |--192.168.26.106
  |  |  |--192.168.26.107
  |  |  |--192.168.26.108
  |--@k8s:
  |  |--@k8s_master:
  |  |  |--192.168.26.100
  |  |  |--192.168.26.101
  |  |  |--192.168.26.102
  |  |--@k8s_node:
  |  |  |--192.168.26.103
  |  |  |--192.168.26.105
  |  |  |--192.168.26.106
  |  |  |--192.168.26.107
  |  |  |--192.168.26.108
  |--@ungrouped:
```
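The graph shows how Ansible expands the `[start:end]` ranges used in `host.yaml`. Here is a minimal bash sketch of that expansion, handy for regenerating a matching `host_list`. It is an illustration, not Ansible's implementation, and it ignores zero-padded (`[01:09]`) and stepped (`[1:9:2]`) ranges, which Ansible also supports; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Expand one Ansible-style numeric range, e.g. 192.168.26.[105:108] (illustrative helper).
expand_host_range() {
  local pattern="$1" i
  local re='^(.*)\[([0-9]+):([0-9]+)\](.*)$'
  if [[ $pattern =~ $re ]]; then
    local prefix="${BASH_REMATCH[1]}" start="${BASH_REMATCH[2]}"
    local end="${BASH_REMATCH[3]}" suffix="${BASH_REMATCH[4]}"
    # 10# forces base 10 so values such as 08 are not parsed as octal
    for (( i = 10#$start; i <= 10#$end; i++ )); do
      printf '%s%d%s\n' "$prefix" "$i" "$suffix"
    done
  else
    # No range in the pattern: print the host unchanged
    printf '%s\n' "$pattern"
  fi
}

# Rebuild the host list from the patterns used in host.yaml
for p in '192.168.26.[100:103]' '192.168.26.[105:108]'; do
  expand_host_range "$p"
done
```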

Test the inventory file:

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$ansible all --list-hosts -i host.yaml
  hosts (8):
    192.168.26.100
    192.168.26.101
    192.168.26.102
    192.168.26.103
    192.168.26.105
    192.168.26.106
    192.168.26.107
    192.168.26.108
```

To get `Tab` completion for the group names, create some empty files named after the inventory groups.

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$touch ansible_master ansible_node k8s_master k8s_node
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$ls
ansible.cfg     ansible_node  host_list_no_ansible  k8s_master  ps1_mod.yaml
ansible_master  host_list     host.yaml             k8s_node
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$
```

To make the demos easier to follow, configure `PS1` so the prompt shows the current working directory:

```yaml
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$cat ps1_mod.yaml
---
- name: all modify PS1
  hosts: ansible_node
  tasks:
    - name: PS1 modify
      shell: echo 'PS1="\[\033[1;32m\]┌──[\[\033[1;34m\]\u@\H\[\033[1;32m\]]-[\[\033[0;1m\]\w\[\033[1;32m\]] \n\[\033[1;32m\]└─\[\033[1;34m\]\$\[\033[0m\]"' >> /root/.bashrc
    - name: PS1 display
      shell: cat /root/.bashrc | grep PS1
```
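Note that the `PS1 modify` task appends with `echo >>`, so every re-run adds another `PS1=` line to `.bashrc`. Inside a playbook, Ansible's `lineinfile` module avoids this. The underlying idea, shown as a small bash sketch (`append_once` is an illustrative name), is to append only when the exact line is not already present:

```shell
#!/usr/bin/env bash
# Append a line to a file only if that exact line is not already there (illustrative helper).
append_once() {
  local line="$1" file="$2"
  # -x: whole-line match, -F: fixed string (PS1 values are full of regex metacharacters),
  # -q: quiet; a missing file makes grep fail, so the line is appended and the file created
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```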

Run the playbook and test.

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$ansible-playbook ps1_mod.yaml -i host.yaml -vv
```

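## Environment Preparation Before Installing k8s

### Check Machine UUIDs and NIC MAC Addresses

kubeadm requires the MAC address and `product_uuid` to be unique on every node, which is worth checking on cloned VMs.

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$ansible k8s -m shell -a "ip link | grep ether | awk '{print \$2}'" -i host.yaml
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$ansible k8s -m shell -a "cat /sys/class/dmi/id/product_uuid" -i host.yaml
```

`\$2` is escaped because the command is wrapped in double quotes: unescaped, the local shell would expand `$2` to an empty string before Ansible ever sees it, and awk would print the whole line.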
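Rather than eyeballing these outputs for duplicate values, they can be flagged with a small bash filter. This is a sketch with an illustrative function name; the usage comment assumes Ansible's `-o` one-line output format, in which the command's output is the last field of each line.

```shell
#!/usr/bin/env bash
# Print values that occur more than once on stdin (one value per line, case-insensitive).
# Example (assumes the value is the last field of each `ansible -o` line):
#   ansible k8s -m shell -a "cat /sys/class/dmi/id/product_uuid" -i host.yaml -o \
#     | awk '{print $NF}' | find_duplicates
find_duplicates() {
  # Normalize case (MACs may be printed in either case), skip empty lines, report repeats
  awk 'NF { print tolower($1) }' | sort | uniq -d
}
```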

The remaining steps go into a task file that initializes the k8s deployment environment, `k8s_init_deploy.yaml`; each item is implemented as tasks in that file, which the main playbook includes.

### Preparing the hosts file

This is only needed because there is no DNS; if you have a DNS server, skip it.

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/file]
└─$cat hosts
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.26.100 vms100.liruilongs.github.io vms100
192.168.26.101 vms101.liruilongs.github.io vms101
192.168.26.102 vms102.liruilongs.github.io vms102
192.168.26.103 vms103.liruilongs.github.io vms103
192.168.26.105 vms105.liruilongs.github.io vms105
192.168.26.106 vms106.liruilongs.github.io vms106
192.168.26.107 vms107.liruilongs.github.io vms107
192.168.26.108 vms108.liruilongs.github.io vms108
```
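Since the hostnames follow a fixed pattern (`vms` + last octet + domain), the entries can be generated from `host_list` instead of typed by hand. A bash sketch, assuming that naming convention holds for every host; the function name is illustrative:

```shell
#!/usr/bin/env bash
# Generate /etc/hosts lines from a list of IPs on stdin (illustrative helper).
# Assumes the convention above: 192.168.26.N -> vmsN.<domain> vmsN
hosts_entries() {
  local domain="$1" ip short
  while IFS= read -r ip; do
    ip="${ip%$'\r'}"                          # tolerate CRLF line endings
    [[ -z $ip || $ip == \#* ]] && continue    # skip blank lines and comments
    short="vms${ip##*.}"                      # last octet becomes the short name
    printf '%s %s.%s %s\n' "$ip" "$short" "$domain" "$short"
  done
}

# Usage: hosts_entries liruilongs.github.io < host_list >> file/hosts
```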

Replace the `hosts` file on every machine with this one. Write the task:

```yaml
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$cat k8s_init_deploy.yaml
---
- name: copy "/etc/hosts"
  copy:
    src: ./file/hosts
    dest: /etc/hosts
    force: yes
```

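### Firewall, Swap, and SELinux Settings

Write the tasks and append them to the initialization task file from before.

```yaml
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$cat k8s_init_deploy.yaml
---
# Copy the hosts file (needed because there is no DNS)
- name: copy "/etc/hosts"
  copy:
    src: ./file/hosts
    dest: /etc/hosts
    force: yes

# Instead of turning the firewall off, use the trusted zone;
# firewalld is kept in case it is needed later for vulnerability remediation
- name: firewalld setting trusted
  firewalld:
    zone: trusted
    permanent: yes
    state: enabled

# Disable SELinux
- name: Disable SELinux
  selinux:
    state: disabled

# Turn swap off on the running system
- name: Disable swap
  shell: /usr/sbin/swapoff -a

# Remove the swap entries from /etc/fstab so swap stays off after a reboot
- name: delete swap entries from /etc/fstab
  shell: sed -i '/swap/d' /etc/fstab
```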
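The `sed -i '/swap/d'` task deletes every line that merely contains the word "swap", comments included. A more conservative alternative, sketched in bash below (the function name is illustrative), comments out only active entries whose filesystem type (field 3) is `swap`, so they can be restored later:

```shell
#!/usr/bin/env bash
# Comment out active swap entries in an fstab-format file (illustrative helper).
disable_swap_entries() {
  local fstab="${1:-/etc/fstab}" tmp
  tmp=$(mktemp) || return 1
  # Leave existing comments alone; prefix "#" when the filesystem type is swap
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' "$fstab" > "$tmp" &&
    cat "$tmp" > "$fstab"   # write back in place, keeping the original file's owner and mode
  rm -f "$tmp"
}
```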

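These tasks are pulled in by task inclusion and tagged `init_k8s`, so they can be run on their own later. Include them in the k8s deployment playbook `k8s_deploy.yaml`:

```yaml
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$cat k8s_deploy.yaml
---
- name: k8s deploy
  hosts: k8s
  tasks:
    - name: init k8s
      include_tasks:
        file: k8s_init_deploy.yaml
        # Tags on include_tasks only tag the include itself; apply passes the tag
        # down to the included tasks so that --tags init_k8s actually runs them
        apply:
          tags:
            - init_k8s
      tags:
        - init_k8s
```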

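`k8s_deploy.yaml` is the playbook that holds every k8s deployment step. You can run it now to complete the work so far.

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$ansible-playbook k8s_deploy.yaml -i host.yaml -f 4
```

There are 8 machines; `-f 4` sets the number of forks, i.e. how many hosts are processed in parallel, and `-i host.yaml` specifies the inventory.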

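## Installing the Container Runtime (CRI): Docker

Docker is used as the container runtime here. Docker itself does not implement the CRI, and Kubernetes 1.24 removed `dockershim`, the bridge between Docker and Kubernetes, so Docker can't be used directly; `cri-dockerd` has to be installed as well.

**Note:**

When deploying `cri-dockerd`, you need to override the sandbox (pause) image. The cri-dockerd adapter accepts a command-line argument that specifies the container image to use as the Pod infrastructure container (the "pause image"); the argument is `--pod-infra-container-image`. If it is not set, the image is pulled straight from Google's registry, even if an image repository is specified in `kubeadm init` (at least it didn't work in my deployment). Likewise, if HA is implemented with `keepalived + HAProxy` deployed as static Pods, those static Pods will also pull from Google's registry when `kubelet` starts.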
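The repository ships its own `cri-docker.service`, which is not shown here. As a sketch of the kind of change involved, the bash function below appends `--pod-infra-container-image` to the unit's `ExecStart` line. It assumes the packaged unit starts `/usr/bin/cri-dockerd` on a single `ExecStart=` line; the Aliyun `pause:3.8` image is likewise an assumption for Kubernetes 1.25, so confirm the tag with `kubeadm config images list --kubernetes-version v1.25.1`. The function name is illustrative.

```shell
#!/usr/bin/env bash
# Add --pod-infra-container-image to cri-dockerd's ExecStart line (illustrative helper).
# Assumes a single "ExecStart=/usr/bin/cri-dockerd ..." line in the unit file.
add_pause_image_flag() {
  local unit="$1" image="$2"
  # Idempotent: leave the unit alone if the flag is already configured
  if grep -q -- '--pod-infra-container-image' "$unit"; then
    return 0
  fi
  sed -i "s|^ExecStart=\(/usr/bin/cri-dockerd.*\)|ExecStart=\1 --pod-infra-container-image=${image}|" "$unit"
}

# Usage on a node (assumed image, see above), then make systemd re-read the unit:
#   add_pause_image_flag /usr/lib/systemd/system/cri-docker.service \
#     registry.aliyuncs.com/google_containers/pause:3.8
#   systemctl daemon-reload && systemctl restart cri-docker
```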

For the cgroup driver, follow the official recommendation: if the system is booted by `systemd`, use the `systemd` cgroup driver.

### CRI Installation Prerequisites

Forward IPv4 and let iptables see bridged traffic. Prepare the related files:

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/file/modules-load.d]
└─$cat k8s.conf
overlay
br_netfilter
┌──[root@vms100.liruilongs.github.io]-[~/ansible/file/sysctl.d]
└─$cat k8s.conf
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
```

Prepare the Docker config file. The `"data-root": "/docker/data"` setting is Docker's data directory, so put it somewhere with plenty of space. If you change it, update the directory-creation task in the playbook to match.

```json
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$cat file/daemon.json
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "data-root": "/docker/data",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ],
  "experimental": false,
  "debug": false,
  "max-concurrent-downloads": 10,
  "registry-mirrors": [
    "https://2tefyfv7.mirror.aliyuncs.com",
    "https://docker.mirrors.ustc.edu.cn"
  ]
}
```

The config files to be copied so far:

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$ls file/*
file/daemon.json  file/hosts

file/modules-load.d:
k8s.conf

file/sysctl.d:
k8s.conf
```

Download the `cri-dockerd` rpm package to the Ansible control node; the playbook then copies it to the other nodes.

```powershell
PS C:\Users\山河已无恙\Downloads> curl -o cri-dockerd-0.3.0-3.el7.x86_64.rpm https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.0/cri-dockerd-0.3.0-3.el7.x86_64.rpm
PS C:\Users\山河已无恙\Downloads> scp .\cri-dockerd-0.3.0-3.el7.x86_64.rpm root@192.168.26.100:/root/ansible/install_package
root@192.168.26.100's password:
cri-dockerd-0.3.0-3.el7.x86_64.rpm          100% 9195KB  81.7MB/s   00:00
PS C:\Users\山河已无恙\Downloads>
```

Where it is stored:

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$cd install_package/;ls
cri-dockerd-0.3.0-3.el7.x86_64.rpm
```

The CRI (Docker + cri-dockerd) installation task file:

```yaml
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$cat k8s_cri_deploy.yaml
# ansible 2.9.27
# #@File    : k8s_cri_deploy.yaml
# #@Time    : 2023/01/19 22:32:47
# #@Author  : Li Ruilong
# #@Version : 1.0
# #@Desc    : Install the CRI. Docker is used here, so cri-docker must be installed too
# #@Contact : liruilonger@gmail.com
---
# Forward IPv4 and let iptables see bridged traffic
- name: Forwarding IPv4 and letting iptables see bridged traffic 1
  copy:
    src: ./file/modules-load.d/k8s.conf
    dest: /etc/modules-load.d/k8s.conf

- name: Forwarding IPv4 and letting iptables see bridged traffic 2
  shell: modprobe overlay && modprobe br_netfilter

# sysctl params required by setup; they persist across reboots
- name: sysctl params required by setup, params persist across reboots
  copy:
    src: ./file/sysctl.d/k8s.conf
    dest: /etc/sysctl.d/k8s.conf

# Apply the kernel parameters without rebooting
- name: Apply sysctl params without reboot
  shell: sysctl --system

# Install Docker
- name: install docker-ce docker-ce-cli containerd.io
  yum:
    name:
      - docker-ce
      - docker-ce-cli
      - containerd.io
    state: present

# Create the Docker data directory (must match "data-root" in daemon.json)
- name: create docker data dir
  file:
    path: /docker/data
    state: directory

# Create the Docker config directory
- name: create docker config dir
  file:
    path: /etc/docker
    state: directory

# Install the Docker daemon config
- name: modify docker config
  copy:
    src: ./file/daemon.json
    dest: /etc/docker/daemon.json

# Copy the cri-docker rpm package
- name: copy install cri-docker rpm
  # https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.0/cri-dockerd-0.3.0-3.el7.x86_64.rpm
  copy:
    src: ./install_package/cri-dockerd-0.3.0-3.el7.x86_64.rpm
    dest: /tmp/cri-dockerd-0.3.0-3.el7.x86_64.rpm

# Install cri-docker
- name: install cri-docker
  yum:
    name: /tmp/cri-dockerd-0.3.0-3.el7.x86_64.rpm
    state: present

# Replace the cri-docker service file; otherwise the sandbox image is pulled from Google's registry
- name: modify cri-dockerd service file
  copy:
    src: ./file/cri-docker.service
    dest: /usr/lib/systemd/system/cri-docker.service

# Start Docker and enable it at boot
- name: start docker, setting enable
  service:
    name: docker
    state: restarted
    enabled: yes

# Start cri-docker and enable it at boot; daemon_reload makes systemd
# pick up the service file replaced above before restarting
- name: start cri-docker, setting enable
  systemd:
    name: cri-docker
    state: restarted
    enabled: yes
    daemon_reload: yes

# Enable cri-docker.socket at boot
- name: start cri-docker,socket setting enable
  service:
    name: cri-docker.socket
    enabled: yes

# Verify the configuration
- name: check init cri
  include_tasks:
    file: k8s_cri_deploy_check.yaml
    apply:
      tags:
        - cri_check
  tags: cri_check
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$
```

The post-install checks live in a separate task file, `k8s_cri_deploy_check.yaml`, which is also pulled in by task inclusion and tagged `cri_check`. To see the check output, run the playbook with `-vv`. Only a few basic checks are included here; more can be added.

```yaml
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$cat k8s_cri_deploy_check.yaml
# ansible 2.9.27
# #@File    : k8s_cri_deploy_check.yaml
# #@Time    : 2023/01/19 23:02:47
# #@Author  : Li Ruilong
# #@Version : 1.0
# #@Desc    : Task file that checks the CRI (docker) configuration
# #@Contact : liruilonger@gmail.com
---
- name: check br_netfilter, overlay
  shell: (lsmod | grep br_netfilter ;lsmod | grep overlay)

- name: check ipv4 sysctl param
  shell: sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward

- name: check docker config
  shell: docker info
```
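The `-vv` output still has to be read by eye. If you would rather have the check fail loudly, a small bash filter (an illustrative sketch, not part of the repository) can verify that the three parameters from `file/sysctl.d/k8s.conf` are all `1`, given `sysctl`'s usual `key = value` output on stdin:

```shell
#!/usr/bin/env bash
# Exit non-zero unless all three k8s networking sysctls are 1 (illustrative helper).
# Usage: sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables \
#          net.ipv4.ip_forward | check_k8s_sysctl
check_k8s_sysctl() {
  awk -F' = ' '
    { val[$1] = $2 }   # remember each "key = value" pair
    END {
      n = split("net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward", keys, " ")
      rc = 0
      for (i = 1; i <= n; i++) {
        # Missing keys compare as "" and are reported too
        if (val[keys[i]] != "1") { print "not set: " keys[i]; rc = 1 }
      }
      exit rc
    }'
}
```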

The k8s installation playbook now also includes `k8s_cri_deploy.yaml`, tagged `cri`.

```yaml
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$cat k8s_deploy.yaml
# ansible 2.9.27
# #@File    : k8s_deploy.yaml
# #@Time    : 2023/01/19 23:02:47
# #@Author  : Li Ruilong
# #@Version : 1.0
# #@Desc    : k8s installation playbook
# #@Contact : liruilonger@gmail.com
---
- name: k8s deploy 1
  hosts: k8s
  tasks:
    # Initialize the k8s installation environment
    # (apply passes each tag down to the included tasks)
    - name: init k8s
      include_tasks:
        file: k8s_init_deploy.yaml
        apply:
          tags:
            - init_k8s
      tags:
        - init_k8s
    # Install the CRI: docker, cri-docker
    - name: CRI deploy (docker,cri-docker)
      include_tasks:
        file: k8s_cri_deploy.yaml
        apply:
          tags:
            - cri
      tags: cri
```

Run it to test:

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$ansible-playbook k8s_deploy.yaml -i host.yaml -f 4
```

## Installing kubeadm, kubelet, and kubectl

```yaml
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$cat k8s_kubeadm_kubelet_kubectl_deploy.yaml
# ansible 2.9.27
# #@File    : k8s_kubeadm_kubelet_kubectl_deploy.yaml
# #@Time    : 2023/01/19 23:30:47
# #@Author  : Li Ruilong
# #@Version : 1.0
# #@Desc    : Install kubeadm, kubelet and kubectl
# #@Contact : liruilonger@gmail.com
---
# Install kubeadm, kubelet and kubectl, pinned to 1.25.1
- name: install kubeadm, kubelet and kubectl
  yum:
    name:
      - kubelet-1.25.1-0
      - kubeadm-1.25.1-0
      - kubectl-1.25.1-0
    state: present

# Start kubelet and enable it at boot
# (until kubeadm init/join runs, kubelet keeps restarting; this is expected)
- name: start kubelet,setting enable
  service:
    name: kubelet
    state: started
    enabled: yes
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$
```

The deployment playbook with this step added:

```yaml
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$cat k8s_deploy.yaml
# ansible 2.9.27
# #@File    : k8s_deploy.yaml
# #@Time    : 2023/01/19 23:02:47
# #@Author  : Li Ruilong
# #@Version : 1.0
# #@Desc    : k8s installation playbook
# #@Contact : liruilonger@gmail.com
---
- name: k8s deploy 1
  hosts: k8s
  tasks:
    # Initialize the k8s installation environment
    # (apply passes each tag down to the included tasks)
    - name: init k8s
      include_tasks:
        file: k8s_init_deploy.yaml
        apply:
          tags:
            - init_k8s
      tags:
        - init_k8s
    # Install the CRI: docker, cri-docker
    - name: CRI deploy (docker,cri-docker)
      include_tasks:
        file: k8s_cri_deploy.yaml
        apply:
          tags:
            - cri
      tags: cri
    # Install kubeadm, kubelet, kubectl
    - name: install kubeadm,kubelet,kubectl
      include_tasks:
        file: k8s_kubeadm_kubelet_kubectl_deploy.yaml
        apply:
          tags:
            - install_k8s
      tags: install_k8s
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$
```

## Preparing the keepalived and HAProxy Static Pods for HA

The keepalived and HAProxy components used for HA run as static Pods. The cluster has three master nodes; kubelet talks to kube-apiserver through the VIP provided by keepalived, and HAProxy reverse-proxies the VIP address to the kube-apiserver on each master.

Too many files are involved to show them all here:

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$tree -h file/
file/
├── [  25]  haproxy
│   └── [1.8K]  haproxy.cfg
├── [  55]  keepalived
│   ├── [ 370]  check_apiserver.sh
│   └── [ 521]  keepalived.conf
└── [  49]  manifests
    ├── [ 543]  haproxy.yaml
    └── [ 676]  keepalived.yaml
```

The corresponding task file, `manifests_keepalived_haproxy.yaml`:

```yaml
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$cat manifests_keepalived_haproxy.yaml
# ansible 2.9.27
# # #@File    : manifests_keepalived_haproxy.yaml
# # #@Time    : 2023/01/19 23:02:47
# # #@Author  : Li Ruilong
# # #@Version : 1.0
# # #@Desc    : Prepare the config files and YAML manifests for the HA static Pods
# # #@Contact : liruilonger@gmail.com
---
# Create the static Pod directory
- name: create manifests dir
  file:
    path: /etc/kubernetes/manifests/
    state: directory
    force: true

# Copy the keepalived and haproxy static Pod manifests
- name: copy manifests pod, keepalived
  copy:
    src: ./file/manifests/keepalived.yaml
    dest: /etc/kubernetes/manifests/keepalived.yaml

- name: copy manifests pod, haproxy
  copy:
    src: ./file/manifests/haproxy.yaml
    dest: /etc/kubernetes/manifests/haproxy.yaml

# Create the haproxy config directory
- name: create haproxy dir
  file:
    path: /etc/haproxy
    state: directory
    force: true

- name: copy /etc/haproxy/haproxy.cfg
  copy:
    src: ./file/haproxy/haproxy.cfg
    dest: /etc/haproxy/haproxy.cfg

# Create the keepalived config directory
- name: create keepalived dir
  file:
    path: /etc/keepalived
    state: directory
    force: true

- name: copy /etc/keepalived/keepalived.conf
  copy:
    src: ./file/keepalived/keepalived.conf
    dest: /etc/keepalived/keepalived.conf

# keepalived runs the health-check script, so make sure it is executable
- name: copy /etc/keepalived/check_apiserver.sh
  copy:
    src: ./file/keepalived/check_apiserver.sh
    dest: /etc/keepalived/check_apiserver.sh
    mode: "0755"
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$
```

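For space reasons, the `HAProxy` and `keepalived` config files and the static Pod YAML definitions are not shown here. You can base them on the ones given in the official documentation or, if you'd rather not hunt for them, get them from the git repository.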
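To show the shape of the relevant part of `haproxy.cfg`, here is a bash sketch that prints a TCP frontend/backend pair modeled on the HAProxy example in the kubeadm high-availability guide. The section names `apiserver`/`apiserverbackend`, `balance roundrobin`, and the plain `check` are assumptions, not the repository's file. HAProxy listens on 30033 (the port in `--control-plane-endpoint`) rather than 6443 because it runs on the same masters as kube-apiserver, which already binds 6443.

```shell
#!/usr/bin/env bash
# Print an HAProxy TCP frontend/backend forwarding the VIP port to every kube-apiserver.
# $1 HAProxy bind port, $2 kube-apiserver port, remaining args: master addresses.
# Illustrative helper; section names and options are assumptions.
haproxy_apiserver_stanza() {
  local bind_port="$1" apiserver_port="$2" i=1 host
  shift 2
  printf 'frontend apiserver\n'
  printf '    bind *:%s\n' "$bind_port"
  printf '    mode tcp\n'          # TLS is passed through untouched to kube-apiserver
  printf '    option tcplog\n'
  printf '    default_backend apiserverbackend\n\n'
  printf 'backend apiserverbackend\n'
  printf '    mode tcp\n'
  printf '    balance roundrobin\n'
  for host in "$@"; do
    printf '    server master%d %s:%s check\n' "$i" "$host" "$apiserver_port"
    i=$(( i + 1 ))
  done
}

haproxy_apiserver_stanza 30033 6443 192.168.26.100 192.168.26.101 192.168.26.102
```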

The deployment playbook at this point:

```yaml
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$cat k8s_deploy.yaml
# ansible 2.9.27
# #@File    : k8s_deploy.yaml
# #@Time    : 2023/01/19 23:02:47
# #@Author  : Li Ruilong
# #@Version : 1.0
# #@Desc    : k8s installation playbook
# #@Contact : liruilonger@gmail.com
---
- name: k8s deploy 1
  hosts: k8s
  tasks:
    # Initialize the k8s installation environment
    # (apply passes each tag down to the included tasks)
    - name: init k8s
      include_tasks:
        file: k8s_init_deploy.yaml
        apply:
          tags:
            - init_k8s
      tags:
        - init_k8s
    # Install the CRI: docker, cri-docker
    - name: CRI deploy (docker,cri-docker)
      include_tasks:
        file: k8s_cri_deploy.yaml
        apply:
          tags:
            - cri
      tags: cri
    # Install kubeadm, kubelet, kubectl
    - name: install kubeadm,kubelet,kubectl
      include_tasks:
        file: k8s_kubeadm_kubelet_kubectl_deploy.yaml
        apply:
          tags:
            - install_k8s
      tags: install_k8s

# Masters only: keepalived and HAProxy static Pods for HA
- name: k8s deploy 2
  hosts: k8s_master
  tasks:
    - name: manifests_keepalived_haproxy.yaml
      include_tasks:
        file: manifests_keepalived_haproxy.yaml
        apply:
          tags:
            - install_k8s
      tags: install_k8s
```

## Initializing the Cluster and Adding Control Plane and Worker Nodes

Run `kubeadm init` on one master node first; the remaining master nodes are then added with `kubeadm join`.

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$kubeadm init --image-repository "registry.aliyuncs.com/google_containers" --control-plane-endpoint "192.168.26.99:30033" --upload-certs --kubernetes-version=v1.25.1 --pod-network-cidr=10.244.0.0/16 --cri-socket /var/run/cri-dockerd.sock
W0121 02:49:21.251663  106843 initconfiguration.go:119] Usage of CRI endpoints without URL scheme is deprecated and can cause kubelet errors in the future. Automatically prepending scheme "unix" to the "criSocket" with value "/var/run/cri-dockerd.sock". Please update your configuration!
[init] Using Kubernetes version: v1.25.1
[preflight] Running pre-flight checks
        [WARNING Firewalld]: firewalld is active, please ensure ports [6443 10250] are open or your cluster may not function correctly
[preflight] Pulling images required for setting up a Kubernetes cluster
......................
[addons] Applied essential addon: CoreDNS
W0121 02:49:31.282997  106843 endpoint.go:57] [endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address
[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

  kubeadm join 192.168.26.99:30033 --token hdse4x.7d8vdil1s7tdp8dj \
        --discovery-token-ca-cert-hash sha256:11bf0456c84c2a176eb380a98844eed80a296cdb6cc557c863991c63de594108 \
        --control-plane --certificate-key 61b1a22aa5dbbd93660709aa343452d80ec98bf2efef6915b3a36ae97a8eefed

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.26.99:30033 --token hdse4x.7d8vdil1s7tdp8dj \
        --discovery-token-ca-cert-hash sha256:11bf0456c84c2a176eb380a98844eed80a296cdb6cc557c863991c63de594108
```

Set up the kubectl client on the first control plane node:

```bash
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

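### Preparing the CNI Plugin

The compatibility between Kubernetes and CNI (Calico) versions, as well as the installation steps, can be checked at the following addresses: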

https://projectcalico.docs.tigera.io/archive/v3.24/getting-started/kubernetes/self-managed-onprem/onpremises

https://projectcalico.docs.tigera.io/archive/v3.24/getting-started/kubernetes/requirements

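**Note:**

The bpf filesystem apparently requires a newer kernel. My kernel was originally 3.10, which doesn't support it, so the bpf mounts have to be removed (the `bpffs` volume is a `hostPath` of `type: Directory`, so the Pod cannot start if `/sys/fs/bpf` does not exist on the host).

In my local tests, `calico` v3.20 through v3.25 all need this change. It's best to import the required images in advance; depending on your network, pulling them can be slow and painful.

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/calico]
└─$cat calico.v3.23.yaml | grep -A 3 -e bpffs$
            - name: bpffs
              mountPath: /sys/fs/bpf
            - name: cni-log-dir
              mountPath: /var/log/calico/cni
--
        - name: bpffs
          hostPath:
            path: /sys/fs/bpf
            type: Directory
```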

The parts to delete:

```yaml
...
            - name: bpffs
              mountPath: /sys/fs/bpf
...
        - name: bpffs
          hostPath:
            path: /sys/fs/bpf
            type: Directory
```
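Deleting these entries by hand works, but it has to be redone for every new `calico.yaml`. Below is an awk-based bash sketch (illustrative, not from the repository) that drops any YAML list item whose first line is exactly `- name: <name>`, together with its more deeply indented continuation lines. It assumes that layout, as in the grep output above, and understands nothing about YAML beyond indentation.

```shell
#!/usr/bin/env bash
# Print a YAML file without the list items that start with "- name: <name>" (illustrative helper).
remove_yaml_list_item() {
  local name="$1" file="$2"
  awk -v target="- name: ${name}" '
    {
      stripped = $0
      sub(/^ +/, "", stripped)                 # drop leading spaces
      indent = length($0) - length(stripped)   # column of the first non-space character
      if (skipping) {
        # Continuation lines of the dropped item are indented deeper than its dash
        if (indent > item_indent && stripped != "") next
        skipping = 0
      }
      if (stripped == target) { skipping = 1; item_indent = indent; next }
      print
    }' "$file"
}

# Usage: remove_yaml_list_item bpffs calico.yaml > calico-nobpf.yaml
```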

Download the YAML file and deploy it:

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/calico]
└─$wget --no-check-certificate https://docs.projectcalico.org/manifests/calico.yaml
--2023-01-21 02:56:01--  https://docs.projectcalico.org/manifests/calico.yaml
Resolving docs.projectcalico.org (docs.projectcalico.org)... 18.139.194.139, 52.74.166.77, 2406:da18:880:3801::c8, ...
Connecting to docs.projectcalico.org (docs.projectcalico.org)|18.139.194.139|:443... connected.
WARNING: cannot verify docs.projectcalico.org's certificate, issued by '/C=US/O=Let's Encrypt/CN=R3':
  Issued certificate has expired.
HTTP request sent, awaiting response... 200 OK
Length: 238089 (233K) [text/yaml]
Saving to: 'calico.yaml'

100%[==================================================>] 238,089      592KB/s   in 0.4s

2023-01-21 02:56:02 (592 KB/s) - 'calico.yaml' saved [238089/238089]

┌──[root@vms100.liruilongs.github.io]-[~/ansible/calico]
└─$vim calico.yaml
```

Change `CALICO_IPV4POOL_CIDR` to the range passed to `--pod-network-cidr` earlier:

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/calico]
└─$cat calico.yaml | grep -C 3 IPV4POOL_CIDR
            # The default IPv4 pool to create on startup if none exists. Pod IPs will be
            # chosen from this range. Changing this value after installation will have
            # no effect. This should fall within `--cluster-cidr`.
            - name: CALICO_IPV4POOL_CIDR
              value: "10.244.0.0/16"
            # Disable file logging so `kubectl logs` works.
            - name: CALICO_DISABLE_FILE_LOGGING
┌──[root@vms100.liruilongs.github.io]-[~/ansible/calico]
└─$
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$kubectl apply -f ./calico/calico.yaml
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$source <(kubectl completion bash)
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$echo 'source <(kubectl completion bash)' >> ~/.bashrc
```
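Besides matching `--pod-network-cidr`, the Pod CIDR must not overlap the node network (192.168.26.0/24 here) or the Service CIDR, which is kubeadm's default `10.96.0.0/12` since `--service-cidr` was not passed. A pure-bash sketch of that overlap check (helper names are illustrative):

```shell
#!/usr/bin/env bash
# IPv4 CIDR overlap check in pure bash (illustrative helpers).

# Dotted quad -> 32-bit integer
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# CIDR -> "first last" addresses as integers, e.g. 10.244.0.0/16
cidr_range() {
  local ip="${1%/*}" len="${1#*/}" base mask first
  base=$(ip_to_int "$ip")
  mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  first=$(( base & mask ))
  echo "$first $(( first | (~mask & 0xFFFFFFFF) ))"
}

# Succeeds (exit 0) if the two CIDR blocks share at least one address
cidrs_overlap() {
  local a1 a2 b1 b2
  read -r a1 a2 <<< "$(cidr_range "$1")"
  read -r b1 b2 <<< "$(cidr_range "$2")"
  (( a1 <= b2 && b1 <= a2 ))
}

for other in 192.168.26.0/24 10.96.0.0/12; do
  if cidrs_overlap 10.244.0.0/16 "$other"; then
    echo "10.244.0.0/16 overlaps $other"
  else
    echo "10.244.0.0/16 does not overlap $other"
  fi
done
```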

### Joining the Other Control Plane Nodes

There are only 3 control plane nodes here, so they are joined one by one from the command line; with more of them, you could use Ansible.

```bash
┌──[root@vms101.liruilongs.github.io]-[~]
└─$kubeadm join 192.168.26.99:30033 --token hdse4x.7d8vdil1s7tdp8dj --discovery-token-ca-cert-hash sha256:11bf0456c84c2a176eb380a98844eed80a296cdb6cc557c863991c63de594108 --control-plane --certificate-key 61b1a22aa5dbbd93660709aa343452d80ec98bf2efef6915b3a36ae97a8eefed --cri-socket /var/run/cri-dockerd.sock
......
```

```bash
┌──[root@vms102.liruilongs.github.io]-[~]
└─$kubeadm join 192.168.26.99:30033 --token hdse4x.7d8vdil1s7tdp8dj --discovery-token-ca-cert-hash sha256:11bf0456c84c2a176eb380a98844eed80a296cdb6cc557c863991c63de594108 --control-plane --certificate-key 61b1a22aa5dbbd93660709aa343452d80ec98bf2efef6915b3a36ae97a8eefed --cri-socket /var/run/cri-dockerd.sock
```

Copy the kubeconfig file and set up command completion. Run this on every master node.

```bash
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
# Enable completion in the current shell and persist it for new shells
source <(kubectl completion bash)
echo 'source <(kubectl completion bash)' >> ~/.bashrc
┌──[root@vms101.liruilongs.github.io]-[~]
└─$
```

### Joining the Worker Nodes

Worker nodes are joined in parallel, in batch, with Ansible.

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$ansible k8s_node -m shell -a "kubeadm join 192.168.26.99:30033 --token hdse4x.7d8vdil1s7tdp8dj --discovery-token-ca-cert-hash sha256:11bf0456c84c2a176eb380a98844eed80a296cdb6cc557c863991c63de594108 --cri-socket /var/run/cri-dockerd.sock " -i host.yaml
```
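Two things are worth automating around these joins. kubeadm warned during `init` that CRI socket paths without a URL scheme are deprecated, so `unix:///var/run/cri-dockerd.sock` is the forward-compatible form. Also, bootstrap tokens expire (after 24 hours by default) and the uploaded certificates are deleted after two hours; after that, `kubeadm token create --print-join-command` and `kubeadm init phase upload-certs --upload-certs` produce fresh values. A bash sketch (helper names are illustrative) that checks the token and hash formats and assembles the join command:

```shell
#!/usr/bin/env bash
# Validate kubeadm join credentials and build the join command (illustrative helpers).

# Bootstrap token format: 6 + 16 lowercase alphanumerics separated by a dot
valid_token() {
  local re='^[a-z0-9]{6}\.[a-z0-9]{16}$'
  [[ $1 =~ $re ]]
}

# CA public key pin: "sha256:" followed by 64 hex digits
valid_ca_hash() {
  local re='^sha256:[0-9a-f]{64}$'
  [[ $1 =~ $re ]]
}

# $1 endpoint, $2 token, $3 CA hash, $4 optional certificate key (control-plane join)
join_command() {
  local endpoint="$1" token="$2" hash="$3" cert_key="${4:-}"
  valid_token "$token" || { echo "invalid token: $token" >&2; return 1; }
  valid_ca_hash "$hash" || { echo "invalid CA cert hash: $hash" >&2; return 1; }
  local cmd="kubeadm join $endpoint --token $token --discovery-token-ca-cert-hash $hash"
  if [[ -n $cert_key ]]; then
    cmd+=" --control-plane --certificate-key $cert_key"
  fi
  echo "$cmd --cri-socket unix:///var/run/cri-dockerd.sock"
}

# Usage (worker nodes, in parallel via Ansible):
#   ansible k8s_node -m shell -i host.yaml -a "$(join_command 192.168.26.99:30033 <token> <hash>)"
```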

## Checking Node Status

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/calico]
└─$kubectl get nodes
NAME                          STATUS   ROLES           AGE   VERSION
vms100.liruilongs.github.io   Ready    control-plane   17h   v1.25.1
vms101.liruilongs.github.io   Ready    control-plane   15h   v1.25.1
vms102.liruilongs.github.io   Ready    control-plane   15h   v1.25.1
vms103.liruilongs.github.io   Ready    <none>          15h   v1.25.1
vms105.liruilongs.github.io   Ready    <none>          15h   v1.25.1
vms106.liruilongs.github.io   Ready    <none>          15h   v1.25.1
vms107.liruilongs.github.io   Ready    <none>          15h   v1.25.1
vms108.liruilongs.github.io   Ready    <none>          15h   v1.25.1
┌──[root@vms100.liruilongs.github.io]-[~/ansible/calico]
└─$
```

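At this point the cluster installation is complete. For production, though, you'll want some common plugins, cluster backup and monitoring tools, an Ingress controller, and so on. This part is optional.

# Optional Post-Deployment Steps

## Installing Some Common Plugins and Tools

Some common tools and kubectl plugins; here I copied them straight from an old cluster. Installation is fairly simple: without Internet access, download the binaries and set them up as kubectl plugins; with access, install krew first and use it to install the other plugins.

```bash
┌──[root@vms100.liruilongs.github.io]-[/usr/local/bin]
└─$tree -h
.
├── [ 45M]  helm
├── [ 15M]  helmify
├── [9.2M]  kubectl-ketall
├── [ 11M]  kubectl-krew
├── [ 44M]  kubectl-kubepug
├── [8.8M]  kubectl-rakkess
├── [ 18M]  kubectl-score
├── [ 57M]  kubectl-spy
├── [ 31M]  kubectl-tree
├── [ 57M]  kubectl-virt
└── [ 14M]  kustomize

0 directories, 11 files
```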

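Tool overview:

+ helm: the Helm chart package manager
+ kustomize: a tool for composing and managing YAML files, used to generate resource YAML
+ helmify: converts YAML files into a Helm chart
+ kubectl-ketall: kubectl plugin that lists all resources in the cluster
+ kubectl-krew: kubectl plugin manager, used to install and upgrade kubectl plugins automatically
+ kubectl-kubepug: shows the API versions of cluster resources, used for pre-upgrade checks
+ kubectl-rakkess: RBAC access viewer, used to review authorizations across the whole cluster
+ kubectl-score: gives optimization suggestions for API resource definitions
+ kubectl-spy: watches API resources live, showing exactly which YAML fields change
+ kubectl-tree: shows the hierarchy of API resources as a tree
+ kubectl-virt: manages the virtual machines attached to the cluster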

## Installing Some Monitoring Tools

### Installing cadvisor

PodSecurityPolicy (PSP) is no longer supported as of 1.25, so remove the PSP resources from the manifest beforehand, or follow the official instructions to modify it with `kustomize` before installing.

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/cadvisor]
└─$kubectl apply -f cadvisor.yaml
namespace/cadvisor created
serviceaccount/cadvisor created
clusterrole.rbac.authorization.k8s.io/cadvisor created
clusterrolebinding.rbac.authorization.k8s.io/cadvisor created
daemonset.apps/cadvisor created
```

### Installing the metrics-server Monitoring Tool

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/cadvisor]
└─$kubectl apply -f metrics-server.yaml
serviceaccount/metrics-server created
clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created
clusterrole.rbac.authorization.k8s.io/system:metrics-server created
rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created
clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created
clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created
service/metrics-server created
deployment.apps/metrics-server created
apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created
```

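## Installing ingress-nginx as the Ingress controller

For Ingress resources to work, the cluster must have a running Ingress controller.

Unlike the other types of controllers, which run as part of the kube-controller-manager binary, Ingress controllers are not started automatically with the cluster. You can choose whichever Ingress controller implementation best fits your cluster.

As a project, Kubernetes currently supports and maintains the AWS, GCE, and NGINX Ingress controllers. Here ingress-nginx is installed with Helm: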

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/ingress_nginx]
└─$helm upgrade --install ingress-nginx ingress-nginx --repo https://kubernetes.github.io/ingress-nginx --namespace ingress-nginx --create-namespace
```

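Without Internet access, you can import the YAML file and the corresponding images instead. After installation there is a Service of type LoadBalancer, so we also need to install a software load balancer.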

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/ingress_nginx]
└─$kubectl apply -f deploy.yaml
namespace/ingress-nginx created
serviceaccount/ingress-nginx created
serviceaccount/ingress-nginx-admission created
role.rbac.authorization.k8s.io/ingress-nginx created
role.rbac.authorization.k8s.io/ingress-nginx-admission created
clusterrole.rbac.authorization.k8s.io/ingress-nginx created
clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created
rolebinding.rbac.authorization.k8s.io/ingress-nginx created
rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created
configmap/ingress-nginx-controller created
service/ingress-nginx-controller created
service/ingress-nginx-controller-admission created
deployment.apps/ingress-nginx-controller created
job.batch/ingress-nginx-admission-create created
job.batch/ingress-nginx-admission-patch created
ingressclass.networking.k8s.io/nginx created
validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created
```
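Once the controller is running, an Ingress only needs to reference the `nginx` IngressClass created above. `render_ingress` is a hypothetical helper, a sketch that prints a minimal `networking.k8s.io/v1` Ingress routing every path of one host to one Service port; the name, host, Service and port in the usage line are placeholders.

```bash
# Hypothetical helper: print a minimal Ingress that sends all paths of one
# host to a single Service port through the "nginx" IngressClass.
render_ingress() {
  local name="$1" host="$2" svc="$3" port="$4"
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ${name}
spec:
  ingressClassName: nginx
  rules:
  - host: ${host}
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: ${svc}
            port:
              number: ${port}
EOF
}

# Usage (placeholder names):
# render_ingress demo demo.example.com demo-svc 80 | kubectl apply -f -
```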

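## Installing MetalLB for LoadBalancer Services

MetalLB provides support for Services of type LoadBalancer in a Kubernetes-native way. It can be installed from the upstream v0.13.7 manifest or, offline, from a local copy of that file: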

```bash
kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.13.7/config/manifests/metallb-native.yaml
```

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/metallb]
└─$kubectl apply -f metallb-native.yaml
namespace/metallb-system created
customresourcedefinition.apiextensions.k8s.io/addresspools.metallb.io created
customresourcedefinition.apiextensions.k8s.io/bfdprofiles.metallb.io created
customresourcedefinition.apiextensions.k8s.io/bgpadvertisements.metallb.io created
customresourcedefinition.apiextensions.k8s.io/bgppeers.metallb.io created
customresourcedefinition.apiextensions.k8s.io/communities.metallb.io created
customresourcedefinition.apiextensions.k8s.io/ipaddresspools.metallb.io created
customresourcedefinition.apiextensions.k8s.io/l2advertisements.metallb.io created
serviceaccount/controller created
serviceaccount/speaker created
role.rbac.authorization.k8s.io/controller created
role.rbac.authorization.k8s.io/pod-lister created
clusterrole.rbac.authorization.k8s.io/metallb-system:controller created
clusterrole.rbac.authorization.k8s.io/metallb-system:speaker created
rolebinding.rbac.authorization.k8s.io/controller created
rolebinding.rbac.authorization.k8s.io/pod-lister created
clusterrolebinding.rbac.authorization.k8s.io/metallb-system:controller created
clusterrolebinding.rbac.authorization.k8s.io/metallb-system:speaker created
secret/webhook-server-cert created
service/webhook-service created
deployment.apps/controller created
daemonset.apps/speaker created
validatingwebhookconfiguration.admissionregistration.k8s.io/metallb-webhook-configuration created
```

Create an IP address pool:

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/metallb]
└─$kubectl apply -f pool.yaml
ipaddresspool.metallb.io/first-pool created
┌──[root@vms100.liruilongs.github.io]-[~/ansible/metallb]
└─$cat pool.yaml
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: first-pool
  namespace: metallb-system
spec:
  addresses:
  - 192.168.26.220-192.168.26.249
┌──[root@vms100.liruilongs.github.io]-[~/ansible/metallb]
└─$
```

Then create an L2Advertisement so that MetalLB announces the pool addresses on the local network (Layer 2 mode):

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/metallb]
└─$cat l2a.yaml
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: example
  namespace: metallb-system
┌──[root@vms100.liruilongs.github.io]-[~/ansible/metallb]
└─$kubectl apply -f l2a.yaml
l2advertisement.metallb.io/example unchanged
┌──[root@vms100.liruilongs.github.io]-[~/ansible/metallb]
└─$
```
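An L2Advertisement without an `ipAddressPools` selector, like `example` above, announces every pool. If more pools are added later and an advertisement should cover only one of them, both objects can be generated together. `render_metallb_l2` is a hypothetical helper, a sketch assuming the MetalLB v0.13 `metallb.io/v1beta1` API used above.

```bash
# Hypothetical helper: print an IPAddressPool plus an L2Advertisement that
# is restricted to that pool via spec.ipAddressPools.
render_metallb_l2() {
  local pool="$1" range="$2"
  cat <<EOF
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: ${pool}
  namespace: metallb-system
spec:
  addresses:
  - ${range}
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: ${pool}-l2
  namespace: metallb-system
spec:
  ipAddressPools:
  - ${pool}
EOF
}

# Usage:
# render_metallb_l2 first-pool 192.168.26.220-192.168.26.249 | kubectl apply -f -
```

Once the pool is advertised, `kubectl get svc -n ingress-nginx` should show an EXTERNAL-IP from the pool for the `ingress-nginx-controller` Service.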

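## Installing a local-storage StorageClass

We also need a StorageClass provisioner. It may come in handy later, and it can just as well be installed later: https://github.com/rancher/local-path-provisioner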

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/helm]
└─$kubectl apply -f local-path-storage.yaml
namespace/local-path-storage unchanged
serviceaccount/local-path-provisioner-service-account unchanged
clusterrole.rbac.authorization.k8s.io/local-path-provisioner-role unchanged
clusterrolebinding.rbac.authorization.k8s.io/local-path-provisioner-bind unchanged
deployment.apps/local-path-provisioner unchanged
storageclass.storage.k8s.io/local-path unchanged
configmap/local-path-config unchanged
```

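## Installing Prometheus

Metrics monitoring requires Prometheus. The chart used here is kube-prometheus-stack-30.0.1:

https://github.com/prometheus-community/helm-charts/releases/download/kube-prometheus-stack-30.0.1/kube-prometheus-stack-30.0.1.tgz

Some images cannot be pulled, and they are hard to track down and replace inside the chart directly. So here the chart package is downloaded and rendered into concrete YAML with `helm template`, and the images are then replaced in that file.

This leaves one more problem: some CRDs are not installed beforehand, especially with multiple masters. Retrying a few times may work; someone has raised this on GitHub, but there seems to be no good solution. (Note that `helm template` leaves out the chart's `crds/` directory unless `--include-crds` is passed, which is likely part of the cause.) My workaround is to install with helm first and then uninstall: uninstalling does not remove the CRDs, after which the generated YAML file can be applied.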

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/helm]
└─$cd kube-prometheus-stack
┌──[root@vms100.liruilongs.github.io]-[~/ansible/helm/kube-prometheus-stack]
└─$ls
Chart.lock  charts  Chart.yaml  CONTRIBUTING.md  crds  README.md  templates  values.yaml
┌──[root@vms100.liruilongs.github.io]-[~/ansible/helm/kube-prometheus-stack]
└─$helm install kube-prometheus-stack .
┌──[root@vms100.liruilongs.github.io]-[~/ansible/helm/kube-prometheus-stack]
└─$helm template . > ../kube-prometheus-stack.yaml
```
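For the image replacement step, it helps to first list every image the rendered file references, then rewrite the unreachable registry prefix to a mirror. `list_images` and `rewrite_registry` are hypothetical helpers, a sketch assuming images appear as `image:` fields (images passed in other forms, for example as container arguments, are not caught) and GNU `sed -i`. Dots in the registry name act as regex wildcards, which is harmless for ordinary hostnames.

```bash
# Hypothetical helper: print the unique image references in a rendered manifest.
list_images() {
  # strip the leading "image:" / "- image:" and any quotes around the value
  sed -n 's/^[[:space:]-]*image:[[:space:]]*//p' "$1" | tr -d "\"'" | sort -u
}

# Hypothetical helper: replace registry prefix $2 with $3 in place,
# keeping an optional opening quote after "image:".
rewrite_registry() {
  local file="$1" from="$2" to="$3"
  sed -i "s|\(image:[[:space:]]*\"\{0,1\}\)${from}/|\1${to}/|" "$file"
}

# Usage (registry.example.local is a placeholder mirror):
# list_images ../kube-prometheus-stack.yaml
# rewrite_registry ../kube-prometheus-stack.yaml quay.io registry.example.local
```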

Apply it:

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/helm]
└─$kubectl apply -f kube-prometheus-stack.yaml
```

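After applying, change the Service type to NodePort (here, Grafana's). Of course, if an Ingress controller or a LoadBalancer is available, other types can be configured instead.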

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/helm]
└─$kubectl get svc
NAME                                      TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                      AGE
alertmanager-operated                     ClusterIP   None             <none>        9093/TCP,9094/TCP,9094/UDP   27m
kubernetes                                ClusterIP   10.96.0.1        <none>        443/TCP                      40h
prometheus-operated                       ClusterIP   None             <none>        9090/TCP                     27m
release-name-grafana                      NodePort    10.111.188.209   <none>        80:30203/TCP                 30m
release-name-kube-promethe-alertmanager   ClusterIP   10.97.17.175     <none>        9093/TCP                     30m
release-name-kube-promethe-operator       ClusterIP   10.107.60.174    <none>        443/TCP                      30m
release-name-kube-promethe-prometheus     ClusterIP   10.108.163.61    <none>        9090/TCP                     30m
release-name-kube-state-metrics           ClusterIP   10.102.37.208    <none>        8080/TCP                     30m
release-name-prometheus-node-exporter     ClusterIP   10.100.5.155     <none>        9100/TCP                     30m
```

Retrieve the Grafana login username and password:

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/helm]
└─$kubectl get secrets release-name-grafana -o jsonpath='{.data.admin-user}' | base64 -d
admin┌──[root@vms100.liruilongs.github.io]-[~/ansible/helm]
└─$kubectl get secrets release-name-grafana -o jsonpath='{.data.admin-password}' | base64 -d
prom-operator┌──[root@vms100.liruilongs.github.io]-[~/ansible/helm]
└─$
```

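## Scheduled ETCD snapshot backups

ETCD in production must be backed up; otherwise, when something goes wrong, all that is left is to run away......

### Writing the service unit

Here a script is run as a systemd service. The file locations are shown in the comments.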

```bash
┌──[root@vms81.liruilongs.github.io]-[~/back]
└─$systemctl cat etcd-backup
# /usr/lib/systemd/system/etcd-backup.service
[Unit]
Description=ETCD backup
After=network-online.target

[Service]
Type=oneshot
Environment=ETCDCTL_API=3
ExecStart=/usr/bin/bash /usr/lib/systemd/system/etcd_back.sh

[Install]
WantedBy=multi-user.target
```

### Writing the timer

The scheduled backup is implemented with a systemd timer.

```bash
┌──[root@vms81.liruilongs.github.io]-[~/back]
└─$systemctl cat etcd-backup.timer
# /usr/lib/systemd/system/etcd-backup.timer
[Unit]
Description=Back up ETCD once a day

[Timer]
OnBootSec=3s
OnCalendar=*-*-* 00:00:00
Unit=etcd-backup.service

[Install]
WantedBy=multi-user.target
```

### Writing the backup script

The actual ETCD snapshot backup script:

```bash
┌──[root@vms81.liruilongs.github.io]-[~/back]
└─$cat /usr/lib/systemd/system/etcd_back.sh
#!/bin/bash
#@File    :   etcd_back.sh
#@Time    :   2023/01/27 23:00:27
#@Author  :   Li Ruilong
#@Version :   1.0
#@Desc    :   ETCD backup
#@Contact :   1224965096@qq.com

# create the backup directory on first run
if [ ! -d /root/back/ ];then
    mkdir -p /root/back/
fi

# timestamp used in the snapshot file name, e.g. 202301280017
STR_DATE=$(date +%Y%m%d%H%M)

# take a snapshot from the local etcd member using the kubeadm-generated certs
ETCDCTL_API=3 etcdctl \
    --endpoints="https://127.0.0.1:2379" \
    --cert="/etc/kubernetes/pki/etcd/server.crt" \
    --key="/etc/kubernetes/pki/etcd/server.key" \
    --cacert="/etc/kubernetes/pki/etcd/ca.crt" \
    snapshot save /root/back/snap-${STR_DATE}.db

# print the snapshot's metadata as a table to verify it
ETCDCTL_API=3 etcdctl --write-out=table snapshot status /root/back/snap-${STR_DATE}.db
```

Enable and start the units (`--now` on the oneshot service also runs one backup immediately):

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$systemctl enable etcd-backup.service --now
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$systemctl enable etcd-backup.timer --now
```

Check the backups:

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$ls -lh /root/back/
总用量 311M
-rw-r--r-- 1 root root 27M 1月  28 00:17 snap-202301280017.db
-rw-r--r-- 1 root root 27M 1月  29 00:00 snap-202301290000.db
-rw-r--r-- 1 root root 27M 2月   1 21:43 snap-202302012143.db
-rw-r--r-- 1 root root 27M 2月   2 00:00 snap-202302020000.db
-rw-r--r-- 1 root root 29M 2月   3 00:00 snap-202302030000.db
-rw-r--r-- 1 root root 29M 2月   4 00:00 snap-202302040000.db
-rw-r--r-- 1 root root 36M 2月   5 00:00 snap-202302050000.db
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$
```
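Left alone, the snapshots in `/root/back/` keep piling up. A retention step could be appended to `etcd_back.sh`; `prune_snapshots` is a hypothetical bash sketch that keeps only the newest N files. It relies on the `snap-YYYYmmddHHMM.db` naming used by the script, so sorting by name is the same as sorting by time.

```bash
# Hypothetical helper: keep the newest $2 snapshots in directory $1, delete the rest.
prune_snapshots() {
  local dir="$1" keep="$2"
  local files=() count i
  # glob results come back sorted by name; nullglob yields an empty list on no match
  shopt -s nullglob
  files=("$dir"/snap-*.db)
  shopt -u nullglob
  count=${#files[@]}
  # the oldest entries are at the front of the sorted array
  for (( i = 0; i < count - keep; i++ )); do
    rm -f -- "${files[i]}"
    echo "removed ${files[i]}"
  done
}

# Usage, e.g. at the end of etcd_back.sh to keep one week of daily snapshots:
# prune_snapshots /root/back 7
```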

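## Installing Velero for cluster backups

Velero is used to back up and restore cluster resources safely, perform disaster recovery, and migrate Kubernetes cluster resources and persistent volumes.

### Client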

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero]
└─$wget --no-check-certificate https://github.com/vmware-tanzu/velero/releases/download/v1.10.1-rc.1/velero-v1.10.1-rc.1-linux-amd64.tar.gz
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero]
└─$ls
velero-v1.10.1-rc.1-linux-amd64.tar.gz
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero]
└─$tar -zxvf velero-v1.10.1-rc.1-linux-amd64.tar.gz
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero]
└─$cd velero-v1.10.1-rc.1-linux-amd64/
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$cp velero /usr/local/bin/
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$velero version
Client:
        Version: v1.10.1-rc.1
        Git commit: e4d2a83917cd848e5f4e6ebc445fd3d262de10fa
```

### Server installation

Create the credentials file used to log in to the object storage (MinIO):

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$vim credentials-velero
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$cat credentials-velero
[default]
aws_access_key_id = minio
aws_secret_access_key = minio123
```
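Because this file holds a secret, it can also be generated with owner-only permissions instead of being typed in `vim`. `write_velero_credentials` is a hypothetical sketch; the key ID and secret must match `MINIO_ROOT_USER` / `MINIO_ROOT_PASSWORD` in the MinIO manifest that follows.

```bash
# Hypothetical helper: write the AWS-style credentials file that Velero reads.
write_velero_credentials() {
  local file="$1" key_id="$2" secret="$3"
  # create the file with a restrictive umask so it is never world-readable
  ( umask 077; cat > "$file" ) <<EOF
[default]
aws_access_key_id = ${key_id}
aws_secret_access_key = ${secret}
EOF
  # also tighten permissions if the file already existed
  chmod 600 "$file"
}

# Usage:
# write_velero_credentials ./credentials-velero minio minio123
```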

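Next, start the server and a local storage service. The manifest is in the Velero directory, i.e. it ships with the client package downloaded above and is visible once the archive is extracted. Adjust this YAML file as needed: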

```yaml
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$cat examples/minio/00-minio-deployment.yaml
# Copyright 2017 the Velero contributors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

---
apiVersion: v1
kind: Namespace
metadata:
  name: velero

---
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: velero
  name: minio
  labels:
    component: minio
spec:
  strategy:
    type: Recreate
  selector:
    matchLabels:
      component: minio
  template:
    metadata:
      labels:
        component: minio
    spec:
      volumes:
      - name: storage
        emptyDir: {}
      - name: config
        emptyDir: {}
      containers:
      - name: minio
        image: quay.io/minio/minio:latest
        imagePullPolicy: IfNotPresent
        args:
        - server
        - /storage
        - --console-address=:9090
        - --config-dir=/config
        env:
        - name: MINIO_ROOT_USER
          value: "minio"
        - name: MINIO_ROOT_PASSWORD
          value: "minio123"
        ports:
        - containerPort: 9000
        - containerPort: 9090
        volumeMounts:
        - name: storage
          mountPath: "/storage"
        - name: config
          mountPath: "/config"

---
apiVersion: v1
kind: Service
metadata:
  namespace: velero
  name: minio
  labels:
    component: minio
spec:
  # ClusterIP is recommended for production environments.
  # Change to NodePort if needed per documentation,
  # but only if you run Minio in a test/trial environment, for example with Minikube.
  type: NodePort
  ports:
    - port: 9000
      name: api
      targetPort: 9000
      protocol: TCP
    - port: 9099
      name: console
      targetPort: 9090
      protocol: TCP
  selector:
    component: minio

---
apiVersion: batch/v1
kind: Job
metadata:
  namespace: velero
  name: minio-setup
  labels:
    component: minio
spec:
  template:
    metadata:
      name: minio-setup
    spec:
      restartPolicy: OnFailure
      volumes:
      - name: config
        emptyDir: {}
      containers:
      - name: mc
        image: minio/mc:latest
        imagePullPolicy: IfNotPresent
        command:
        - /bin/sh
        - -c
        - "mc --config-dir=/config config host add velero http://minio:9000 minio minio123 && mc --config-dir=/config mb -p velero/velero"
        volumeMounts:
        - name: config
          mountPath: "/config"
```

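Using emptyDir is not recommended: its contents only live as long as the Pod, so if the cluster goes down, the Pod and its containers may well not come back with the stored data. Mounting a dedicated storage directory is the better choice.

Apply the YAML file above directly and check that MinIO is reachable. This installs `minio` and uses a Job to create a bucket for storing the backup data.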
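One way to follow the advice against emptyDir is to back MinIO's `storage` volume with a PVC on the `local-path` StorageClass installed earlier. `render_minio_pvc` is a hypothetical sketch; the name `minio-storage` and size `10Gi` are placeholder defaults. Keep in mind that local-path volumes live on a single node's disk: data survives Pod restarts but not the loss of that node. The upstream local-path StorageClass also binds on first consumer, so the PVC stays `Pending` until the MinIO Pod is scheduled.

```bash
# Hypothetical helper: print a PVC for MinIO on the local-path StorageClass.
render_minio_pvc() {
  local name="${1:-minio-storage}" size="${2:-10Gi}"
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
  namespace: velero
spec:
  accessModes:
  - ReadWriteOnce
  storageClassName: local-path
  resources:
    requests:
      storage: ${size}
EOF
}

# Usage:
# render_minio_pvc | kubectl apply -f -
# then, in 00-minio-deployment.yaml, replace the "storage" volume's
#   emptyDir: {}
# with
#   persistentVolumeClaim:
#     claimName: minio-storage
```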

Install Velero with the command-line tool:

```bash
velero install \
    --provider aws \
    --plugins velero/velero-plugin-for-aws:v1.2.1 \
    --bucket velero \
    --secret-file ./credentials-velero \
    --use-volume-snapshots=false \
    --backup-location-config region=minio,s3ForcePathStyle="true",s3Url=http://minio.velero.svc:9000
```

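+ bucket: the name of the bucket you created in MinIO

+ backup-location-config: `s3Url` must point to your MinIO server. Here the in-cluster Service address `minio.velero.svc:9000` is used; if MinIO runs outside the cluster, replace it with that server's IP address and port.

+ plugins: the AWS plugin version should be compatible with the Velero server version; the compatibility table in the velero-plugin-for-aws README is worth checking.

You can also export the YAML first and apply it afterwards, to review it or to replace the images with ones from a private registry: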

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$velero install \
    --provider aws \
    --plugins velero/velero-plugin-for-aws:v1.2.1 \
    --bucket velero \
    --secret-file ./credentials-velero \
    --use-volume-snapshots=false \
    --backup-location-config region=minio,s3ForcePathStyle="true",s3Url=http://minio.velero.svc:9000 \
    --dry-run -o yaml > velero_deploy.yaml
```

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$kubectl apply -f velero_deploy.yaml
CustomResourceDefinition/backuprepositories.velero.io: attempting to create resource
CustomResourceDefinition/backuprepositories.velero.io: attempting to create resource client
..........
BackupStorageLocation/default: attempting to create resource
BackupStorageLocation/default: attempting to create resource client
BackupStorageLocation/default: created
Deployment/velero: attempting to create resource
Deployment/velero: attempting to create resource client
Deployment/velero: created
Velero is installed! ⛵ Use 'kubectl logs deployment/velero -n velero' to view the status.
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$
```

Once the deployment completes, the Job is created automatically.

### Backup

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$velero backup create velero-demo
Backup request "velero-demo" submitted successfully.
Run `velero backup describe velero-demo` or `velero backup logs velero-demo` for more details.
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$velero get backup velero-demo
NAME          STATUS       ERRORS   WARNINGS   CREATED                         EXPIRES   STORAGE LOCATION   SELECTOR
velero-demo   InProgress   0        0          2023-01-28 22:18:45 +0800 CST   29d       default            <none>
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$velero get backup velero-demo
NAME          STATUS       ERRORS   WARNINGS   CREATED                         EXPIRES   STORAGE LOCATION   SELECTOR
velero-demo   Completed    0        0          2023-01-28 22:18:45 +0800 CST   29d       default            <none>
```

For reasons of length, Velero's backup and restore details, scheduled backups, and so on are not covered further here.
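Just as a pointer for the scheduled-backup case, a daily backup can be declared as a Velero `Schedule` object. `render_velero_schedule` is a hypothetical sketch assuming the `velero.io/v1` Schedule API, where `spec.schedule` takes a cron expression and `spec.template` holds the backup spec (here only `ttl`, defaulting to 30 days). The CLI route, `velero schedule create <name> --schedule="0 1 * * *"`, creates the same kind of object.

```bash
# Hypothetical helper: print a Velero Schedule that runs a backup on a cron schedule.
render_velero_schedule() {
  local name="$1" cron="$2" ttl="${3:-720h0m0s}"
  cat <<EOF
apiVersion: velero.io/v1
kind: Schedule
metadata:
  name: ${name}
  namespace: velero
spec:
  schedule: "${cron}"
  template:
    ttl: ${ttl}
EOF
}

# Usage:
# render_velero_schedule daily "0 1 * * *" | kubectl apply -f -
```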

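That's all I have to share on deploying a highly available k8s cluster. Keep going, everyone!

The Ansible playbooks, static Pod YAML files, YUM configuration files, and the YAML files for the common tools installed afterwards have all been organized and uploaded to Gitee for you to download; they are not shown here for reasons of length.

To get the Git repository address, follow the WeChat official account **山河已无恙** and reply **k8s-ha-deploy**.

## References

Copyright of the referenced content belongs to the original authors; please let me know of any infringement.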

***

https://kubernetes.io/zh-cn/docs/setup/production-environment/tools/

https://github.com/Mirantis/cri-dockerd

https://kubernetes.io/zh-cn/docs/setup/production-environment/container-runtimes/

https://github.com/kubernetes/kubeadm/blob/main/docs/ha-considerations.md#options-for-software-load-balancing

---

© 2018-2023 liruilonger@gmail.com, All rights reserved. Attribution-NonCommercial-ShareAlike, free to repost (Creative Commons 3.0 license)