+

+

+

+

+

+

+

+

+ 品牌:

+

+

+

+ -

+

- 所有品牌 +

- A +

- B +

- C +

- D +

- E +

- F +

- G +

- H +

- I +

- J +

- K +

- L +

- M +

- N +

- O +

- P +

- Q +

- R +

- S +

- T +

- U +

- W +

- X +

- Y +

- Z +

+

+ -

+

-

+

+

+

+ 罗技(G)

+

+

+

+ 罗技(G)

+

+

+ -

+

+

+

+ 罗技(Logitech)

+

+

+

+ 罗技(Logitech)

+

+

+ -

+

+

+

+ 雷蛇(Razer)

+

+

+

+ 雷蛇(Razer)

+

+

+ -

+

+

+

+ 联想(lenovo)

+

+

+

+ 联想(lenovo)

+

+

+ -

+

+

+

+ 雷柏(Rapoo)

+

+

+

+ 雷柏(Rapoo)

+

+

+ -

+

+

+

+ 达尔优

+

+

+

+ 达尔优

+

+

+ -

+

+

+

+ 双飞燕(A4TECH)

+

+

+

+ 双飞燕(A4TECH)

+

+

+ -

+

+

+

+ 惠普(HP)

+

+

+

+ 惠普(HP)

+

+

+ -

+

+

+

+ 微软(Microsoft)

+

+

+

+ 微软(Microsoft)

+

+

+ -

+

+

+

+ 赛睿(SteelSeries)

+

+

+

+ 赛睿(SteelSeries)

+

+

+ -

+

+

+

+ 戴尔(DELL)

+

+

+

+ 戴尔(DELL)

+

+

+ -

+

+

+

+ 英菲克(INPHIC)

+

+

+

+ 英菲克(INPHIC)

+

+

+ -

+

+

+

+ 小米(MI)

+

+

+

+ 小米(MI)

+

+

+ -

+

+

+

+ ROG

+

+

+

+ ROG

+

+

+ -

+

+

+

+ ThinkPad

+

+

+

+ ThinkPad

+

+

+ -

+

+

+

+ 飞利浦(PHILIPS )

+

+

+

+ 飞利浦(PHILIPS )

+

+

+ -

+

+

+

+ 外星人(Alienware)

+

+

+

+ 外星人(Alienware)

+

+

+ -

+

+

+

+ 前行者(EWEADN)

+

+

+

+ 前行者(EWEADN)

+

+

+ -

+

+

+

+ 雷神(ThundeRobot)

+

+

+

+ 雷神(ThundeRobot)

+

+

+ -

+

+

+

+ 华为(HUAWEI)

+

+

+

+ 华为(HUAWEI)

+

+

+ -

+

+

+

+ 狼蛛(AULA)

+

+

+

+ 狼蛛(AULA)

+

+

+ -

+

+

+

+ 华硕(ASUS)

+

+

+

+ 华硕(ASUS)

+

+

+ - + + + 牧马人 + + +

-

+

+

+

+ 多彩(Delux)

+

+

+

+ 多彩(Delux)

+

+

+ -

+

+

+

+ 微星(MSI)

+

+

+

+ 微星(MSI)

+

+

+ -

+

+

+

+ 科大讯飞(iFLYTEK)

+

+

+

+ 科大讯飞(iFLYTEK)

+

+

+ -

+

+

+

+ 冰豹

+

+

+

+ 冰豹

+

+

+ -

+

+

+

+ 机械师(MACHENIKE)

+

+

+

+ 机械师(MACHENIKE)

+

+

+ -

+

+

+

+ ZOWIE GEAR

+

+

+

+ ZOWIE GEAR

+

+

+ - + + + 玩家国度(ROG) + + +

-

+

+

+

+ 灵蛇

+

+

+

+ 灵蛇

+

+

+ - + + + 罗技(logitech) + + +

-

+

+

+

+ 黑爵(AJAZZ)

+

+

+

+ 黑爵(AJAZZ)

+

+

+ - + + + 蝰蛇(VIPERADE) + + +

-

+

+

+

+ 洛斐(LOFREE)

+

+

+

+ 洛斐(LOFREE)

+

+

+ -

+

+

+

+ 美商海盗船(USCORSAIR)

+

+

+

+ 美商海盗船(USCORSAIR)

+

+

+ -

+

+

+

+ SANWA SUPPLY

+

+

+

+ SANWA SUPPLY

+

+

+ -

+

+

+

+ 宏碁(acer)

+

+

+

+ 宏碁(acer)

+

+

+ -

+

+

+

+ 沃野

+

+

+

+ 沃野

+

+

+ -

+

+

+

+ CHERRY

+

+

+

+ CHERRY

+

+

+ -

+

+

+

+ 新贵(Newmen)

+

+

+

+ 新贵(Newmen)

+

+

+ -

+

+

+

+ Xtrfy

+

+

+

+ Xtrfy

+

+

+ -

+

+

+

+ 金士顿(Kingston)

+

+

+

+ 金士顿(Kingston)

+

+

+ -

+

+

+

+ 富勒(Fuhlen)

+

+

+

+ 富勒(Fuhlen)

+

+

+ -

+

+

+

+ 宜丽客(ELECOM)

+

+

+

+ 宜丽客(ELECOM)

+

+

+ -

+

+

+

+ AKKO

+

+

+

+ AKKO

+

+

+ - + + + 因科特 + + +

- + + + 吉选(GESOBYTE) + + +

- + + + 迪摩 + + +

- + + + 银雕(YINDIAO) + + +

-

+

+

+

+ 富德

+

+

+

+ 富德

+

+

+ -

+

+

+

+ 麦塔奇(Microtouch)

+

+

+

+ 麦塔奇(Microtouch)

+

+

+ - + + + 3Dconnexion + + +

-

+

+

+

+ 爱国者(aigo)

+

+

+

+ 爱国者(aigo)

+

+

+ - + + + 狼界 + + +

-

+

+

+

+ 本手

+

+

+

+ 本手

+

+

+ -

+

+

+

+ 新盟(TECHNOLOGY)

+

+

+

+ 新盟(TECHNOLOGY)

+

+

+ -

+

+

+

+ 现代(HYUNDAI)

+

+

+

+ 现代(HYUNDAI)

+

+

+ - + + + 普泽罗 + + +

-

+

+

+

+ ThinkPlus

+

+

+

+ ThinkPlus

+

+

+ - + + + 库肯(KUKEN) + + +

-

+

+

+

+ MAD CATZ

+

+

+

+ MAD CATZ

+

+

+ - + + + 森松尼(sunsonny) + + +

-

+

+

+

+ BUBM

+

+

+

+ BUBM

+

+

+ -

+

+

+

+ Apple

+

+

+

+ Apple

+

+

+ - + + + 肯辛通(Kensington) + + +

- + + + 余音 + + +

-

+

+

+

+ 火银狐

+

+

+

+ 火银狐

+

+

+ -

+

+

+

+ 烽火狼

+

+

+

+ 烽火狼

+

+

+ -

+

+

+

+ 现代翼蛇

+

+

+

+ 现代翼蛇

+

+

+ -

+

+

+

+ 咪鼠科技(Mimouse)

+

+

+

+ 咪鼠科技(Mimouse)

+

+

+ -

+

+

+

+ 蝰蛇(KUISHE)

+

+

+

+ 蝰蛇(KUISHE)

+

+

+ - + + + 钛度 + + +

-

+

+

+

+ 磁动力(ZIDLI)

+

+

+

+ 磁动力(ZIDLI)

+

+

+ -

+

+

+

+ 游狼

+

+

+

+ 游狼

+

+

+ -

+

+

+

+ 得力(deli)

+

+

+

+ 得力(deli)

+

+

+ -

+

+

+

+ 冰狐

+

+

+

+ 冰狐

+

+

+ -

+

+

+

+ ENDGAME GEAR

+

+

+

+ ENDGAME GEAR

+

+

+ - + + + 掌握者 + + +

-

+

+

+

+ 虎符电竞(ESPORTS TIGER)

+

+

+

+ 虎符电竞(ESPORTS TIGER)

+

+

+ -

+

+

+

+ JRC

+

+

+

+ JRC

+

+

+ - + + + 斗鱼(DOUYU.COM) + + +

-

+

+

+

+ 追光豹

+

+

+

+ 追光豹

+

+

+ -

+

+

+

+ 贝戋马户(james donkey)

+

+

+

+ 贝戋马户(james donkey)

+

+

+ -

+

+

+

+ AOC

+

+

+

+ AOC

+

+

+ -

+

+

+

+ 摩天手(Mofii)

+

+

+

+ 摩天手(Mofii)

+

+

+ -

+

+

+

+ 方正科技(ifound)

+

+

+

+ 方正科技(ifound)

+

+

+ -

+

+

+

+ 京东京造

+

+

+

+ 京东京造

+

+

+ -

+

+

+

+ perixx

+

+

+

+ perixx

+

+

+ - + + + Dear Mean + + +

-

+

+

+

+ 酷冷至尊(CoolerMaster)

+

+

+

+ 酷冷至尊(CoolerMaster)

+

+

+ -

+

+

+

+ RK

+

+

+

+ RK

+

+

+ -

+

+

+

+ 独牙(DUYA)

+

+

+

+ 独牙(DUYA)

+

+

+ - + + + ET + + +

- + + + 腹灵(FL·ESPORTS) + + +

-

+

+

+

+ 科普斯

+

+

+

+ 科普斯

+

+

+ - + + + 猛豹 + + +

- + + + 惊雷 + + +

- + + + QQfamily + + +

- + + + 力胜 + + +

-

+

+

+

+ MIIIW

+

+

+

+ MIIIW

+

+

+ -

+

+

+

+ 技嘉(GIGABYTE)

+

+

+

+ 技嘉(GIGABYTE)

+

+

+ -

+

+

+

+ 炫光

+

+

+

+ 炫光

+

+

+ - + + + 米徒(ME TOO) + + +

- + + + 虹龙 + + +

-

+

+

+

+ HYPERX

+

+

+

+ HYPERX

+

+

+ - + + + 印象笔记 + + +

-

+

+

+

+ B.O.W

+

+

+

+ B.O.W

+

+

+ - + + + 快鼠(FAST MOUSE) + + +

- + + + GYSFONE + + +

-

+

+

+

+ 摩豹(Motospeed)

+

+

+

+ 摩豹(Motospeed)

+

+

+ -

+

+

+

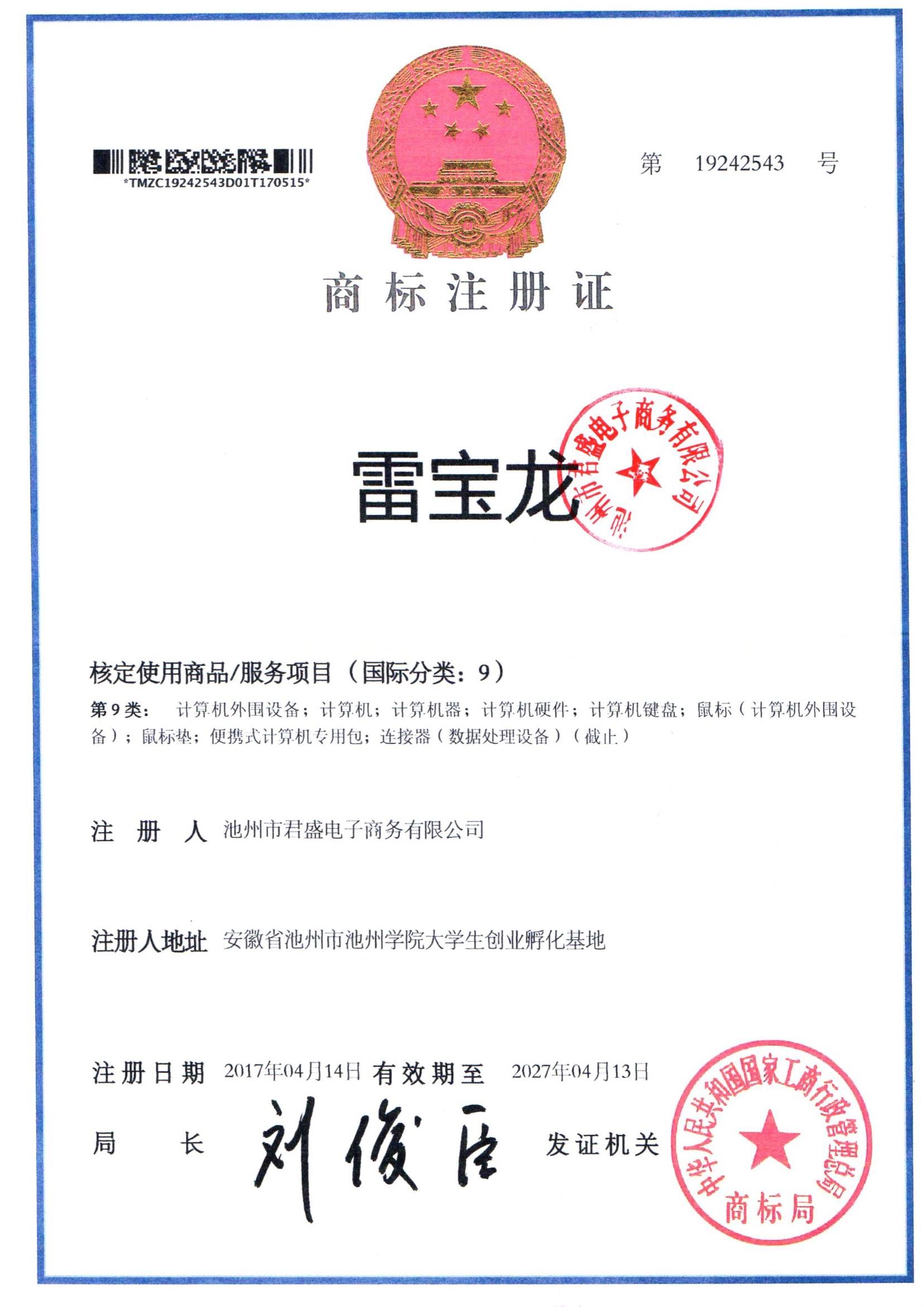

+ 雷宝龙

+

+

+

+ 雷宝龙

+

+

+ - + + + bejoy + + +

-

+

+

+

+ glorious

+

+

+

+ glorious

+

+

+ - + + + 纳卓者(NAZZHE) + + +

-

+

+

+

+ 赛德斯

+

+

+

+ 赛德斯

+

+

+ -

+

+

+

+ imiia

+

+

+

+ imiia

+

+

+ -

+

+

+

+ 联想(ThinkCentre)

+

+

+

+ 联想(ThinkCentre)

+

+

+ -

+

+

+

+ 雷迪凯(LDK.al)

+

+

+

+ 雷迪凯(LDK.al)

+

+

+ - + + + 因科特(ironcat) + + +

- + + + MageGee + + +

- + + + ONIKUMA + + +

-

+

+

+

+ 摩肯

+

+

+

+ 摩肯

+

+

+ - + + + SOZA + + +

-

+

+

+

+ 喵王

+

+

+

+ 喵王

+

+

+ -

+

+

+

+ 太空步(Monwalk)

+

+

+

+ 太空步(Monwalk)

+

+

+ -

+

+

+

+ 耐也(Niye)

+

+

+

+ 耐也(Niye)

+

+

+ - + + + 朗森 + + +

-

+

+

+

+ ikbc

+

+

+

+ ikbc

+

+

+ - + + + 剑圣一族 + + +

- + + + Leoisilence + + +

-

+

+

+

+ 京烁

+

+

+

+ 京烁

+

+

+ -

+

+

+

+ uFound

+

+

+

+ uFound

+

+

+ -

+

+

+

+ 虹PAD(hongpad)

+

+

+

+ 虹PAD(hongpad)

+

+

+ -

+

+

+

+ 德意龙(BORN IN WAR)

+

+

+

+ 德意龙(BORN IN WAR)

+

+

+ -

+

+

+

+ 极速射貂(GSEdo)

+

+

+

+ 极速射貂(GSEdo)

+

+

+ -

+

+

+

+ 黑峡谷(Hyeku)

+

+

+

+ 黑峡谷(Hyeku)

+

+

+ - + + + 慕正 + + +

- + + + 轻帆 + + +

- + + + 雷狼 + + +

- + + + 狼派(teamwolf) + + +

- + + + 东来也 + + +

-

+

+

+

+ 汉王(Hanvon)

+

+

+

+ 汉王(Hanvon)

+

+

+ -

+

+

+

+ 梦田(MVTV)

+

+

+

+ 梦田(MVTV)

+

+

+ -

+

+

+

+ 卡佐(AZZOR)

+

+

+

+ 卡佐(AZZOR)

+

+

+ - + + + 游猎者 + + +

-

+

+

+

+ 龙涛

+

+

+

+ 龙涛

+

+

+ -

+

+

+

+ 倍晶(BestJing)

+

+

+

+ 倍晶(BestJing)

+

+

+ - + + + Matcheasy + + +

-

+

+

+

+ 狼途(Langtu)

+

+

+

+ 狼途(Langtu)

+

+

+ -

+

+

+

+ 虎猫

+

+

+

+ 虎猫

+

+

+ - + + + 品怡 + + +

-

+

+

+

+ e元素

+

+

+

+ e元素

+

+

+ - + + + 相思豆 + + +

-

+

+

+

+ 魔炼者(MAGIC-REFINER)

+

+

+

+ 魔炼者(MAGIC-REFINER)

+

+

+ -

+

+

+

+ 长城(Great Wall)

+

+

+

+ 长城(Great Wall)

+

+

+ -

+

+

+

+ 初忆(CHUYI)

+

+

+

+ 初忆(CHUYI)

+

+

+ - + + + HIXANNY + + +

- + + + 敏涛 + + +

-

+

+

+

+ 酷倍达(QPAD )

+

+

+

+ 酷倍达(QPAD )

+

+

+ - + + + 全球翻译官 + + +

- + + + 菲沐(FAMOR) + + +

- + + + 邦兴晖 + + +

- + + + FirstBlood + + +

-

+

+

+

+ 讯拓(Sunt)

+

+

+

+ 讯拓(Sunt)

+

+

+ -

+

+

+

+ 荣耀(HONOR)

+

+

+

+ 荣耀(HONOR)

+

+

+ -

+

+

+

+ DCOMA

+

+

+

+ DCOMA

+

+

+ - + + + CCA + + +

-

+

+

+

+ 赤灵

+

+

+

+ 赤灵

+

+

+ -

+

+

+

+ 银雕(yindiao)

+

+

+

+ 银雕(yindiao)

+

+

+ -

+

+

+

+ oloey

+

+

+

+ oloey

+

+

+ - + + + 精晟小太阳 + + +

- + + + POLIGU + + +

-

+

+

+

+ 酷今(COOLTODAY)

+

+

+

+ 酷今(COOLTODAY)

+

+

+ - + + + 罗品贡技 + + +

-

+

+

+

+ HUKE

+

+

+

+ HUKE

+

+

+ -

+

+

+

+ 酷米索(KUMISUO)

+

+

+

+ 酷米索(KUMISUO)

+

+

+ -

+

+

+

+ 信蓝铭

+

+

+

+ 信蓝铭

+

+

+ - + + + 汉钦 + + +

-

+

+

+

+ 乐帆(LeFanT)

+

+

+

+ 乐帆(LeFanT)

+

+

+ - + + + 全语通 + + +

- + + + 微森 + + +

-

+

+

+

+ 西伯利亚(XIBERIA)

+

+

+

+ 西伯利亚(XIBERIA)

+

+

+ -

+

+

+

+ 不梵

+

+

+

+ 不梵

+

+

+ - + + + 黑吉蛇 + + +

-

+

+

+

+ QMXD

+

+

+

+ QMXD

+

+

+ - + + + 漫呗熊 + + +

-

+

+

+

+ Kolinsky

+

+

+

+ Kolinsky

+

+

+ -

+

+

+

+ 拼搏者

+

+

+

+ 拼搏者

+

+

+ -

+

+

+

+ 迪士尼(Disney)

+

+

+

+ 迪士尼(Disney)

+

+

+ -

+

+

+

+ 飞遁(LESAILES)

+

+

+

+ 飞遁(LESAILES)

+

+

+ - + + + 力拓 + + +

-

+

+

+

+ 泰格斯(TARGUS)

+

+

+

+ 泰格斯(TARGUS)

+

+

+ - + + + 佳晟丰(JIA SHENG FENG) + + +

-

+

+

+

+ 镭拓(Rantopad)

+

+

+

+ 镭拓(Rantopad)

+

+

+ -

+

+

+

+ 带带

+

+

+

+ 带带

+

+

+ - + + + 昇欧 + + +

- + + + 艾丝恺 + + +

- + + + Darmoshark + + +

- + + + 徕声(AUGLAMOUR) + + +

- + + + 黑沙(HEISHA) + + +

- + + + 爱沃希(I&W&X) + + +

- + + + DURGOD + + +

-

+

+

+

+ 虎克

+

+

+

+ 虎克

+

+

+ -

+

+

+

+ 攀升(IPASON)

+

+

+

+ 攀升(IPASON)

+

+

+ -

+

+

+

+ 果瀚

+

+

+

+ 果瀚

+

+

+ - + + + 鹧鸪鸟(CHUKAR) + + +

- + + + LIMEIDE + + +

- + + + WGJCE + + +

- + + + 玲魅 + + +

-

+

+

+

+ 京天(KOTIN)

+

+

+

+ 京天(KOTIN)

+

+

+ -

+

+

+

+ 神舟(HASEE)

+

+

+

+ 神舟(HASEE)

+

+

+ -

+

+

+

+ 轮廓(CENOZic)

+

+

+

+ 轮廓(CENOZic)

+

+

+ -

+

+

+

+ 零度世家

+

+

+

+ 零度世家

+

+

+ -

+

+

+

+ i-rocks

+

+

+

+ i-rocks

+

+

+ -

+

+

+

+ 宁美(NINGMEI)

+

+

+

+ 宁美(NINGMEI)

+

+

+ - + + + 帝伊工坊 + + +

- + + + 秋拓 + + +

-

+

+

+

+ 维恩克

+

+

+

+ 维恩克

+

+

+ -

+

+

+

+ B.FRIENDit

+

+

+

+ B.FRIENDit

+

+

+ -

+

+

+

+ 海尔(Haier)

+

+

+

+ 海尔(Haier)

+

+

+ -

+

+

+

+ F.L

+

+

+

+ F.L

+

+

+ -

+

+

+

+ HOBBYBOX

+

+

+

+ HOBBYBOX

+

+

+ - + + + 三侠 + + +

- + + + 心汐 + + +

- + + + 爵蝎(JUEXIE) + + +

-

+

+

+

+ 暗杀星

+

+

+

+ 暗杀星

+

+

+ - + + + 梵超 + + +

- + + + 良雫 + + +

- + + + FVYESH + + +

- + + + 百馨味 + + +

-

+

+

+

+ AJIUYU

+

+

+

+ AJIUYU

+

+

+ - + + + 轻松熊 + + +

-

+

+

+

+ 纽曼(Newmine)

+

+

+

+ 纽曼(Newmine)

+

+

+ - + + + 驰顾 + + +

- + + + 易科星 + + +

- + + + EGGUEA + + +

- + + + 雷腾 + + +

- + + + 逆狼 + + +

- + + + 极米熊 + + +

- + + + BULUO + + +

- + + + Readson + + +

- + + + 自由狼(ZIYOU LANG) + + +

-

+

+

+

+ 墨一(MOYi)

+

+

+

+ 墨一(MOYi)

+

+

+ - + + + isoojo + + +

-

+

+

+

+ 乾竡客

+

+

+

+ 乾竡客

+

+

+ -

+

+

+

+ 脉歌(Macaw)

+

+

+

+ 脉歌(Macaw)

+

+

+ -

+

+

+

+ a豆(adol)

+

+

+

+ a豆(adol)

+

+

+ - + + + Facroo + + +

- + + + 时代节点(ERA NODE) + + +

-

+

+

+

+ 锦读(JINDU)

+

+

+

+ 锦读(JINDU)

+

+

+ - + + + 机械师 + + +

- + + + 冰雷 + + +

-

+

+

+

+ 甘斯(GANSI)

+

+

+

+ 甘斯(GANSI)

+

+

+ -

+

+

+

+ 跆威(Tai wei)

+

+

+

+ 跆威(Tai wei)

+

+

+ - + + + 越飞传 + + +

-

+

+

+

+ 久宇

+

+

+

+ 久宇

+

+

+ - + + + 无尘谷 + + +

-

+

+

+

+ 纯彩(purecolor)

+

+

+

+ 纯彩(purecolor)

+

+

+ -

+

+

+

+ 优派(ViewSonic)

+

+

+

+ 优派(ViewSonic)

+

+

+ - + + + AHVBOT + + +

- + + + MIAVITO + + +

- + + + HAWOES + + +

- + + + 罗姿 + + +

- + + + 素咫(sozvr) + + +

-

+

+

+

+ 西部猎人(VV.HUNTER)

+

+

+

+ 西部猎人(VV.HUNTER)

+

+

+ - + + + 首爵 + + +

- + + + CVAOJUV + + +

- + + + 鑫片 + + +

-

+

+

+

+ GANSS

+

+

+

+ GANSS

+

+

+ - + + + 力鎂(LIMEIDE) + + +

-

+

+

+

+ 科乐多(KELEDUO)

+

+

+

+ 科乐多(KELEDUO)

+

+

+ -

+

+

+

+ 概力

+

+

+

+ 概力

+

+

+ -

+

+

+

+ 火影

+

+

+

+ 火影

+

+

+ -

+

+

+

+ 优想

+

+

+

+ 优想

+

+

+ -

+

+

+

+ 虎克(HUKE)

+

+

+

+ 虎克(HUKE)

+

+

+ -

+

+

+

+ 桑瑞得(Sunreed)

+

+

+

+ 桑瑞得(Sunreed)

+

+

+ -

+

+

+

+ 骨伽(COUGAR)

+

+

+

+ 骨伽(COUGAR)

+

+

+ - + + + HHKB + + +

- + + + 顾胜 + + +

- + + + 威润祺 + + +

-

+

+

+

+ 京炼

+

+

+

+ 京炼

+

+

+ - + + + SAELGAR + + +

- + + + 越俊云商 + + +

- + + + 赛瑟 + + +

-

+

+

+

+ JETech Design

+

+

+

+ JETech Design

+

+

+ -

+

+

+

+ 金正(NINTAUS)

+

+

+

+ 金正(NINTAUS)

+

+

+ -

+

+

+

+ RK ROYAL KLUDGE

+

+

+

+ RK ROYAL KLUDGE

+

+

+ - + + + 狄普(DiePuo) + + +

-

+

+

+

+ 亮朵(LIGHTDOT)

+

+

+

+ 亮朵(LIGHTDOT)

+

+

+ -

+

+

+

+ 索尼(SONY)

+

+

+

+ 索尼(SONY)

+

+

+ -

+

+

+

+ 姚膜

+

+

+

+ 姚膜

+

+

+ - + + + ROCCAT + + +

-

+

+

+

+ REALFORCE

+

+

+

+ REALFORCE

+

+

+ -

+

+

+

+ 小袋鼠(XIAO DAI SHU)

+

+

+

+ 小袋鼠(XIAO DAI SHU)

+

+

+ - + + + 咔咔鱼(KAKAY) + + +

-

+

+

+

+ 先马(SAMA)

+

+

+

+ 先马(SAMA)

+

+

+ - + + + 鹿为 + + +

- + + + AYALEY + + +

- + + + Corsair + + +

-

+

+

+

+ 米力

+

+

+

+ 米力

+

+

+ -

+

+

+

+ 虎猫(FMOUSE)

+

+

+

+ 虎猫(FMOUSE)

+

+

+ - + + + 酷奇(cooskin) + + +

- + + + HNM + + +

-

+

+

+

+ 成爵

+

+

+

+ 成爵

+

+

+ - + + + 优微客 + + +

- + + + 蓝盛(LENTION) + + +

- + + + 梵特科(FANTECH) + + +

- + + + 炽魂(Blasoul) + + +

- + + + Kensington + + +

-

+

+

+

+ 金陵声宝

+

+

+

+ 金陵声宝

+

+

+ -

+

+

+

+ 机械革命(MECHREVO)

+

+

+

+ 机械革命(MECHREVO)

+

+

+ -

+

+

+

+ 浦乐飞(PLUFY)

+

+

+

+ 浦乐飞(PLUFY)

+

+

+ - + + + 升派(ESPL) + + +

-

+

+

+

+ 品恒(PIHEN)

+

+

+

+ 品恒(PIHEN)

+

+

+ -

+

+

+

+ 英望

+

+

+

+ 英望

+

+

+ -

+

+

+

+ 派凡(oatsbasf)

+

+

+

+ 派凡(oatsbasf)

+

+

+ -

+

+

+

+ 一龙通金

+

+

+

+ 一龙通金

+

+

+ -

+

+

+

+ imice

+

+

+

+ imice

+

+

+ - + + + 苹果(Apple) + + +

-

+

+

+

+ 洋典(YANGDIAN)

+

+

+

+ 洋典(YANGDIAN)

+

+

+ - + + + 有道 + + +

-

+

+

+

+ 镖头(Biaotou)

+

+

+

+ 镖头(Biaotou)

+

+

+ -

+

+

+

+ 橙朗

+

+

+

+ 橙朗

+

+

+ -

+

+

+

+ 武极

+

+

+

+ 武极

+

+

+ - + + + 安麦威 + + +

-

+

+

+

+ 尼凡(Nifan)

+

+

+

+ 尼凡(Nifan)

+

+

+ -

+

+

+

+ 斯泰克(stiger)

+

+

+

+ 斯泰克(stiger)

+

+

+ -

+

+

+

+ 小乙(Ant Black)

+

+

+

+ 小乙(Ant Black)

+

+

+ - + + + 暴狼客 + + +

-

+

+

+

+ JINCOMSO

+

+

+

+ JINCOMSO

+

+

+ -

+

+

+

+ 宜适酷(EXCO)

+

+

+

+ 宜适酷(EXCO)

+

+

+ -

+

+

+

+ 镇魂歌

+

+

+

+ 镇魂歌

+

+

+ - + + + 七品 + + +

- + + + 嘻他 + + +

- + + + 韵果 + + +

- + + + BURJUMAN + + +

- + + + 宛丝希 + + +

- + + + 极梭(SPINDLEPOLE) + + +

-

+

+

+

+ 优本配

+

+

+

+ 优本配

+

+

+ - + + + Repair Your Life + + +

- + + + 荧阙 + + +

-

+

+

+

+ 列侯(LIEHOU)

+

+

+

+ 列侯(LIEHOU)

+

+

+ - + + + 梦叶夕(Mengyexi) + + +

- + + + 津浆 + + +

- + + + 万人迷(manovo) + + +

- + + + 御密达(YUMIDA) + + +

-

+

+

+

+ 菲尼拉(phylina)

+

+

+

+ 菲尼拉(phylina)

+

+

+ -

+

+

+

+ 酷嬷

+

+

+

+ 酷嬷

+

+

+ - + + + 逐讯 + + +

- + + + 素拓(SUTUO) + + +

- + + + 硬豹 + + +

- + + + Jelly Comb + + +

-

+

+

+

+ 阿米洛(Varmilo)

+

+

+

+ 阿米洛(Varmilo)

+

+

+ -

+

+

+

+ 启莱

+

+

+

+ 启莱

+

+

+ - + + + 雅诺仕(ANOCE) + + +

- + + + 峥图(ZHENGTU) + + +

-

+

+

+

+ 十八渡

+

+

+

+ 十八渡

+

+

+ - + + + 么航 + + +

- + + + 迪湃(FOPATI) + + +

- + + + 尚本 + + +

-

+

+

+

+ 艾拍宝(iPazzPort)

+

+

+

+ 艾拍宝(iPazzPort)

+

+

+ - + + + 索能(SUONENG) + + +

- + + + FUNKING + + +

-

+

+

+

+ 凯迪威

+

+

+

+ 凯迪威

+

+

+ - + + + 云里游(YUNLIYOU) + + +

-

+

+

+

+ 未来人类(Terrans Force)

+

+

+

+ 未来人类(Terrans Force)

+

+

+ -

+

+

+

+ 彩膜坊

+

+

+

+ 彩膜坊

+

+

+ -

+

+

+

+ Bonks

+

+

+

+ Bonks

+

+

+ - + + + YAIOCR + + +

- + + + 冠泽(GUANZE) + + +

- + + + 逆芝芙 + + +

-

+

+

+

+ 麦可莉(Macally)

+

+

+

+ 麦可莉(Macally)

+

+

+ -

+

+

+

+ 昊雄

+

+

+

+ 昊雄

+

+

+ - + + + 胖牛 + + +

- + + + 米物(MIIIW) + + +

-

+

+

+

+ BSN

+

+

+

+ BSN

+

+

+ - + + + 雷真 + + +

- + + + 鹊焗之 + + +

-

+

+

+

+ 天威(PrintRite)

+

+

+

+ 天威(PrintRite)

+

+

+ - + + + Dukotod + + +

-

+

+

+

+ 鑫喆

+

+

+

+ 鑫喆

+

+

+ - + + + 海琴烟 + + +

- + + + 吉润星 + + +

- + + + keychron + + +

-

+

+

+

+ 元迈

+

+

+

+ 元迈

+

+

+ - + + + 云派(witsp@d) + + +

-

+

+

+

+ 酷蛇(COOLSNAKE)

+

+

+

+ 酷蛇(COOLSNAKE)

+

+

+ - + + + 木丁丁 + + +

- + + + wkuk + + +

- + + + 鳗而登 + + +

-

+

+

+

+ 名龙堂(MLOONG)

+

+

+

+ 名龙堂(MLOONG)

+

+

+ -

+

+

+

+ 酷元素(KUYUANSU)

+

+

+

+ 酷元素(KUYUANSU)

+

+

+ -

+

+

+

+ 标拓

+

+

+

+ 标拓

+

+

+ -

+

+

+

+ cloud hin

+

+

+

+ cloud hin

+

+

+ - + + + 尚·雅 + + +

-

+

+

+

+ KH

+

+

+

+ KH

+

+

+ -

+

+

+

+ 广群达

+

+

+

+ 广群达

+

+

+ - + + + 希讯(HOPECENT) + + +

- + + + IZW + + +

- + + + 欢乐投 + + +

-

+

+

+

+ RS

+

+

+

+ RS

+

+

+ - + + + 优颐(YOUYI) + + +

-

+

+

+

+ 京俏

+

+

+

+ 京俏

+

+

+ - + + + 佐凡 + + +

- + + + 游戏狂人(GAME MADMAN) + + +

- + + + 尚雅 + + +

- + + + 橡儿 + + +

-

+

+

+

+ HRHPYM

+

+

+

+ HRHPYM

+

+

+ -

+

+

+

+ AWKICI

+

+

+

+ AWKICI

+

+

+ -

+

+

+

+ 埃普(UP)

+

+

+

+ 埃普(UP)

+

+

+ - + + + 摩加(Mojia) + + +

- + + + 耀胜(YAOSHENG) + + +

-

+

+

+

+ 范罗士(Fellowes)

+

+

+

+ 范罗士(Fellowes)

+

+

+ - + + + 画尚(halsanr) + + +

-

+

+

+

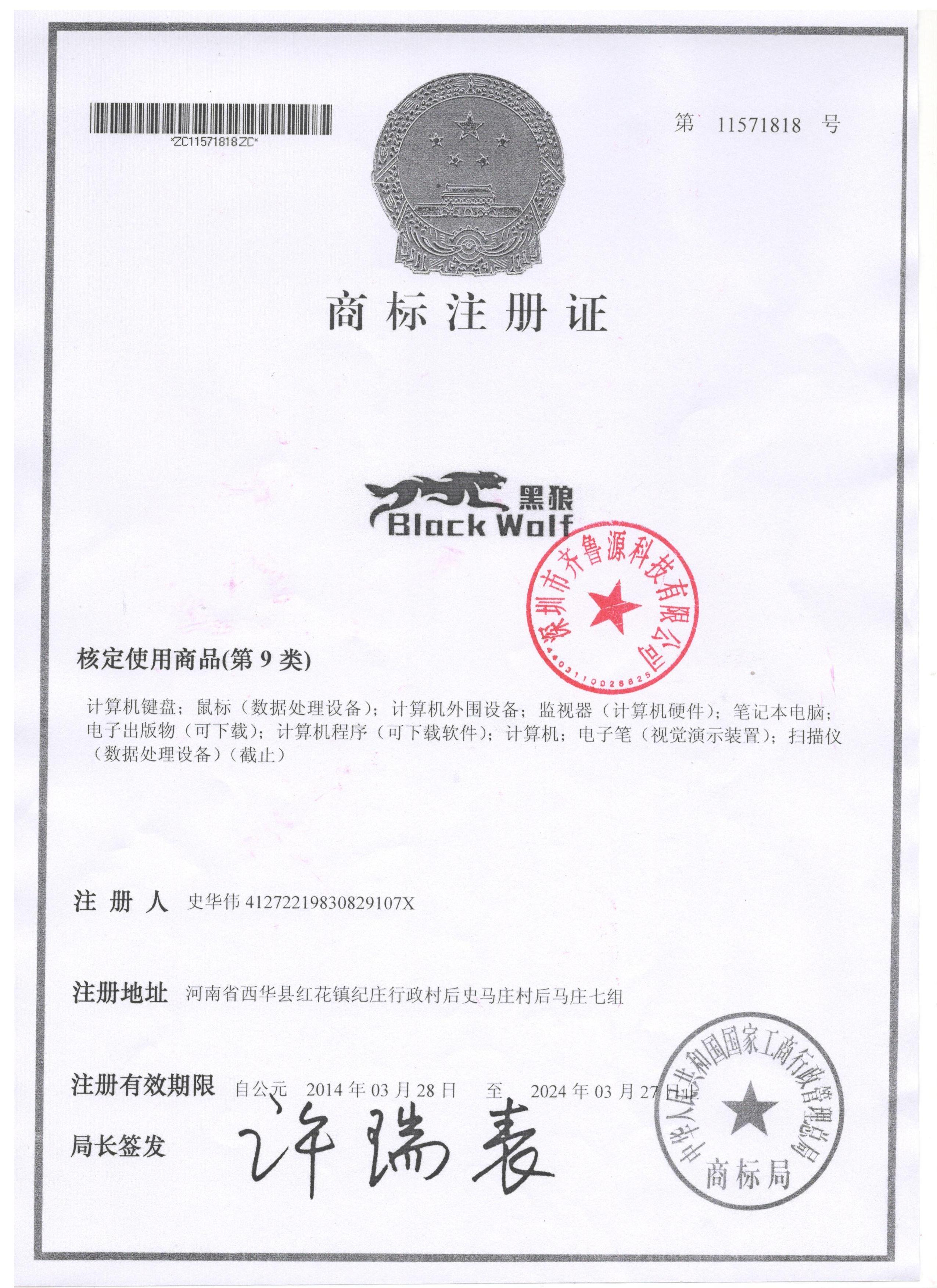

+ 黑狼(Black Wolf)

+

+

+

+ 黑狼(Black Wolf)

+

+

+ - + + + 同福茂 + + +

-

+

+

+

+ LOMAZOO

+

+

+

+ LOMAZOO

+

+

+ -

+

+

+

+ 安尚(ACTTO)

+

+

+

+ 安尚(ACTTO)

+

+

+ -

+

+

+

+ 维豹

+

+

+

+ 维豹

+

+

+ - + + + 小熊堂 + + +

- + + + 硕石(Suk Ore) + + +

-

+

+

+

+ 花朶

+

+

+

+ 花朶

+

+

+ -

+

+

+

+ 通优派

+

+

+

+ 通优派

+

+

+ -

+

+

+

+ 奥睿科(ORICO)

+

+

+

+ 奥睿科(ORICO)

+

+

+ -

+

+

+

+ 瀚沪(HANHU)

+

+

+

+ 瀚沪(HANHU)

+

+

+ - + + + CAPERE + + +

- + + + 悦皇(yuehuang) + + +

-

+

+

+

+ 绿联(UGREEN)

+

+

+

+ 绿联(UGREEN)

+

+

+

已选条件:

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+ 共69万+件商品

+

+

+

+

+-

+

+

- + + + +

- + + + +

- + + + +

- + + + +

- + + + +

- + + + +

- + + + +

- + + + +

- + + + +

- + + + +

- + + + +

- + + + +

- + + + +

- + + + +

- + + + +

-

+ +++ ++ +

+ + + +

+ + ¥199.00 + ++ + + + + +

+

+ - + + + +

- + + + +

- + + + +

- + + + +

- + + + +

- + + + +

- + + + +

- + + + +

- + + + +

- + + + +

- + + + +

- + + + +

- + + + +

- + + +

正在加载中,请稍后~~

+

+

+

+

+

+

+

+  +

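+(1) `ModuleNotFoundError: No module named 'xxxx'`

+

+- Why is there no error in PyCharm, but an error on the command line?

+

+ ```python

+ # PyCharm automatically adds the current project's root directory to the path list (sys.path)

+ ```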

+
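The difference can be observed directly. The sketch below uses only the standard library; the directory layout and the script name `show_path.py` are hypothetical. It runs a throwaway script the way a terminal would and reports the `sys.path[0]` that the script sees, which is the script's own directory rather than the project root that PyCharm adds.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def first_path_entry_of_script():
    """Run a throwaway script and return (script_dir, sys.path[0] seen by it)."""
    with tempfile.TemporaryDirectory() as tmp:
        script_dir = Path(tmp) / "project" / "pkg"  # hypothetical layout
        script_dir.mkdir(parents=True)
        script = script_dir / "show_path.py"
        script.write_text("import sys\nprint(sys.path[0])\n", encoding="utf-8")

        # Launch it the way a terminal would: python path/to/show_path.py
        proc = subprocess.run(
            [sys.executable, str(script)],
            capture_output=True, text=True, check=True,
        )
        seen = Path(proc.stdout.strip()).resolve()
        return script_dir.resolve(), seen


if __name__ == "__main__":
    script_dir, seen = first_path_entry_of_script()
    print("script directory:", script_dir)
    print("sys.path[0]     :", seen)
```

Because only the script's directory is on the path, a sibling package one level up is not importable unless the project root is added explicitly or the command is run from the root.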

+(2) `ModuleNotFoundError: No module named 'parser.search'; 'parser' is not a package`

+

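+- The custom package name conflicts with a built-in module name (here, `parser`)

+

+ Renaming the package fixes it

+

+- The imported name is not a package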

+
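A quick way to spot such a clash before choosing a package name is to ask the interpreter which module names it already owns. This is a minimal sketch, not a complete check: `sys.stdlib_module_names` exists only on Python 3.10 and later, so on older interpreters the helper below (a hypothetical name) only sees modules compiled into the interpreter.

```python
import sys


def clashes_with_stdlib(name):
    """Return True if `name` is also a built-in or standard-library module name."""
    # sys.builtin_module_names lists modules compiled into the interpreter.
    # sys.stdlib_module_names is only available on Python 3.10+, hence the fallback.
    known = set(sys.builtin_module_names) | set(getattr(sys, "stdlib_module_names", ()))
    return name in known


if __name__ == "__main__":
    for candidate in ("sys", "jd_parser"):
        print(candidate, clashes_with_stdlib(candidate))
```

On interpreters older than 3.10, `parser` was itself a standard-library module, which is why a local package with that name triggers the error in (2); a distinct name such as `jd_parser` avoids it.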

+

+

+(3) `ModuleNotFoundError: No module named '__main__.jd_parser'; '__main__' is not a package`

+

+- **The entry-point script cannot use relative imports**

+

+- `__main__`

+

+ The module name of the main program is replaced with `__main__`

+

+ ```python

+

+ if __name__ == "__main__":  # entry point

+     # stands in for the producer

+     mysql_con = pymysql.connect(**MYSQL_CONF)

+ ```

+
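The rule can be checked with a throwaway package. The sketch below assumes only the standard library; the names `demo_pkg`, `helper.py` and `entry.py` are hypothetical. The same module is run twice: once as a script, where its name becomes `__main__` and the relative import fails, and once with `-m`, where it keeps its package context. The exact error text differs between Python versions, so only the exit status is inspected.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def run_both_ways():
    """Run one module as a script and with -m; return both completed processes."""
    with tempfile.TemporaryDirectory() as tmp:
        pkg = Path(tmp) / "demo_pkg"  # hypothetical package name
        pkg.mkdir()
        (pkg / "__init__.py").write_text("", encoding="utf-8")
        (pkg / "helper.py").write_text("VALUE = 42\n", encoding="utf-8")
        (pkg / "entry.py").write_text(
            "from .helper import VALUE\nprint(VALUE)\n", encoding="utf-8"
        )

        # 1) python demo_pkg/entry.py -> module name is __main__, no parent package
        as_script = subprocess.run(
            [sys.executable, str(pkg / "entry.py")],
            cwd=tmp, capture_output=True, text=True,
        )
        # 2) python -m demo_pkg.entry -> the module still belongs to demo_pkg
        as_module = subprocess.run(
            [sys.executable, "-m", "demo_pkg.entry"],
            cwd=tmp, capture_output=True, text=True,
        )
        return as_script, as_module


if __name__ == "__main__":
    as_script, as_module = run_both_ways()
    print("script exit code:", as_script.returncode)
    print("module exit code:", as_module.returncode, as_module.stdout.strip())
```

In practice this means the entry point should either use absolute imports or be started with `python -m package.module` from the project root.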

+

+

+(4) `ValueError: attempted relative import beyond top-level package`

+

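+The relative import climbs above the top-level package that Python knows about

+

+```python

+from tutorial_2.jd_crawler.jd_parser.search import parse_jd_item

+

+# "top-level package" means the first component of the import path above (tutorial_2), not the directory structure on disk

+```

+

+- Add the working directory to the path list

+- Run the command from the project root directory

+- Both of the above amount to adding the project root directory to the path list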

+
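Adding the root to the path list does not require a hard-coded absolute path. A minimal sketch, assuming the entry-point file sits one directory below the project root (the helper name `add_project_root` and the `levels_up` parameter are hypothetical):

```python
import sys
from pathlib import Path


def add_project_root(module_file, levels_up=1):
    """Put an ancestor directory of `module_file` on sys.path and return it.

    levels_up=0 is the directory containing the file,
    levels_up=1 is the parent of that directory, and so on.
    """
    root = str(Path(module_file).resolve().parents[levels_up])
    if root not in sys.path:
        sys.path.insert(0, root)  # searched before the other entries
    return root
```

Called as `add_project_root(__file__, levels_up=1)` at the top of the entry-point script, before any project imports, this keeps the path correct when the project is moved to another machine or drive.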

+

+


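+# Notes

+

+- Decide on a single entry-point script; without an anchored path there is no way to manage relative paths

+- Add the project root directory to the path list from within the entry-point script

+- Run commands from the project root directory

+- Do not nest the project directory structure too deeply

+- For script files, or when temporarily running a function from a single module, the root directory can be added to the **path list** temporarily

+

+# Homework

+

+- Start `jd_crawler` from the command line

+- Add a `parser_test.py` module in the `/test` directory to test the parser.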

\ No newline at end of file

diff --git "a/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/CSS-BeautifulSoup\345\205\203\347\264\240\345\256\232\344\275\215-9-2noteyun -0309\350\241\245.md" "b/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/CSS-BeautifulSoup\345\205\203\347\264\240\345\256\232\344\275\215-9-2noteyun -0309\350\241\245.md"

new file mode 100644

index 0000000000000000000000000000000000000000..e5b75e91ba6c6f6880e3ba5ad765e1eaa8dcac6e

--- /dev/null

+++ "b/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/CSS-BeautifulSoup\345\205\203\347\264\240\345\256\232\344\275\215-9-2noteyun -0309\350\241\245.md"

@@ -0,0 +1,251 @@

+9-2noteyun

+

+# css-selector

+

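+> Where possible, avoid positional information in selector paths

+

+> In the Chrome DevTools console, `$` is jQuery when the page loads it (the examples below rely on this); otherwise `$` is a DevTools shorthand for `document.querySelector`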

+

+## Locating elements directly

+

+- Locate by id

+

+ ```javascript

+ $("#id-value")

+ ```

+

+- Locate by class

+

+ ```javascript

+ $(".class-value")

+ ```

+

+- **Locate by attribute**

+

+ ```javascript

+ $("tag[attribute='value']")

+

+ $("ul[class='gl-warp clearfix']")

+ ```

+

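+## Getting sibling nodes

+

+- Get the node after the current one

+

+ - An interface provided by the DOM, not part of css-selector syntax

+

+ ```javascript

+ tmp = $("li[data-sku='6039832']")[0]

+ tmp.nextElementSibling  // next sibling: the product to the right

+ ```

+

+ - Via css-selector (not recommended)

+

+ ```javascript

+ $("ul[class='gl-warp clearfix'] li:first-child + li")

+ ```

+

+- Get the node before the current one

+

+ - An interface provided by the DOM, not part of css-selector syntax

+

+ ```javascript

+ tmp = $("li[data-sku='2136538']")[0]

+ tmp.previousElementSibling  // previous sibling: the product to the left

+ ```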

+

+## Getting parent and child nodes

+

+- Get the parent node

+

+ - An interface provided by the DOM, not part of css-selector syntax

+

+ ```javascript

+ tmp.parentElement

+ ```

+

+- Get child nodes

+

+ - Get all child nodes

+

+ - **Iterate over** all matching elements

+

+ ```javascript

+ $("ul[class='gl-warp clearfix'] div[class='gl-i-wrap']")

+

+ $("ul[class='gl-warp clearfix'] li[class='gl-item']")[0]

+ ```

+

+ - An interface provided by the DOM, not part of css-selector syntax

+

+ ```javascript

+ $("ul[class='gl-warp clearfix']")[0].children

+ ```

+

+ - Get the first child node

+

+ ```javascript

+ // :first-child

+ $("ul[class='gl-warp clearfix'] li:first-child")[0]

+ ```

+

+ - Get the last child node

+

+ ```javascript

+ // :last-child

+ $("ul[class='gl-warp clearfix'] li:last-child")[0]

+ ```

+

+ - Get the Nth child node

+

+ ```javascript

+ // :nth-child(index): get the fifth child (the index is 1-based)

+ $("ul[class='gl-warp clearfix'] li:nth-child(5)")[0]

+ ```

+
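The parent, child and Nth-child lookups above can be tried without a browser. The sketch below is an illustration using the standard library's `xml.dom.minidom`, which exposes DOM-style properties; the markup and sku values are made up, and the helper names are hypothetical. It also shows the off-by-one between `:nth-child(n)`, which counts from 1, and a Python list, which counts from 0.

```python
from xml.dom import minidom

# A tiny stand-in for the product list; the sku values are made up.
MARKUP = (
    "<ul class='gl-warp clearfix'>"
    "<li data-sku='101'/><li data-sku='102'/><li data-sku='103'/>"
    "</ul>"
)


def element_children(node):
    """Return only the element children of `node`, skipping text nodes."""
    return [c for c in node.childNodes if c.nodeType == c.ELEMENT_NODE]


def nth_child(node, n):
    """Mimic :nth-child(n), which counts from 1, on a 0-based Python list."""
    return element_children(node)[n - 1]


ul = minidom.parseString(MARKUP).documentElement
items = element_children(ul)
first, last, second = items[0], items[-1], nth_child(ul, 2)

print(first.getAttribute("data-sku"))   # first child
print(last.getAttribute("data-sku"))    # last child
print(second.getAttribute("data-sku"))  # second child
print(second.parentNode.tagName)        # parent of any <li>
```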

+

+

+# Fuzzy matching

+

+- Match the start of the value

+

+ `^=`

+

+ ```javascript

+ // match elements whose data-sku attribute value starts with 2

+ $("li[data-sku^='2']")

+ ```

+

+- Match the end of the value

+

+ `$=`

+

+ ```javascript

+ $("li[data-sku$='2']")

+ ```

+

+- Match a substring of the value

+

+ `*=`

+

+ ```javascript

+ $("li[data-sku*='2']")

+ ```

+

+- Get the text value

+

+ ```javascript

+ $("li[data-sku='6039832'] div[class='p-name p-name-type-2'] em")[0].innerText

+ ```

+

+- Get an attribute value

+

+ ```javascript

+ $("ul[class='gl-warp clearfix'] li")[0].getAttribute("data-sku")

+ ```

+
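The three fuzzy attribute operators map onto ordinary string tests. The following sketch restates them in plain Python so their behaviour can be checked on sample values; the function name `attr_matches` and the third sku are hypothetical, and this is an illustration of the semantics, not how a selector engine is implemented.

```python
def attr_matches(value, operator, needle):
    """Evaluate one CSS attribute operator against an attribute value."""
    if operator == "=":
        return value == needle
    if needle == "":
        # For ^=, $= and *= an empty needle matches nothing in CSS.
        return False
    if operator == "^=":
        return value.startswith(needle)
    if operator == "$=":
        return value.endswith(needle)
    if operator == "*=":
        return needle in value
    raise ValueError(f"unsupported operator: {operator}")


skus = ["2136538", "6039832", "100012"]  # sample data-sku values
for op in ("^=", "$=", "*="):
    print(op, [s for s in skus if attr_matches(s, op, "2")])
```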

+

+

+# BeautifulSoup

+

+- Install

+

+ ```shell

+ pip install beautifulsoup4

+ pip install lxml

+ ```

+

+- Use BeautifulSoup

+

+ ```python

+ from bs4 import BeautifulSoup

+

+

+ def jd_search_parse(html):

+     soup = BeautifulSoup(html, "lxml")

+     item = soup.select("li[data-sku='6039832']")[0]

+ ```

+

+- Locate elements directly

+

+ Omitted (pass the same css-selector strings to `soup.select`)

+

+- Strip whitespace characters

+

+ ```python

+ html = html.replace('\r\n', "").replace("\n", "").replace("\t", "")

+ ```

+

+

+

+- Get sibling nodes

+

+ - Get the previous node

+

+ ```python

+ tmp_ele.previous_sibling

+ ```

+

+ - Get the next node

+

+ ```python

+ tmp_ele.next_sibling

+ ```

+

+- Get parent and child nodes

+

+ - Get the parent node

+

+ ```python

+ tmp_ele.parent

+ ```

+

+ - Get the child nodes

+

+ ```python

+ tmp_ele.children

+ ```

+

+- Fuzzy matching

+

+ Omitted

+

+- Get the text value

+

+ ```python

+ content = tmp_ele.text.strip()

+ ```

+

+- Get an attribute value

+

+ ```python

+ value = tmp_ele.attrs["data-sku"]

+ ```

+
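The whitespace-stripping step in the list above matters because sibling navigation walks over every node, including the text between tags. The sketch below demonstrates the effect with the standard library's `xml.dom.minidom` as a stand-in; BeautifulSoup's `.next_sibling` likewise returns the text between two tags when there is any. The markup and sku values are made up.

```python
from xml.dom import minidom

RAW = "<ul>\n\t<li data-sku='101'/>\n\t<li data-sku='102'/>\n</ul>"


def next_sibling_of_first_li(markup):
    """Return the node that directly follows the first <li>."""
    ul = minidom.parseString(markup).documentElement
    first_li = ul.getElementsByTagName("li")[0]
    return first_li.nextSibling


before = next_sibling_of_first_li(RAW)

# Same clean-up as in the notes: drop line breaks and tabs before parsing.
cleaned = RAW.replace("\r\n", "").replace("\n", "").replace("\t", "")
after = next_sibling_of_first_li(cleaned)

print(before.nodeType == before.TEXT_NODE)    # a whitespace-only text node
print(after.nodeType == after.ELEMENT_NODE)   # the second <li>
print(after.getAttribute("data-sku"))
```

Without the clean-up, the "next sibling" of a product is a whitespace text node rather than the next product, which is an easy source of `AttributeError` when the result is treated as a tag.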

+

+

+

+

+

+

+ debug: after the 50-minute mark;

+

+# Homework

+

+- Practice css-selector

+- Practice parsing pages with BeautifulSoup

\ No newline at end of file

diff --git "a/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/001.py" "b/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/001.py"

new file mode 100644

index 0000000000000000000000000000000000000000..229d72edceb621b08c99bb6919500be82b3d178a

--- /dev/null

+++ "b/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/001.py"

@@ -0,0 +1,6 @@

+import sys

+print(sys.path)  # print the path list

+# ['E:\\PycharmProjects\\train002_1231\\second-python-bootcamp\\第二期训练营\\5班\\5班_云\\第9周0222--0228\\9-3\\jd_crawler', 'E:\\PycharmProjects\\train002_1231\\second-python-bootcamp', 'E:\\PycharmProjects\\train002_1231\\second-python-bootcamp\\第二期训练营\\5班\\5班_云\\第9周0222--0228\\9-3\\jd_crawler', 'E:\\PycharmProjects\\train002_1231\\second-python-bootcamp\\第二期训练营\\5班\\5班_云\\第9周0222--0228\\9-3', 'D:\\bsoft\\ananaconda\\python37.zip', 'D:\\bsoft\\ananaconda\\DLLs', 'D:\\bsoft\\ananaconda\\lib', 'D:\\bsoft\\ananaconda', 'D:\\bsoft\\ananaconda\\lib\\site-packages', 'D:\\bsoft\\ananaconda\\lib\\site-packages\\win32', 'D:\\bsoft\\ananaconda\\lib\\site-packages\\win32\\lib', 'D:\\bsoft\\ananaconda\\lib\\site-packages\\Pythonwin']

+# sys.path.append(r"H:\PyCharmProjects\tutorials_2")  # inflexible: hard-coded absolute path

+print("001",__name__)

+# sys.path.append(r"E:\PycharmProjects\Spider_Code\9-3")

diff --git "a/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/jd_parser/detail.py" "b/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/jd_parser/detail.py"

new file mode 100644

index 0000000000000000000000000000000000000000..e69de29bb2d1d6434b8b29ae775ad8c2e48c5391

diff --git "a/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/jd_parser/search.py" "b/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/jd_parser/search.py"

new file mode 100644

index 0000000000000000000000000000000000000000..1d1c7de8d90ceee46722cdda79b5159b969ed987

--- /dev/null

+++ "b/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/jd_parser/search.py"

@@ -0,0 +1,39 @@

+from bs4 import BeautifulSoup

+import json

+

+def parse_jd_item(html):  # parser

+    result = []  # collects the parsed items

+

+    soup = BeautifulSoup(html, "lxml")

+    print(soup)  # debug output: dump the parsed document

+    item_array = soup.select("ul[class='gl-warp clearfix'] li[class='gl-item']")

+    # console equivalent: $("ul[class='gl-warp clearfix'] li[class='gl-item']")

+    for item in item_array:

+        try:

+            sku_id = item.attrs["data-sku"]

+            img = item.select("img[data-img='1']")

+            price = item.select("div[class='p-price']")

+            title = item.select("div[class='p-name p-name-type-2']")

+            shop = item.select("div[class='p-shop']")

+            icons = item.select("div[class='p-icons']")

+            # print(img)

+

+            # select() returns a list; fall back to a default when it is empty

+            img = img[0].attrs['data-lazy-img'] if img else ""

+            price = price[0].strong.i.text if price else ""

+            title = title[0].text.strip() if title else ""

+            shop = shop[0].a.attrs['title'] if shop and shop[0].text.strip() else ""

+            icons = json.dumps([tag_ele.text for tag_ele in icons[0].select("i")]) if icons else '[]'

+

+            result.append((sku_id, img, price, title, shop, icons))  # collect the result

+        except Exception as e:

+            print(e.args)

+    return result

+

+#

+# if __name__ == "__main__":

+#     with open(r"..\\test\\search.html", "r", encoding="utf-8") as f:

+#

+#         html = f.read()

+#         result = parse_jd_item(html)

+#         print(result)

\ No newline at end of file
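`parse_jd_item` stores the icon tags as a JSON string so that a list fits into a single column. One detail worth knowing is how `json.dumps` treats non-ASCII text. The sketch below uses made-up tag texts in place of the `<i>` elements and compares the default output with `ensure_ascii=False`; both forms decode back to the same list.

```python
import json

icons = ["自营", "放心购"]  # sample tag texts, standing in for the <i> elements

escaped = json.dumps(icons)                       # default: non-ASCII becomes \uXXXX
readable = json.dumps(icons, ensure_ascii=False)  # keeps the characters as they are

print(escaped)
print(readable)
print(json.loads(escaped) == json.loads(readable) == icons)
```

The escaped form is safe for any column encoding; the readable form is easier to inspect in a database client but needs a UTF-8 capable column.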

diff --git "a/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/main.py" "b/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/main.py"

new file mode 100644

index 0000000000000000000000000000000000000000..b3056f5fb2dfbd7e29986db1f876daf392bd6cba

--- /dev/null

+++ "b/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/main.py"

@@ -0,0 +1,61 @@

+import random

+import pymysql

+import requests

+import sys

+sys.path.append(r"E:\PycharmProjects\Spider_Code\week9_3")

+sys.path.append(r"E:\PycharmProjects\Spider_Code\week9_3\jd_crawler")

+print(sys.path)

+

+from jd_crawler.jd_parser.search import parse_jd_item

+from jd_crawler.settings import MYSQL_CONF, HEADERS

+

+

+def saver(item_array):  # save to the SQL database

+    """

+    Persist the crawl results

+    :param item_array:

+    :return:

+    """

+    cursor = mysql_con.cursor()  # open a new cursor for each call

+    SQL = """INSERT INTO jd_search(sku_id,img,price, title, shop, icons)

+    VALUES ( %s,%s, %s, %s, %s, %s)"""

+    cursor.executemany(SQL, item_array)

+    mysql_con.commit()  # commit the inserts

+    cursor.close()  # close the cursor

+

+def downloader(task):  # downloader

+    """

+    Downloader

+    The component that requests the target URL

+    :param task:

+    :return:

+    """

+    url = "https://search.jd.com/Search"

+    params = {

+        "keyword": task  # one search keyword from the task list

+    }

+    res = requests.get(url=url, params=params, headers=HEADERS, timeout=10,

+                       # proxies={"https": f"https:144.255.48.62","http": f"http:144.255.48.62"}

+                       )

+    return res

+

+

+def main(task_array):  # main function, the scheduler

+    """

+    Schedule the crawler tasks

+    :return:

+    """

+    for task in task_array:

+        result = downloader(task)  # downloader

+        item_array = parse_jd_item(result.text)  # parser

+        print("GET ITEMS", item_array)  # debug output: check that each step ran correctly

+        saver(item_array)

+

+

+if __name__ == "__main__":  # entry point

+    # stands in for the producer

+    mysql_con = pymysql.connect(**MYSQL_CONF)

+    task_array = ["鼠标", "键盘", "显卡", "耳机"]  # mouse, keyboard, graphics card, headphones

+    main(task_array)

\ No newline at end of file
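The `saver` step (one cursor, `executemany` with placeholders, then `commit`) can be tried without a MySQL server. The sketch below swaps in the standard library's `sqlite3` with an in-memory database, so it is a stand-in and not the project's actual storage; the table layout and the sample rows are assumptions made for the demonstration.

```python
import sqlite3

# Two made-up rows in the same order as the tuples built by the parser.
rows = [
    ("101", "//img.example/a.jpg", "199.00", "Sample mouse", "Sample shop", '["tag"]'),
    ("102", "//img.example/b.jpg", "59.90", "Sample keyboard", "", "[]"),
]

con = sqlite3.connect(":memory:")  # in-memory database, nothing is written to disk
con.execute(
    "CREATE TABLE jd_search (sku_id TEXT, img TEXT, price TEXT,"
    " title TEXT, shop TEXT, icons TEXT)"
)

# sqlite3 uses "?" placeholders where pymysql uses "%s".
cursor = con.cursor()
cursor.executemany("INSERT INTO jd_search VALUES (?, ?, ?, ?, ?, ?)", rows)
con.commit()
cursor.close()

count = con.execute("SELECT COUNT(*) FROM jd_search").fetchone()[0]
print(count)
con.close()
```

In both drivers the values travel separately from the SQL text, so quotes inside a product title cannot break the statement.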

diff --git "a/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/settings.py" "b/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/settings.py"

new file mode 100644

index 0000000000000000000000000000000000000000..d260ebbe81a4237833c331fedfe1fe8d34055935

--- /dev/null

+++ "b/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/settings.py"

@@ -0,0 +1,14 @@

+# Settings file

+# Request headers

+HEADERS = {

+    "user-agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.141 Safari/537.36",

+    # "upgrade-insecure-requests": "1"

+}

+

+# Database configuration

+MYSQL_CONF = {

+    "host": "127.0.0.1",

+    "user": "root",

+    "password": "123456",

+    "db": "py_class"

+}

\ No newline at end of file
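Committing a database password in `settings.py` is convenient for a course exercise but is easy to leak. One possible alternative, sketched under the assumption that the values are supplied through environment variables (the `JD_MYSQL_*` names and the helper are hypothetical, not part of the project):

```python
import os


def mysql_conf_from_env(environ=os.environ):
    """Build the connection settings, preferring environment variables."""
    return {
        "host": environ.get("JD_MYSQL_HOST", "127.0.0.1"),
        "user": environ.get("JD_MYSQL_USER", "root"),
        "password": environ.get("JD_MYSQL_PASSWORD", ""),  # no secret in the source
        "db": environ.get("JD_MYSQL_DB", "py_class"),
    }


if __name__ == "__main__":
    conf = mysql_conf_from_env()
    print({key: ("***" if key == "password" else value) for key, value in conf.items()})
```

The returned dictionary has the same keys as `MYSQL_CONF`, so it can be unpacked into the connection call in the same way.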

diff --git "a/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/test/parser_test.py" "b/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/test/parser_test.py"

new file mode 100644

index 0000000000000000000000000000000000000000..779883b2bb5919b18abb6846b2bc2e9791fef706

--- /dev/null

+++ "b/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/test/parser_test.py"

@@ -0,0 +1,12 @@

+import sys

+sys.path.append(r"E:\PycharmProjects\Spider_Code\week9_3\jd_crawler")

+print(sys.path)

+

+from jd_crawler.jd_parser.search import parse_jd_item

+

+with open(r"..\\test\\search.html", "r", encoding="utf-8") as f:

+    html = f.read()

+    result = parse_jd_item(html)

+    print(result)

+

+    # with open(r"..\\test\\search.html", "r", encoding="utf-8") as f:

\ No newline at end of file

diff --git "a/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/test/search.html" "b/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/test/search.html"

new file mode 100644

index 0000000000000000000000000000000000000000..0f67859bf30f108ca01618bdfc3a29b171484be0

--- /dev/null

+++ "b/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/test/search.html"

@@ -0,0 +1,6615 @@

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

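+# Notes

+

+- Decide on a single entry-point program; without an anchored path, relative paths cannot be managed.

+- Add the project root directory to the module search path in the entry-point program.

+- Run commands from the project root directory.

+- Do not nest the project directory structure too deeply.

+- For a script file, or when temporarily running a function from a single module, the root directory can be added temporarily to the **path list** (`sys.path`).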
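One way to follow the notes above without hard-coding an absolute path is to derive the project root from the entry file's own location. The sketch below is a minimal illustration, assuming the entry file sits one directory below the root; `add_project_root`, `levels_up`, and the `some_root` path are hypothetical names and not part of the course code.

```python
import sys
from pathlib import Path


def add_project_root(entry_file: str, levels_up: int = 1) -> Path:
    """Append the directory `levels_up` levels above `entry_file` to sys.path.

    Hypothetical helper for illustration; parents[0] is the file's own folder.
    """
    root = Path(entry_file).resolve().parents[levels_up]
    root_str = str(root)
    if root_str not in sys.path:  # avoid duplicate entries on repeated calls
        sys.path.append(root_str)
    return root


# For <root>/jd_crawler/main.py, parents[1] is <root>: the directory that
# contains the jd_crawler package, so `import jd_crawler...` can resolve.
example_entry = Path("some_root") / "jd_crawler" / "main.py"
root = add_project_root(str(example_entry), levels_up=1)
print(root.name)  # some_root
```

In a real entry point the call would typically be `add_project_root(__file__)`, placed before any imports from the project package.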

+

+# Homework

+

+- Start `jd_crawler` from the command line.

+- Add a `parser_test.py` module to the `/test` directory to test the parser.

\ No newline at end of file

diff --git "a/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/CSS-BeautifulSoup\345\205\203\347\264\240\345\256\232\344\275\215-9-2noteyun -0309\350\241\245.md" "b/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/CSS-BeautifulSoup\345\205\203\347\264\240\345\256\232\344\275\215-9-2noteyun -0309\350\241\245.md"

new file mode 100644

index 0000000000000000000000000000000000000000..e5b75e91ba6c6f6880e3ba5ad765e1eaa8dcac6e

--- /dev/null

+++ "b/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/CSS-BeautifulSoup\345\205\203\347\264\240\345\256\232\344\275\215-9-2noteyun -0309\350\241\245.md"

@@ -0,0 +1,251 @@

+9-2noteyun

+

+# css-selector

+

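+> Avoid positional information in parsing paths wherever possible.

+

+> On pages that load jQuery (JD does), `$` in the Chrome console is the jQuery function; on other pages the console's `$` is an alias for `document.querySelector`.

+

+## Locating elements directly

+

+- Locate by id

+

+ ```javascript

+ $("#id-value")

+ ```

+

+- Locate by class

+

+ ```javascript

+ $(".class-value")

+ ```

+

+- **Locate by attribute**

+

+ ```javascript

+ $("tag[attribute='value']")

+

+ $("ul[class='gl-warp clearfix']")

+ ```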

+

+## Getting sibling nodes

+

+- Get the node after the current node

+

+ - A DOM API, not part of css-selector syntax

+

+ ```javascript

+ tmp = $("li[data-sku='6039832']")[0]

+ tmp.nextElementSibling  // next sibling: the product to the right

+ ```

+

+ - Via css-selector (not recommended)

+

+ ```javascript

+ $("ul[class='gl-warp clearfix'] li:first-child + li")

+ ```

+

+- Get the node before the current node

+

+ - A DOM API, not part of css-selector syntax

+

+ ```javascript

+ tmp = $("li[data-sku='2136538']")[0]

+ tmp.previousElementSibling  // previous sibling: the product to the left

+ ```

+

+## Getting parent and child nodes

+

+- Get the parent node

+

+ - A DOM API, not part of css-selector syntax

+

+ ```javascript

+ tmp.parentElement

+ ```

+

+- Get child nodes

+

+ - Get all child nodes

+

+ - **Iterate over** all elements that match the selector

+

+ ```javascript

+ $("ul[class='gl-warp clearfix'] div[class='gl-i-wrap']")

+

+ $("ul[class='gl-warp clearfix'] li[class='gl-item']")[0]

+ ```

+

+ - A DOM API, not part of css-selector syntax

+

+ ```javascript

+ $("ul[class='gl-warp clearfix']")[0].children

+ ```

+

+ - Get the first child node

+

+ ```javascript

+ // :first-child

+ $("ul[class='gl-warp clearfix'] li:first-child")[0]

+ ```

+

+ - Get the last child node

+

+ ```javascript

+ // :last-child

+ $("ul[class='gl-warp clearfix'] li:last-child")[0]

+ ```

+

+ - Get the Nth child node

+

+ ```javascript

+ // :nth-child(n) is 1-based; this gets the fifth child

+ $("ul[class='gl-warp clearfix'] li:nth-child(5)")[0]

+ ```

+

+

+

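+# Fuzzy matching

+

+- Match the start of a value

+

+ `^=`

+

+ ```javascript

+ // Match elements whose data-sku attribute value starts with 2

+ $("li[data-sku^='2']")

+ ```

+

+- Match the end of a value

+

+ `$=`

+

+ ```javascript

+ $("li[data-sku$='2']")

+ ```

+

+- Match a substring

+

+ `*=`

+

+ ```javascript

+ $("li[data-sku*='2']")

+ ```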

+

+

+

+- Get the text value

+

+ ```javascript

+ $("li[data-sku='6039832'] div[class='p-name p-name-type-2'] em")[0].innerText

+ ```

+

+

+

+

+

+

+

+- Get an attribute value

+

+ ```javascript

+ $("ul[class='gl-warp clearfix'] li")[0].getAttribute("data-sku")

+ ```
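The three fuzzy-match operators in this section (`^=`, `$=`, `*=`) compare attribute values as plain strings. As a rough illustration of what each one selects, the sketch below applies the equivalent Python string tests to a few made-up `data-sku` values; the values are hypothetical and not taken from the page.

```python
# Hypothetical data-sku values, for illustration only.
skus = ["6039832", "2136538", "100012043978", "72"]

# li[data-sku^='2']: the value starts with "2"
starts_with_2 = [s for s in skus if s.startswith("2")]
# li[data-sku$='2']: the value ends with "2"
ends_with_2 = [s for s in skus if s.endswith("2")]
# li[data-sku*='2']: the value contains "2" anywhere
contains_2 = [s for s in skus if "2" in s]

print(starts_with_2)  # ['2136538']
print(ends_with_2)    # ['6039832', '72']
print(contains_2)     # all four values contain a "2"
```

Because `*=` matches anywhere in the value, it is the loosest of the three and tends to select far more elements than intended on short patterns.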

+

+

+

+# BeautifulSoup

+

+- Installation

+

+ ```shell

+ pip install beautifulsoup4

+ pip install lxml

+ ```

+

+- Using BeautifulSoup

+

+ ```python

+ from bs4 import BeautifulSoup

+

+

+ def jd_search_parse(html):

+     soup = BeautifulSoup(html, "lxml")

+     item = soup.select("li[data-sku='6039832']")[0]

+ ```

+

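+- Locating elements directly

+

+ Omitted.

+

+- Strip whitespace characters (otherwise the whitespace between tags shows up as text-node siblings)

+

+ ```python

+ html = html.replace('\r\n', "").replace("\n", "").replace("\t", "")

+ ```

+

+- Getting sibling nodes

+

+ - Get the previous node

+

+ ```python

+ tmp_ele.previous_sibling

+ ```

+

+ - Get the next node

+

+ ```python

+ tmp_ele.next_sibling

+ ```

+

+- Getting parent and child nodes

+

+ - Get the parent node

+

+ ```python

+ tmp_ele.parent

+ ```

+

+ - Get child nodes

+

+ ```python

+ tmp_ele.children

+ ```

+

+- Fuzzy matching

+

+ Omitted.

+

+- Get the text value

+

+ ```python

+ content = tmp_ele.text.strip()

+ ```

+

+- Get an attribute value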

+

+ ```python

+ value = tmp_ele.attrs["data-sku"]

+ ```
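The navigation calls listed above can be tried on a small fragment without fetching a real page. The sketch below is a minimal illustration under stated assumptions: the HTML is a made-up fragment shaped like a JD result list, and it uses the built-in `"html.parser"` instead of `"lxml"` so that it runs without the extra dependency. It also shows why the notes strip whitespace first: the raw `next_sibling` of a tag is often a whitespace text node, while `find_next_sibling` skips straight to the next tag.

```python
from bs4 import BeautifulSoup

# Hypothetical fragment shaped like a JD search result list (not real data).
html = """
<ul class="gl-warp clearfix">
  <li class="gl-item" data-sku="111"><div class="p-name"><em> Mouse A </em></div></li>
  <li class="gl-item" data-sku="222"><div class="p-name"><em>Mouse B</em></div></li>
</ul>
"""

soup = BeautifulSoup(html, "html.parser")
first = soup.select("li[data-sku='111']")[0]

# Raw sibling: the whitespace text node between the two <li> tags.
raw_next = first.next_sibling
# Tag-only sibling: skips text nodes and returns the next <li>.
next_li = first.find_next_sibling("li")

print(repr(raw_next))
print(next_li.attrs["data-sku"])            # 222
print(first.parent.name)                    # ul
print(first.select("em")[0].text.strip())   # Mouse A

# Fuzzy matching uses the same attribute operators as in the browser console.
print([li["data-sku"] for li in soup.select("li[data-sku^='2']")])  # ['222']
```

Using `find_next_sibling` and `find_previous_sibling` avoids having to rewrite the HTML string before parsing, at the cost of one extra method name to remember.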

+

+

+

+

+

+

+

+ debug: after the 50-minute mark;

+

+# Homework

+

+- Practice css-selector

+- Practice parsing pages with BeautifulSoup

\ No newline at end of file

diff --git "a/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/001.py" "b/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/001.py"

new file mode 100644

index 0000000000000000000000000000000000000000..229d72edceb621b08c99bb6919500be82b3d178a

--- /dev/null

+++ "b/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/001.py"

@@ -0,0 +1,6 @@

+import sys

+print(sys.path)  # print the module search path list

+# ['E:\\PycharmProjects\\train002_1231\\second-python-bootcamp\\第二期训练营\\5班\\5班_云\\第9周0222--0228\\9-3\\jd_crawler', 'E:\\PycharmProjects\\train002_1231\\second-python-bootcamp', 'E:\\PycharmProjects\\train002_1231\\second-python-bootcamp\\第二期训练营\\5班\\5班_云\\第9周0222--0228\\9-3\\jd_crawler', 'E:\\PycharmProjects\\train002_1231\\second-python-bootcamp\\第二期训练营\\5班\\5班_云\\第9周0222--0228\\9-3', 'D:\\bsoft\\ananaconda\\python37.zip', 'D:\\bsoft\\ananaconda\\DLLs', 'D:\\bsoft\\ananaconda\\lib', 'D:\\bsoft\\ananaconda', 'D:\\bsoft\\ananaconda\\lib\\site-packages', 'D:\\bsoft\\ananaconda\\lib\\site-packages\\win32', 'D:\\bsoft\\ananaconda\\lib\\site-packages\\win32\\lib', 'D:\\bsoft\\ananaconda\\lib\\site-packages\\Pythonwin']

+# sys.path.append(r"H:\PyCharmProjects\tutorials_2")  # inflexible (hard-coded path)

+print("001",__name__)

+# sys.path.append(r"E:\PycharmProjects\Spider_Code\9-3")

diff --git "a/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/jd_parser/detail.py" "b/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/jd_parser/detail.py"

new file mode 100644

index 0000000000000000000000000000000000000000..e69de29bb2d1d6434b8b29ae775ad8c2e48c5391

diff --git "a/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/jd_parser/search.py" "b/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/jd_parser/search.py"

new file mode 100644

index 0000000000000000000000000000000000000000..1d1c7de8d90ceee46722cdda79b5159b969ed987

--- /dev/null

+++ "b/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/jd_parser/search.py"

@@ -0,0 +1,39 @@

+from bs4 import BeautifulSoup

+import json

+

+def parse_jd_item(html):  # parser

+    result = []  # list that collects the parsed results

+

+    soup = BeautifulSoup(html, "lxml")

+    # print(soup)  # debug only: dumps the entire parsed page

+    item_array = soup.select("ul[class='gl-warp clearfix'] li[class='gl-item']")

+    # Console equivalent: $("ul[class='gl-warp clearfix'] li[class='gl-item']")

+    for item in item_array:

+        try:

+            sku_id = item.attrs["data-sku"]

+            img = item.select("img[data-img='1']")

+            price = item.select("div[class='p-price']")

+            title = item.select("div[class='p-name p-name-type-2']")

+            shop = item.select("div[class='p-shop']")

+            icons = item.select("div[class='p-icons']")

+            # print(img)

+

+

+            img = img[0].attrs['data-lazy-img'] if img else ""

+            price = price[0].strong.i.text if price else ""

+            title = title[0].text.strip() if title else ""

+            # Check that a p-shop element was found before indexing into the list.

+            shop = shop[0].a.attrs['title'] if shop and shop[0].text.strip() else ""

+            icons = json.dumps([tag_ele.text for tag_ele in icons[0].select("i")]) if icons else '[]'

+

+            result.append((sku_id, img, price, title, shop, icons))  # collect the result

+        except Exception as e:

+            print(e.args)

+    return result

+

+#

+# if __name__ == "__main__":

+# with open(r"..\\test\\search.html", "r", encoding="utf-8") as f:

+#

+# html = f.read()

+# result = parse_jd_item(html)

+# print(result)

\ No newline at end of file

diff --git "a/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/main.py" "b/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/main.py"

new file mode 100644

index 0000000000000000000000000000000000000000..b3056f5fb2dfbd7e29986db1f876daf392bd6cba

--- /dev/null

+++ "b/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/main.py"

@@ -0,0 +1,61 @@

+import pymysql

+import requests

+import sys

+

+# Machine-specific absolute paths that put the project on the module search path.

+sys.path.append(r"E:\PycharmProjects\Spider_Code\week9_3")

+sys.path.append(r"E:\PycharmProjects\Spider_Code\week9_3\jd_crawler")

+print(sys.path)

+

+from jd_crawler.jd_parser.search import parse_jd_item

+from jd_crawler.settings import MYSQL_CONF, HEADERS

+

+

+def saver(item_array):  # save to the MySQL database

+    """

+    Persist the crawl results.

+    :param item_array: list of (sku_id, img, price, title, shop, icons) tuples

+    :return:

+    """

+    cursor = mysql_con.cursor()  # create a new cursor for each call

+    SQL = """INSERT INTO jd_search(sku_id,img,price, title, shop, icons)

+    VALUES ( %s,%s, %s, %s, %s, %s)"""

+    cursor.executemany(SQL, item_array)

+    mysql_con.commit()  # commit so the rows are written

+    cursor.close()  # close the cursor

+

+def downloader(task):  # downloader

+    """

+    Downloader:

+    the component that requests the target URL.

+    :param task: one search keyword

+    :return: the HTTP response

+    """

+    url = "https://search.jd.com/Search"

+    params = {

+        "keyword": task  # one search keyword from the task list

+    }

+    res = requests.get(url=url, params=params, headers=HEADERS, timeout=10,

+                       # proxies={"https": f"https:144.255.48.62","http": f"http:144.255.48.62"}

+                       )

+    return res

+

+

+def main(task_array):  # main function: the scheduler

+    """

+    Schedule the crawl tasks.

+    :return:

+    """

+    for task in task_array:

+        result = downloader(task)  # downloader

+        item_array = parse_jd_item(result.text)  # parser

+        print("GET ITEMS", item_array)  # debug print to check that each step ran correctly

+        saver(item_array)

+

+

+if __name__ == "__main__":  # entry point

+    # Stands in for a task producer.

+    mysql_con = pymysql.connect(**MYSQL_CONF)

+    task_array = ["鼠标", "键盘", "显卡", "耳机"]  # search keywords: mouse, keyboard, graphics card, headphones

+    main(task_array)

\ No newline at end of file
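The `saver` step in `main.py` depends on each parsed tuple having the same column order as the `INSERT` statement. The sketch below is a minimal way to try that pattern without a MySQL server. It is an assumption-laden stand-in: it uses the standard-library `sqlite3` module with an in-memory database, `?` placeholders instead of pymysql's `%s`, and made-up rows.

```python
import json
import sqlite3

# In-memory SQLite stands in for the MySQL server (an assumption so the
# sketch runs anywhere); the table mirrors the jd_search columns in main.py.
con = sqlite3.connect(":memory:")
con.execute(
    "CREATE TABLE jd_search "
    "(sku_id TEXT, img TEXT, price TEXT, title TEXT, shop TEXT, icons TEXT)"
)

# Hypothetical rows in the same column order that parse_jd_item returns.
item_array = [
    ("111", "//img.example/a.jpg", "59.00", "Mouse A", "Shop A", json.dumps(["self-operated"])),
    ("222", "//img.example/b.jpg", "99.00", "Mouse B", "Shop B", "[]"),
]


def saver(con, item_array):
    """Insert all rows in one batch, then commit."""
    cursor = con.cursor()
    cursor.executemany(
        "INSERT INTO jd_search (sku_id, img, price, title, shop, icons) "
        "VALUES (?, ?, ?, ?, ?, ?)",
        item_array,
    )
    con.commit()
    cursor.close()


saver(con, item_array)
count = con.execute("SELECT COUNT(*) FROM jd_search").fetchone()[0]
print(count)  # 2
```

Passing the connection in as an argument, rather than reading a module-level global, makes the function usable from a test module such as `parser_test.py`.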

diff --git "a/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/test/search.html" "b/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/test/search.html"

new file mode 100644

index 0000000000000000000000000000000000000000..0f67859bf30f108ca01618bdfc3a29b171484be0

--- /dev/null

+++ "b/\347\254\254\344\272\214\346\234\237\350\256\255\347\273\203\350\220\245/5\347\217\255/5\347\217\255_\344\272\221/\347\254\2549\345\221\2500222--0228/jd_crawler/test/search.html"

@@ -0,0 +1,6615 @@

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+ +

+  +

+  +

+  +

+  +

+  +

+  +罗技(Logitech)M220 鼠标 无线鼠标 办公鼠标 静音鼠标 对称鼠标 灰黑色 带无线2.4G接收器

+ 【静无止静,享受静音】无线自由连接,即插即用性微型接收器,稳定流畅的光学追踪【寻找无线静音鼠标,摆脱尾巴束缚,M220了解一下】

+

+罗技(Logitech)M220 鼠标 无线鼠标 办公鼠标 静音鼠标 对称鼠标 灰黑色 带无线2.4G接收器

+ 【静无止静,享受静音】无线自由连接,即插即用性微型接收器,稳定流畅的光学追踪【寻找无线静音鼠标,摆脱尾巴束缚,M220了解一下】

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+