**A library for benchmarking, developing and deploying deep learning anomaly detection algorithms**

---

[Key Features](#key-features) • [Docs](https://anomalib.readthedocs.io/en/latest/) • [Notebooks](examples/notebooks) • [License](LICENSE)


[Pre-merge checks](https://github.com/open-edge-platform/anomalib/actions/workflows/pre_merge.yml) • [Codecov](https://codecov.io/gh/open-edge-platform/anomalib) • [Downloads](https://pepy.tech/project/anomalib) • [Snyk](https://snyk.io/advisor/python/anomalib) • [Documentation status](https://anomalib.readthedocs.io/en/latest/?badge=latest) • [Gurubase](https://gurubase.io/g/anomalib)

---

> 🌟 **Announcing v2.0.0 Release!** 🌟
>
> We're excited to announce the release of Anomalib v2.0.0! This version introduces significant improvements and customization options to enhance your anomaly detection workflows. Please be aware that there are several API changes between `v1.2.0` and `v2.0.0`, so take care when updating your existing pipelines. Key features include:
>
> - Multi-GPU support.
> - New [dataclasses](https://anomalib.readthedocs.io/en/latest/markdown/guides/how_to/data/dataclasses.html) for model inputs and outputs.
> - Flexible configuration of [model transforms and data augmentations](https://anomalib.readthedocs.io/en/latest/markdown/guides/how_to/data/transforms.html).
> - Configurable modules for pre- and post-processing operations via [`Preprocessor`](https://anomalib.readthedocs.io/en/latest/markdown/guides/how_to/models/pre_processor.html) and [`Postprocessor`](https://anomalib.readthedocs.io/en/latest/markdown/guides/how_to/models/post_processor.html).
> - A customizable model evaluation workflow with the new [Metrics API](https://anomalib.readthedocs.io/en/latest/markdown/guides/how_to/evaluation/metrics.html) and [`Evaluator`](https://anomalib.readthedocs.io/en/latest/markdown/guides/how_to/evaluation/evaluator.html) module.
> - A configurable module for visualization via `Visualizer` (docs guide: coming soon).
>
> We value your input! Please share feedback via [GitHub Issues](https://github.com/open-edge-platform/anomalib/issues) or our [Discussions](https://github.com/open-edge-platform/anomalib/discussions).
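
Post-processing typically turns raw model scores into normalized scores and binary predictions. The plain-Python sketch below illustrates one common approach: min-max normalization that maps a chosen decision threshold to 0.5, followed by thresholding. It is a hypothetical illustration, not the code behind Anomalib's `Postprocessor`; the helper names `normalize_scores` and `predict_labels`, as well as the threshold and score range in the example, are assumptions.

```python
from typing import List


def normalize_scores(scores: List[float], threshold: float,
                     min_val: float, max_val: float) -> List[float]:
    """Hypothetical helper: min-max normalize raw scores so `threshold` maps to 0.5.

    Each score is shifted by the threshold, scaled by the observed score range,
    offset by 0.5, and clipped to [0, 1].
    """
    span = max_val - min_val
    if span <= 0:
        raise ValueError("max_val must be greater than min_val")
    normalized = []
    for score in scores:
        value = (score - threshold) / span + 0.5
        normalized.append(min(1.0, max(0.0, value)))
    return normalized


def predict_labels(normalized: List[float], cutoff: float = 0.5) -> List[bool]:
    """Hypothetical helper: flag a sample as anomalous when its score exceeds the cutoff."""
    return [score > cutoff for score in normalized]


# Assumed raw scores and statistics, e.g. collected on a validation set.
raw_scores = [0.5, 2.0, 3.0, 4.5]
norm = normalize_scores(raw_scores, threshold=3.0, min_val=0.0, max_val=5.0)
print(norm)
print(predict_labels(norm))
```

Mapping the threshold to 0.5 means downstream code can use a fixed cutoff regardless of the raw score scale of the model that produced the scores.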

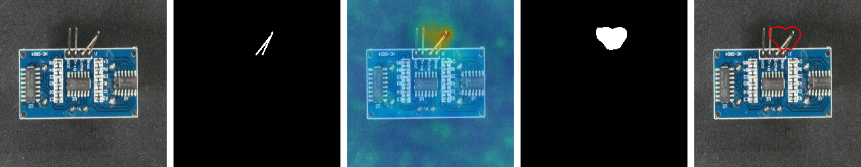

# 👋 Introduction

Anomalib is a deep learning library that aims to collect state-of-the-art anomaly detection algorithms for benchmarking on both public and private datasets. Anomalib provides several ready-to-use implementations of anomaly detection algorithms described in the recent literature, as well as a set of tools that facilitate the development and implementation of custom models. The library has a strong focus on visual anomaly detection, where the goal of the algorithm is to detect and/or localize anomalies within images or videos in a dataset. Anomalib is constantly updated with new algorithms and training/inference extensions, so keep checking!
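
To make the difference between *detecting* and *localizing* an anomaly concrete, the sketch below derives both from a per-pixel anomaly map: an image-level score and label (detection) and a pixel-level mask (localization). It is a minimal, stdlib-only illustration under stated assumptions: the `Prediction` dataclass and the `predict` function are hypothetical and not Anomalib's own dataclasses or API, and using the maximum of the map as the image score is one common choice, not necessarily what any particular Anomalib model does.

```python
from dataclasses import dataclass
from typing import List

AnomalyMap = List[List[float]]


@dataclass
class Prediction:
    """Hypothetical container for one image's outputs (not Anomalib's dataclass)."""
    pred_score: float            # image-level score used for detection
    pred_label: bool             # True if the image is predicted anomalous
    pred_mask: List[List[bool]]  # pixel-level mask used for localization


def predict(anomaly_map: AnomalyMap, threshold: float) -> Prediction:
    """Hypothetical helper: derive detection and localization outputs from a map."""
    if not anomaly_map or not anomaly_map[0]:
        raise ValueError("anomaly_map must be a non-empty 2D grid")
    # Detection (assumed strategy): summarize the whole map by its maximum value.
    score = max(max(row) for row in anomaly_map)
    # Localization: mark every pixel whose score exceeds the threshold.
    mask = [[value > threshold for value in row] for row in anomaly_map]
    return Prediction(pred_score=score, pred_label=score > threshold, pred_mask=mask)


# Toy 3x3 anomaly map with one high-scoring pixel in the center.
example_map = [
    [0.1, 0.2, 0.1],
    [0.2, 0.9, 0.3],
    [0.1, 0.2, 0.1],
]
result = predict(example_map, threshold=0.5)
print(result.pred_score, result.pred_label)
for row in result.pred_mask:
    print(row)
```

A model that only detects anomalies needs just `pred_score` and `pred_label`; localization additionally requires the per-pixel map from which `pred_mask` is derived.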
